In this blog post, we will get hands-on experience with Kubernetes taints and tolerations. Taints placed on a set of nodes prevent PODs from running on those nodes. Tolerations defined within the POD's specification define exceptions to that rule: they allow the POD to ignore the matching taint.

We will look in detail at what happens to PODs with tolerations that do not match the taint of a node. Moreover, we will investigate what happens to already running PODs if a taint with no matching toleration is added to a node. Lastly, we will investigate the difference between the "NoSchedule", "NoExecute" and "PreferNoSchedule" effects.

Step 0: Preparations

Step 0.1: Access the Kubernetes Playground

As always, we start by accessing the Katacoda Kubernetes Playground. We click the launch.sh command and then type the following commands, so we can use auto-completion together with the predefined alias k for kubectl:

source <(kubectl completion bash) # optional, because katacoda has done that for us already
alias k=kubectl # optional, because katacoda has done that for us already
complete -F __start_kubectl k

As an example, k g<tab> will be completed to k get. Also nice: k get pod <tab> will reveal the name of the POD(s).

Note: the last command, which is found in the official Kubernetes documentation, can also be replaced by the command we used in the previous blog posts:

source <(kubectl completion bash | sed 's/kubectl/k/g')

Task 1: Make sure PODs are not scheduled on node01

The Katacoda Kubernetes Playground provides us with a master and a worker node (node01). In this task, we try to run a POD on the master by using a taint to repel it from node01.

Step 1.1: Place a NoSchedule Taint on node01

Let us put a taint on node01 and observe the effect on scheduled PODs.

kubectl taint node node01 myKey=myValue:NoSchedule

Note: If we run PODs with no nodeSelectors on two nodes that have no taints, the PODs are scheduled on both nodes, as we demonstrated in task 2 of the previous blog post on labels and node selectors. However, we have seen that the first few PODs are scheduled on node01 instead of the master. Therefore, we put the taint on node01 to see the effect of repelling PODs more easily.
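The taint argument used above follows the pattern key=value:effect. As a rough illustration of how such a string breaks down into its parts, here is a minimal Python sketch. The names Taint and parse_taint are made up for this post and are not part of kubectl or any Kubernetes library.

```python
from typing import NamedTuple, Optional

VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


class Taint(NamedTuple):
    key: str
    value: Optional[str]  # None if the taint was given without "=value"
    effect: str


def parse_taint(spec: str) -> Taint:
    """Split a 'key=value:effect' or 'key:effect' string into its parts."""
    # The effect is whatever follows the last colon.
    key_value, sep, effect = spec.rpartition(":")
    if not sep or effect not in VALID_EFFECTS:
        raise ValueError(f"missing or unknown effect in {spec!r}")
    # The value is optional and follows the first equals sign.
    key, eq, value = key_value.partition("=")
    if not key:
        raise ValueError(f"missing key in {spec!r}")
    return Taint(key, value if eq else None, effect)


print(parse_taint("myKey=myValue:NoSchedule"))
print(parse_taint("node-role.kubernetes.io/master:NoSchedule"))
```

The second call shows that a taint without a value, like the one we will find on the master, fits the same pattern.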

We can now see the taint via kubectl describe nodes:

kubectl describe nodes | egrep "Name:|Taints:"
Name: master
Taints: node-role.kubernetes.io/master:NoSchedule
Name: node01
Taints: myKey=myValue:NoSchedule

Okay, we see that the master has a taint as well. We will keep it for now to see how it influences our PODs. If it prevents the PODs from running on the master, we will remove it later.
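If we prefer to read the taints programmatically instead of grepping the describe output, we could parse the JSON returned by kubectl get nodes -o json. The following sketch assumes the usual node list layout (items, metadata.name, spec.taints). The function name taints_by_node and the sample data are invented for illustration; the sample is hand-written to mirror our two playground nodes and is not real cluster output.

```python
import json


def taints_by_node(node_list_json: str) -> dict:
    """Map each node name to a list of 'key[=value]:effect' strings."""
    result = {}
    for node in json.loads(node_list_json)["items"]:
        # spec.taints is absent when a node has no taints at all.
        taints = node.get("spec", {}).get("taints") or []
        formatted = []
        for taint in taints:
            key_value = taint["key"]
            if taint.get("value"):
                key_value += "=" + taint["value"]
            formatted.append(f"{key_value}:{taint['effect']}")
        result[node["metadata"]["name"]] = formatted
    return result


# Hand-written sample that mimics the playground (assumption, not captured output).
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "master"},
         "spec": {"taints": [{"key": "node-role.kubernetes.io/master",
                              "effect": "NoSchedule"}]}},
        {"metadata": {"name": "node01"},
         "spec": {"taints": [{"key": "myKey", "value": "myValue",
                              "effect": "NoSchedule"}]}},
    ]
})

for name, taints in taints_by_node(SAMPLE).items():
    print(name, taints or "<none>")
```

On a real cluster, the JSON string could come from running the kubectl command through a subprocess call; that step is left out here so the sketch runs without a cluster.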

Step 1.2: Create PODs with no Tolerations

Let us now create a set of PODs. This time, we create PODs by creating a Kubernetes deployment:

kubectl create deployment nginx --image=nginx
deployment.apps/nginx created

Let us check the corresponding POD:

kubectl get pods -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
nginx-65f88748fd-26ctr   0/1     Pending   0          11s   <none>   <none>   <none>           <none>

Step 1.3: Retrieve the Events

The POD is in Pending. Let us retrieve the event messages:

kubectl describe pods | grep Events -A 10
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  47s (x4 over 3m33s)  default-scheduler  0/2 nodes are available: 2 node(s) had taints that the pod didn't tolerate.

It tells us that the scheduler could not find a node for the POD: each of the two nodes has a taint that the POD does not tolerate.

Step 1.4: Check Taints of the Nodes

Let us print the taints of the nodes again:

kubectl describe nodes | egrep "Name:|Taints:"
Name: master
Taints: node-role.kubernetes.io/master:NoSchedule
Name: node01
Taints: myKey=myValue:NoSchedule

Okay, that is true; both nodes have taints. Since the POD has no toleration configured, it cannot be scheduled on either of the two nodes.

Note: The node-role.kubernetes.io/master:NoSchedule taint is found on each master node by default. It makes sure that normal workloads do not run on the master, so the master is not overwhelmed and the Kubernetes API keeps working without performance problems.

Step 1.5: Remove Taint from the Master

To allow the scheduler to place the POD on the master, let us get rid of the standard taint of the master:

kubectl taint node master node-role.kubernetes.io/master-
node/master untainted

Step 1.6: Verify that the POD is running on the Master

Now the POD should run on the master:

k get pods -o wide
NAME                     READY   STATUS              RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
nginx-65f88748fd-26ctr   0/1     ContainerCreating   0          10m   <none>   master   <none>           <none>

We were quick enough to catch the ContainerCreating status, which will turn into a Running status within seconds.

Step 1.7: Remove the Taint from node01

To check what happens to running PODs if a taint is removed from a node, we remove the taint from node01:

kubectl taint node node01 myKey-
node/node01 untainted

Step 1.8: Verify that the POD is still running on the Master

We will now see whether this has any effect on the POD:

kubectl get pods -o wide
NAME                     READY   STATUS    RESTARTS   AGE     IP          NODE     NOMINATED NODE   READINESS GATES
nginx-65f88748fd-s5k9z   1/1     Running   0          3m52s   10.32.0.4   master   <none>           <none>

No, it does not. The existing PODs will still be running on the master.

Step 1.9: Add POD by Scaling the Deployment

However, what happens to newly created PODs? We will find out by scaling the Deployment from one replica to two replicas:

kubectl scale deployment nginx --replicas=2
deployment.extensions/nginx scaled

Step 1.10: Verify that the second POD is scheduled on node01

kubectl get pods -o wide
NAME                     READY   STATUS    RESTARTS   AGE    IP          NODE     NOMINATED NODE   READINESS GATES
nginx-65f88748fd-rx4qm   1/1     Running   0          89s    10.44.0.1   node01   <none>           <none>
nginx-65f88748fd-s5k9z   1/1     Running   0          8m5s   10.32.0.4   master   <none>           <none>

We see that the scheduler has chosen to spin up the second POD on node01, since the taint that had prevented this has been removed from node01.

Summary Task 1

In this task, we have shown which effects a NoSchedule taint has on a POD:

- The scheduler will spin up new PODs with no tolerations only on nodes with no taint.

- If all nodes are tainted, a newly created POD with no toleration will enter the Pending status.

- After we remove the taint from a node, the scheduler will spin up pending PODs on that node.

- Once a POD is running on a node, it is not affected by adding or removing NoSchedule taints on that node.
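The scheduling behaviour summarized above can be replayed with a small Python model. This is only a sketch under simplifying assumptions: the POD has no tolerations, taints are plain (key, value, effect) tuples, and the function names feasible_nodes and schedule are invented here. The real scheduler considers many more criteria.

```python
# Effects that keep a POD without tolerations off a node.
BLOCKING_EFFECTS = {"NoSchedule", "NoExecute"}


def feasible_nodes(node_taints: dict) -> list:
    """Return the nodes a POD with no tolerations may be scheduled on."""
    return [
        node
        for node, taints in node_taints.items()
        if not any(effect in BLOCKING_EFFECTS for _key, _value, effect in taints)
    ]


def schedule(node_taints: dict) -> str:
    """Describe what happens to a new POD with no tolerations."""
    nodes = feasible_nodes(node_taints)
    if not nodes:
        return f"Pending: 0/{len(node_taints)} nodes are available"
    return "schedulable on: " + ", ".join(nodes)


# Steps 1.1 to 1.3: both nodes are tainted.
cluster = {
    "master": [("node-role.kubernetes.io/master", "", "NoSchedule")],
    "node01": [("myKey", "myValue", "NoSchedule")],
}
print(schedule(cluster))

# Step 1.5: the master taint is removed.
cluster["master"] = []
print(schedule(cluster))

# Step 1.7: the taint on node01 is removed as well.
cluster["node01"] = []
print(schedule(cluster))
```

The three printed lines correspond to the Pending POD, the POD landing on the master, and the state in which both nodes are available again.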

Task 2: Tolerate Taints

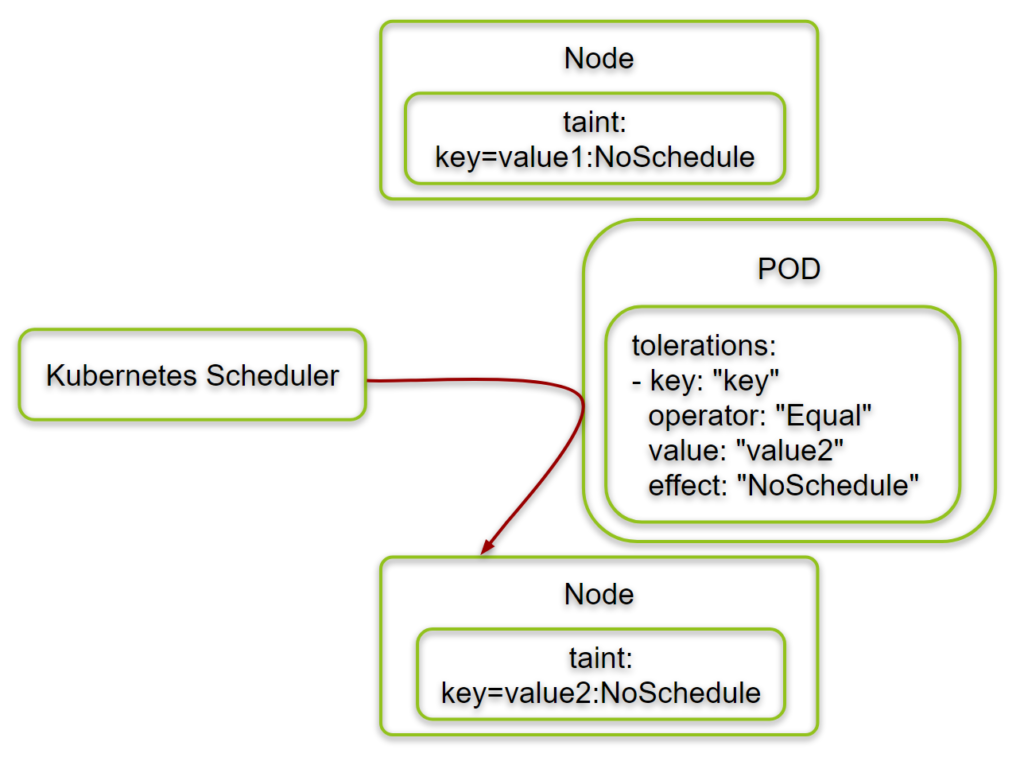

In this task, we will show how PODs can be configured to tolerate a taint. I.e., we will configure the PODs to be scheduled on a node, even if this node has a taint.

Step 2.1: Add Taint to node01 (again)

Here we add the taint to node01 again.

kubectl taint node node01 myKey=myValue:NoSchedule
node/node01 tainted

To keep task 2 independent of task 1, we make sure that the master is untainted:

kubectl taint node master node-role.kubernetes.io/master-
# output: node/master untainted
# or: error: taint "node-role.kubernetes.io/master:" not found
# depending on whether the taint is already removed or not.

Step 2.2: Create PODs with taint Tolerations

According to the documentation, the tolerations can be placed inside the POD spec as follows:

# snippet:
tolerations:
- key: "key"
  operator: "Equal" #<---------- optional, since this is the default
  value: "value"
  effect: "NoSchedule"

Note:

- The operator: "Equal" line can be omitted, since this is the default operator.

- The value: "..." line must be left out if the operator is "Exists" instead, since no value is compared in that case.

- If the effect is omitted, the toleration will match any effect (i.e. "NoSchedule", "PreferNoSchedule" and "NoExecute").

- If the key is omitted and the operator is "Exists", the POD will tolerate any taint.

We can use this snippet and place it inside the POD spec with the correct indentation:

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:
      - key: "myKey"
        operator: "Equal"
        value: "myValue"
        effect: "NoSchedule"
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
EOF

Step 2.2: Check that the PODs are running on both Nodes

Even though node01 is tainted, the 10 PODs are distributed among both nodes, master, and node01:

k get pods -o wide
NAME                                READY   STATUS    RESTARTS   AGE    IP          NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-7cd7c86dd7-6vgs6   1/1     Running   0          2m2s   10.32.0.2   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-7bnh4   1/1     Running   0          14s    10.32.0.6   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-hm9jx   1/1     Running   0          14s    10.32.0.5   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-mc844   1/1     Running   0          14s    10.32.0.7   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-qf5kr   1/1     Running   0          2m2s   10.32.0.3   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-qtwgl   1/1     Running   0          14s    10.40.0.2   master   <none>           <none>
nginx-deployment-7cd7c86dd7-rghvl   1/1     Running   0          14s    10.32.0.8   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-sccjj   1/1     Running   0          14s    10.32.0.4   node01   <none>           <none>
nginx-deployment-7cd7c86dd7-t2hb4   1/1     Running   0          2m2s   10.40.0.1   master   <none>           <none>
nginx-deployment-7cd7c86dd7-tw4sl   1/1     Running   0          14s    10.32.0.9   node01   <none>           <none>

Step 2.3: Change Toleration Value

As a double-check, let us edit the deployment as follows:

kubectl edit deploy nginx-deployment
...
      tolerations:
      - effect: NoSchedule
        key: myKey
        operator: Equal
        value: otherValue # <----------- changed
...
deployment.extensions/nginx-deployment edited

Step 2.4: Verify that now all PODs are running on the Master

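Now the toleration does not match the taint of node01:

kubectl describe nodes | egrep "Name:|Taints:"
Name: master
Taints: <none>
Name: node01
Taints: myKey=myValue:NoSchedule

This is the reason why no PODs are running on node01 anymore: editing the Deployment triggers a rollout, and the replacement PODs, which no longer tolerate the taint, can only be scheduled on the master: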

kubectl get pods -o wide
NAME                               READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-cf79bd8bf-87knt   1/1     Running   0          23s   10.40.0.7    master   <none>           <none>
nginx-deployment-cf79bd8bf-8tl72   1/1     Running   0          19s   10.40.0.2    master   <none>           <none>
nginx-deployment-cf79bd8bf-ffbvm   1/1     Running   0          23s   10.40.0.5    master   <none>           <none>
nginx-deployment-cf79bd8bf-jmg65   1/1     Running   0          19s   10.40.0.9    master   <none>           <none>
nginx-deployment-cf79bd8bf-jwrbh   1/1     Running   0          23s   10.40.0.6    master   <none>           <none>
nginx-deployment-cf79bd8bf-l9sbk   1/1     Running   0          23s   10.40.0.4    master   <none>           <none>
nginx-deployment-cf79bd8bf-lbwtl   1/1     Running   0          23s   10.40.0.3    master   <none>           <none>
nginx-deployment-cf79bd8bf-q6kcn   1/1     Running   0          19s   10.40.0.11   master   <none>           <none>
nginx-deployment-cf79bd8bf-s99vt   1/1     Running   0          19s   10.40.0.10   master   <none>           <none>
nginx-deployment-cf79bd8bf-wpnm8   1/1     Running   0          20s   10.40.0.8    master   <none>           <none>

Note: you might have to wait a minute or two, until all PODs of the Deployment are replaced by the new version.

Summary Task 2

In this task, we have seen that we can specify a toleration in the POD's spec that allows the scheduler to spin up the POD on a tainted node if the taint matches the toleration. Tolerations work with the operators "Equal" or "Exists" and can be used to match a certain value and/or effect.
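To make the matching rules of this task more tangible, here is a minimal Python sketch of how a toleration could be compared with a taint. It follows the rules described in this post (default operator "Equal", an omitted effect matches every effect, "Exists" without a key matches every taint). The function name tolerates and the dictionary layout are assumptions made for illustration and are not the scheduler's actual code.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if the toleration matches the given taint."""
    # An omitted effect matches every effect.
    effect = toleration.get("effect")
    if effect and effect != taint["effect"]:
        return False

    # An omitted key matches every key (only meaningful with "Exists").
    key = toleration.get("key")
    if key and key != taint["key"]:
        return False

    operator = toleration.get("operator", "Equal")  # "Equal" is the default
    if operator == "Exists":
        return True
    if operator == "Equal":
        # In this sketch, "Equal" needs a key and compares the values.
        return bool(key) and toleration.get("value", "") == taint.get("value", "")
    return False  # unknown operator


node01_taint = {"key": "myKey", "value": "myValue", "effect": "NoSchedule"}

matching = {"key": "myKey", "operator": "Equal", "value": "myValue", "effect": "NoSchedule"}
changed = {"key": "myKey", "operator": "Equal", "value": "otherValue", "effect": "NoSchedule"}

print(tolerates(matching, node01_taint))  # the toleration from step 2.2
print(tolerates(changed, node01_taint))   # the edited toleration from step 2.3
```

The first call models the Deployment whose PODs were allowed on node01, the second one the edited Deployment whose PODs all ended up on the master.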

Task 3: Move running PODs from a Node

In this task, we will move PODs away from a node they are already running on by applying a taint with the "NoExecute" effect to that node.

Step 3.1: Restart the Playground

We will restart the Katacoda Kubernetes Playground, so that we find the following taints on the nodes:

kubectl describe nodes | egrep "Name:|Taints:"
Name: master
Taints: node-role.kubernetes.io/master:NoSchedule
Name: node01
Taints: <none>

Step 3.2: Run PODs without any Toleration

We will run PODs by creating a Deployment as follows:

k create deploy nginx-deployment --image=nginx
deployment.apps/nginx-deployment created

Step 3.3: Check that the POD is running on node01

Because of the master’s taint, the PODs will not be scheduled on the master, but on node01 instead:

master $ k get pods -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-7cf89f8c94-kw4tl   1/1     Running   0          11s   10.40.0.1   node01   <none>           <none>

Step 3.4: Add a NoExecute Taint on node01

We now add a taint on node01 with a NoExecute effect:

kubectl taint node node01 myKey=myValue:NoExecute
node/node01 tainted

Step 3.5: Verify that the PODs on node01 are terminated

If you are quick enough, you will see that the POD on node01 is terminated.

kubectl get pods -o wide
NAME                                READY   STATUS        RESTARTS   AGE    IP       NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-7cf89f8c94-kw4tl   0/1     Terminating   0          4m3s   <none>   node01   <none>           <none>
nginx-deployment-7cf89f8c94-sz9xd   0/1     Pending       0          6s     <none>   <none>   <none>           <none>

Shortly thereafter the original POD is gone and is replaced by a Pending POD:

kubectl get pods -o wide
NAME                                READY   STATUS    RESTARTS   AGE    IP       NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-7cf89f8c94-sz9xd   0/1     Pending   0          3m7s   <none>   <none>   <none>           <none>

The new POD is Pending, since there is no node left without a taint:

kubectl describe pods nginx-deployment-7cf89f8c94-sz9xd | grep -A 10 Events
Events:
  Type     Reason            Age                  From               Message
  ----     ------            ----                 ----               -------
  Warning  FailedScheduling  16s (x7 over 4m44s)  default-scheduler  0/2 nodes are available: 2 node(s) had taints that the pod didn't tolerate.

Step 3.6: Remove Taint of the master

To allow the POD to be scheduled, we either need to add a toleration, or we need to remove a taint. We choose to remove the taint of the master:

kubectl taint node master node-role.kubernetes.io/master:NoSchedule-
node/master untainted

Note: this time we have applied a different syntax than before by specifying the taint effect.
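The two removal syntaxes we have used so far (key- and key:effect-) differ only in how specific they are. The following Python sketch models that difference on a list of (key, value, effect) tuples. The helper names parse_removal and remove_taints are hypothetical, and the sketch is an approximation of the behaviour we observed, not kubectl's implementation.

```python
def parse_removal(spec: str):
    """Split 'key-' or 'key:effect-' into (key, effect); effect may be None."""
    if not spec.endswith("-"):
        raise ValueError(f"{spec!r} is not a removal (no trailing '-')")
    body = spec[:-1]
    key_part, sep, effect = body.rpartition(":")
    if not sep:
        # No colon: only the key was given, so every effect is meant.
        key_part, effect = body, None
    # Drop an optional '=value' part; removal is matched on the key.
    key = key_part.partition("=")[0]
    return key, effect


def remove_taints(taints: list, spec: str) -> list:
    """Return the taints that remain after applying the removal spec."""
    key, effect = parse_removal(spec)
    return [
        taint
        for taint in taints
        if not (taint[0] == key and (effect is None or taint[2] == effect))
    ]


master_taints = [("node-role.kubernetes.io/master", "", "NoSchedule")]

# Syntax from steps 1.5 and 2.1: key only.
print(remove_taints(master_taints, "node-role.kubernetes.io/master-"))
# Syntax from step 3.6: key and effect.
print(remove_taints(master_taints, "node-role.kubernetes.io/master:NoSchedule-"))
```

Both calls leave an empty list here, because the master carries only one taint with that key. The effect-specific form would matter if a node had several taints sharing the same key.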

Step 3.7: Verify that the POD is running on the master

Now the scheduler is spinning up the POD on the master:

kubectl get pods -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE     NOMINATED NODE   READINESS GATES
nginx-deployment-7cf89f8c94-sz9xd   1/1     Running   0          11m   10.32.0.4   master   <none>           <none>

Summary Task 3

A taint with the "NoExecute" effect will terminate any POD running on the tainted node that has no matching toleration.
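The difference between adding a "NoSchedule" and a "NoExecute" taint to a node with running PODs can be sketched in a few lines of Python. The model is deliberately simple: tolerations are exact (key, value, effect) tuples, and an evicted POD is just marked as unscheduled instead of being replaced by a new POD with a new name, as the Deployment did in our test. The function name add_taint and the POD names are made up for this sketch.

```python
def add_taint(node_taints: dict, pods: dict, node: str, taint: tuple) -> list:
    """Add a taint to a node and return the names of the PODs evicted from it."""
    node_taints.setdefault(node, []).append(taint)
    if taint[2] != "NoExecute":
        # NoSchedule and PreferNoSchedule leave running PODs alone.
        return []
    evicted = []
    for name, pod in pods.items():
        if pod["node"] == node and taint not in pod["tolerations"]:
            pod["node"] = None  # simplified: the POD is no longer placed anywhere
            evicted.append(name)
    return evicted


node_taints = {
    "master": [("node-role.kubernetes.io/master", "", "NoSchedule")],
    "node01": [],
}
pods = {
    "nginx": {"node": "node01", "tolerations": []},
    "tolerant": {"node": "node01",
                 "tolerations": [("myKey", "myValue", "NoExecute")]},
}

# A NoSchedule taint does not touch running PODs.
print(add_taint(node_taints, pods, "node01", ("myKey", "myValue", "NoSchedule")))
# A NoExecute taint evicts the POD without a matching toleration.
print(add_taint(node_taints, pods, "node01", ("myKey", "myValue", "NoExecute")))
```

The hypothetical "tolerant" POD stays on node01, which is the case we did not test in this task: a POD with a matching NoExecute toleration is not terminated.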

Summary

In this session, we have investigated Kubernetes taints and tolerations. Nodes can be tainted with

- an arbitrary key,

- an arbitrary value, and

- an effect with one of the values "NoSchedule", "PreferNoSchedule" and "NoExecute".

A node that has a taint with

- the "NoSchedule" effect will prevent PODs from being scheduled on this node, unless the POD has a matching toleration defined,

- the "NoExecute" effect will additionally terminate any running PODs without matching tolerations on the node, while

- the "PreferNoSchedule" effect is a soft requirement: the scheduler tries to avoid placing a POD with no matching toleration on such a node, but will do so if the POD would otherwise enter the "Pending" status.
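Since we have not tested "PreferNoSchedule" hands-on, here is a small Python sketch of the idea of a soft requirement. It assumes a POD with no tolerations and a heavily simplified scheduler that first filters out nodes with hard taints and then prefers the node with the fewest "PreferNoSchedule" taints. The function name pick_node is invented, and the real scheduler weighs this preference against other scoring criteria.

```python
HARD_EFFECTS = {"NoSchedule", "NoExecute"}


def pick_node(node_effects: dict):
    """Pick a node for a POD with no tolerations, or None if it stays Pending.

    node_effects maps a node name to the list of taint effects on that node.
    """
    # Hard taints remove a node from the candidate list entirely.
    candidates = {
        node: effects.count("PreferNoSchedule")
        for node, effects in node_effects.items()
        if not HARD_EFFECTS & set(effects)
    }
    if not candidates:
        return None
    # Soft taints only lower the preference; ties are broken by name.
    return min(sorted(candidates), key=candidates.get)


# An untainted node is preferred over a node with a soft taint.
print(pick_node({"master": ["PreferNoSchedule"], "node01": []}))
# Without an alternative, the softly tainted node is still used.
print(pick_node({"master": ["PreferNoSchedule"], "node01": ["NoSchedule"]}))
# With only hard taints, the POD stays Pending.
print(pick_node({"master": ["NoSchedule"], "node01": ["NoExecute"]}))
```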

PODs can be configured to tolerate certain taints. We have the following cases for tolerations:

- operator="Exists" and no other parameter -> will tolerate any taint

- operator="Exists" and key="myKey" -> will tolerate any taint with the "myKey" key

- operator="Equal" and key="myKey" and value="myValue" -> will tolerate taints with the corresponding key and value

- operator="Equal" and key="myKey" and value="myValue" and effect="NoSchedule" -> will tolerate taints with the corresponding key, value, and effect

The operator "Equal" is the default operator and can be omitted.