What if you could release and deploy fully tested, bullet-proof software on a day-by-day basis? Isn’t this a huge effort for hobby developers like me? Even for hobby developers, Docker and its ecosystem promise an easy way to automate the integration and deployment of software, so that continuous integration (CI) and continuous deployment (CD) become easy.

This blog post explores a tiny aspect of this automation framework: the containerization of an application and the automatic creation of a Docker image from source code, with a Rails web application as the target application. For that,

- we will create and test a so-called Dockerfile that describes all automation steps, e.g. which base image to use, which files to copy, which commands to perform and which ports to expose.

- in a second step, we will create a link (via a „webhook“) between the GitHub software repository and the Docker Hub image repository. This will allow us to automatically create a new Docker image in the background each time a code change is pushed to the software repository.

Next possible steps towards full CI/CD (continuous integration/continuous deployment) are discussed at the end of the blog post.

Note: the procedure requires Internet downloads of more than 1 GB. If you are interested in a more lightweight „Hello World“ example with (probably) less than 15 MB of downloads, you might want to have a look at this example: https://github.com/oveits/docker-nginx-busybox.

Why Docker?

Here are the reasons why I have chosen to containerize my web application using Docker:

- similar to hypervisor-based virtualization technologies, container technologies help to create portable application images by bundling libraries with the application and by providing an environment that is independent from other applications.

- compared to hypervisor-based virtualization technologies, the resulting images are much more lightweight and thus much easier to handle and share. A layered container design further reduces latencies and download volumes, since unchanged layers can be cached.

- when comparing the performance of Rails on Windows with dockerized Rails on Windows, we experienced a 50% performance gain. For details, see here.

- when comparing Docker with other container technologies, we find that Docker is by far the most popular container technology as of today.

See also this blog for more details.

Manual Build from Dockerfile

Prerequisites

- Install Docker, if not already done. In this blog post, I show how this can be done with Vagrant in less than 10 minutes, if Vagrant and VirtualBox are installed. Here we will need only a single host, so you can skip the etcd discovery part and the change of the num_instances variable.

You may also want to test the new official way of installing Docker using the Docker Toolbox (at the time I started with Docker, the official way of installing Docker on Windows was based on boot2docker and had resulted in a nightmare…).

- Install git on the docker host, if it is not installed already (check with „git --version“ on the docker host’s command line interface).

Fork and download the Rails App from Github

On the docker host, clone the application’s repository and change into its directory by issuing the following commands:

git clone https://github.com/oveits/ProvisioningEngine
cd ProvisioningEngine

Dockerfile

Note that the Dockerfile is already part of the git repository you have cloned above, so this subsection is for your information only.

The Dockerfile describes the automation steps during the creation of a Docker image. Among other things, the Dockerfile specifies

- the base image (i.e. the official „rails“ image in this case),

- the commands that are run within the image container during the build process,

- the files to be copied from the docker host to the image container,

- the TCP ports to be exposed to the outside world,

- the default command that is run within a container (e.g. a container started with „docker run“).

In our case, the Dockerfile looks as follows:

FROM rails
# refresh the package lists and install "vi" and "less" for easier troubleshooting later on:
RUN apt-get update && apt-get install -y vim less
# copy the ProvisioningEngine app to the container:
ADD . /ProvisioningEngine
# define the working directory:
WORKDIR /ProvisioningEngine
# install the Rails gems and prepare the database (&& stops the build if bundle install fails):
RUN bundle install && bundle exec rake db:migrate RAILS_ENV=development
# expose TCP port 80:
EXPOSE 80
# default command: run the web server on port 80:
CMD ["rails", "server", "-p", "80"]

Build Docker Image

Now we will build the image. We assume direct Internet access via a NAT firewall, without the need to pass an HTTP proxy. If an HTTP proxy is involved, note that both the docker host and the container image need to be prepared for that.

docker build --tag=provisioningengine_manual:latest .

You will see in the log that a new image layer is created at each step. This may take a while.
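If the build has to go through an HTTP proxy, the proxy settings can be handed to the build without changing the Dockerfile. The following is a minimal sketch, assuming a bash shell and a Docker version that supports --build-arg; build_image is a hypothetical helper name, and it relies on http_proxy, https_proxy and no_proxy being predefined build arguments in Docker. It covers only the build steps; the docker daemon needs its own proxy configuration for pulling the base image.

```bash
# Hypothetical helper: same as "docker build --tag=... ." above, but forwards
# proxy settings from the current shell as build arguments.
# http_proxy, https_proxy and no_proxy are predefined build args in Docker,
# so the Dockerfile does not need ARG lines for them.
build_image() {
  local tag="${1:-provisioningengine_manual:latest}"
  local args=() var
  for var in http_proxy https_proxy no_proxy; do
    # ${!var} is bash indirect expansion: the value of the variable named in $var.
    if [ -n "${!var:-}" ]; then
      args+=(--build-arg "$var=${!var}")
    fi
  done
  docker build "${args[@]}" --tag="$tag" .
}
```

Run it from the ProvisioningEngine directory, e.g. as http_proxy=http://proxy.example.com:8080 build_image (the proxy URL is a placeholder). Afterwards, docker history provisioningengine_manual:latest lists the layers created by the individual Dockerfile steps.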

Test the Docker Image

On the docker host, stop any container that might be running on port 80 (check with „docker ps“ and stop the container with „docker stop <container-id>“). Then run:

ID=$(docker run -d -p 80:80 provisioningengine_manual); sleep 5; docker logs $ID
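The fixed „sleep 5“ may be too short while the Rails server is still booting. As a sketch, assuming bash and curl on the docker host, the hypothetical helper wait_for_http below polls the URL once per second until the server answers or a timeout expires. It relies on curl exiting with 0 for any HTTP response (including the redirect shown below) and with a non-zero code while the connection is still refused.

```bash
# Hypothetical helper: poll a URL until it answers or the timeout (in seconds) expires.
wait_for_http() {
  local url="$1" timeout="${2:-60}" waited=0
  # Without --fail, curl returns 0 for any HTTP answer, e.g. a 302 redirect.
  until curl --silent --output /dev/null --max-time 5 "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timeout after ${timeout}s waiting for $url" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$url is up after ${waited}s"
}
```

For example: ID=$(docker run -d -p 80:80 provisioningengine_manual); wait_for_http http://localhost 60; docker logs $ID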

Verify that the Web Server is operational

Now test the web server by issuing the command

curl localhost

on the docker host command line. The output should look like

<html><body>You are being <a href="http://localhost/customers">redirected</a>.</body></html>

Troubleshooting Steps

The logs can be retrieved any time by repeating the „docker logs“ command. If there is no output because the container exits right away (i.e. „docker ps“ does not list a running container based on the image provisioningengine_manual) and you want to find out why, you can start an interactive session:

docker run -it -p 80:80 provisioningengine_manual

If you want to access the Linux shell in the container (from there, you can start the Rails server manually by issuing „rails server -p 80“), start a container with

docker run -it -p 80:80 provisioningengine_manual /bin/bash
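An exited container keeps its exit code and logs until it is removed. A minimal sketch, assuming bash; show_last_exit is a hypothetical helper that takes the most recently created container of the image (it relies on „docker ps“ listing the newest container first) and prints its state, exit code and logs:

```bash
# Hypothetical helper: show state, exit code and logs of the newest container of an image.
show_last_exit() {
  local image="${1:-provisioningengine_manual}" id
  # --all includes exited containers; --quiet prints only the container IDs.
  id="$(docker ps --all --quiet --filter "ancestor=$image" | head -n 1)"
  if [ -z "$id" ]; then
    echo "no container found for image $image" >&2
    return 1
  fi
  echo "container $id: $(docker inspect --format '{{.State.Status}} (exit code {{.State.ExitCode}})' "$id")"
  docker logs "$id"
}
```

E.g. show_last_exit provisioningengine_manual; exited containers can be removed afterwards with „docker rm <container-id>“.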

Automatically Triggered Build

Prerequisites

- Install Docker, if not already done (see the prerequisites of the manual build above).

- Sign up for GitHub and Docker Hub, if not already done.

Steps for linking Github and Docker Hub

- Fork the git repository https://github.com/oveits/ProvisioningEngine (Fork button on the upper right). This is needed because you will trigger a new Docker image build by committing a software change to your own repository.

- On your GitHub repository project home (in my case this was https://github.com/oveits/ProvisioningEngine), go via the menu entries on the right to the webhook and service settings -> choose Docker -> enter your Docker Hub password and confirm.

- On Docker Hub Home, left of your username:

-> Create

-> Choose „Create Automated Build“ from the drop-down list

-> Choose your repository

-> Enter a short description (required)

-> Create

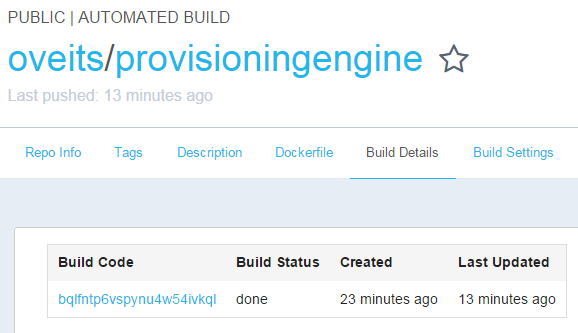

Test the Link between Github and Docker Hub

Test the automatic build trigger by committing a change to the master branch. In my case, I created a new git branch, performed a set of changes (adding a Dockerfile, among others) and merged the branch back into the master branch. For a simple test, however, you can also edit and commit the README.rdoc file online on the master branch of your forked GitHub repository.
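Instead of editing README.rdoc online, the same test can be done from a local clone of your fork. A minimal sketch, assuming bash and git, that you are on the master branch of the clone and that origin points to your GitHub fork; trigger_build is a hypothetical helper that appends a timestamp line to README.rdoc, commits and pushes it:

```bash
# Hypothetical helper: commit a trivial change and push it, which should trigger
# a new automated build on Docker Hub via the GitHub link.
trigger_build() {
  local file="${1:-README.rdoc}" branch="${2:-master}"
  # Append a timestamp, so that there always is a change to commit.
  echo "Build triggered on $(date -u +%Y-%m-%dT%H:%M:%SZ)" >> "$file"
  git add "$file"
  git commit --quiet -m "Trigger Docker Hub automated build"
  git push --quiet origin "$branch"
}
```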

This should trigger a new Docker image build (this may take a while). Check the results on the Docker Hub Build Details tab of the newly created docker repository.

After some time, the Build Status should turn „done“.

Test the Docker Image

Test the docker image by issuing the following commands (change „oveits“ to your Docker Hub username; note that Docker image names must be lowercase):

ID=$(docker run -d -p 80:80 oveits/provisioningengine); echo $ID

Check the container status and the logs with

docker ps

docker logs $ID

And connect to the server by issuing the command

curl localhost

on the docker host. The answer should look like

<html><body>You are being <a href="http://localhost/customers">redirected</a>.</body></html>

Done.

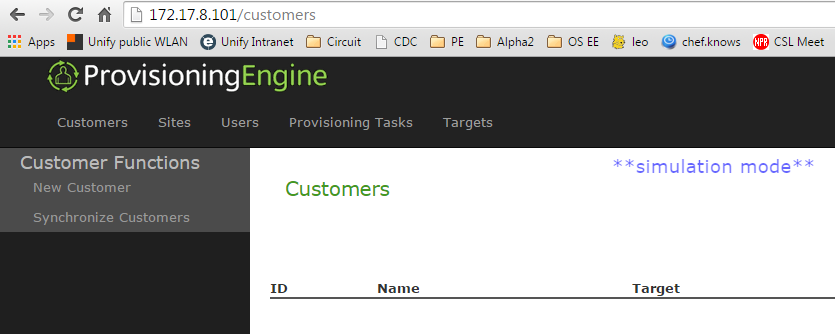

Connecting from an Internet Browser

Docker networking can be somewhat confusing. With the

-p 80:80

option of the docker run command above, we have instructed docker to map the container port 80 to the docker host port 80.

However, in order to reach this port from an Internet browser, the browser must obviously be able to reach the IP address of the docker host.

On the docker host, find the IP addresses by issuing an

ifconfig

command. In my case, this results in an output like (abbreviated):

core@core-01 ~/docker-nginx-busybox $ ifconfig

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.1.42.1 netmask 255.255.0.0 broadcast 0.0.0.0 <--- not reachable from the outside!!

...

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255 <--- not reachable from the outside!!

...

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.8.101 netmask 255.255.255.0 broadcast 172.17.8.255 <--- host-only network -> only reachable from the Virtualbox host

...

In my case, the docker host is a VirtualBox CoreOS VM running on a Windows host, created using Vagrant. The addresses of docker0 (reachable only from the docker host and its containers) and eth0 (a NATed VirtualBox network) are not reachable from the Windows host. Therefore, in the Vagrantfile, I had made sure that the VM is equipped with an additional Ethernet interface eth1 (host-only VirtualBox network) that is reachable from the Windows machine. This is the 172.17.8.101 address of eth1 shown above, so the web server can be reached from a browser on the Windows host via http://172.17.8.101.

If the docker host needs to be reachable from outside the Windows machine, additional care must be taken with respect to routing. Using a bridged network instead of a host-only network can help here, but you can also create a static NAT/PAT rule (port forwarding) within VirtualBox that maps a port of the Windows host’s address to the docker host port.
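To list the candidate addresses without reading through the whole ifconfig output, a small filter can help. A minimal sketch, assuming bash, awk and the newer ifconfig output format shown above (older net-tools versions print „inet addr:10.0.2.15“ instead); ipv4_by_interface is a hypothetical helper name:

```bash
# Hypothetical helper: print "interface IPv4-address" pairs from ifconfig output on stdin.
ipv4_by_interface() {
  awk '
    $1 ~ /:$/    { iface = $1; sub(/:$/, "", iface) }  # header line, e.g. "eth1: flags=..."
    $1 == "inet" { print iface, $2 }                   # IPv4 line, e.g. "inet 172.17.8.101 ..."
  '
}
```

On the docker host, „ifconfig | ipv4_by_interface“ prints one interface and IPv4 address per line; which of them is reachable from your browser still depends on the VirtualBox network type, as described above.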

Summary

We have shown how to automate the Docker build process by adding an appropriate Dockerfile to the application, saving the application software on GitHub, and linking GitHub with the Docker Hub image repository. Now, any time a code change is pushed to GitHub, a new Docker image will be built automatically.

Next Steps towards Continuous Integration

A continuous integration (CI) and continuous deployment (CD) process involves further steps like branching the software, pull requests, merging, automated testing and so on.

As a starting point, you might want to check out part 2 and part 3 of this nice post by Michael Herman, which gives an introduction to CI and CD based on the CircleCI framework.

Other CI/CD frameworks are discussed in the answers to the following question:

I guess I will give Travis CI and/or CircleCI a try…

Hi,

I followed your blog post on building images from source code and I am trying to do the same thing. I have just started using Docker and I want to build the GNU compiler inside the ubuntu image from the source code. So I created the base file from ubuntu; now I have to install the GNU compiler and several other components in it. How can I build it from the source code? Here is what I have tried to do.

FROM ubuntu

RUN apt-get update && apt-get install -y build-essential gcc wget git

RUN git clone https://github.com/gcc-mirror/gcc.git

WORKDIR /gcc

RUN ./configure

RUN make

RUN make install

ENTRYPOINT

But I don’t know what goes in the entrypoint part of the file.

Please tell me how to move ahead. I have to install several other components on top of this, like the openssl image over ubuntu etc. If there is any tutorial or source you can refer me to, it will be of great help to get my basics straight.

@Anvikar: I am updating this misleading answer of mine after reading the Docker documentation more carefully (on 2016-04-22):

In my previous answer, I had recommended not to use ENTRYPOINT at all. However, the recommendation of Docker is only not to use the shell form of ENTRYPOINT, e.g. ENTRYPOINT echo hello world. The exec form of ENTRYPOINT (e.g. ENTRYPOINT ["echo", "hello", "world"]) is fine.

The best practice is to use ENTRYPOINT for the part of the command that does not change, and CMD for the options that change often. E.g. you could define ENTRYPOINT ["echo", "hello"] together with CMD ["world"]. If you perform a docker run imagename with no command, the container will execute echo hello world. However, you can replace the CMD part by specifying it on the docker run command line: e.g. a docker run imagename Anvikar will execute the command echo hello Anvikar.

Original (misleading) answer (I am keeping it for reference, though):

SHORT ANSWER:

If the ENTRYPOINT does not work for you, have you tried a simple example like ENTRYPOINT ["echo", "hello", "world"]? The Docker documentation recommends using the exec form of ENTRYPOINT.

In my previous answer, I recommended replacing ENTRYPOINT by CMD: if ENTRYPOINT is used, any RUN command will be ignored. Also, any command argument of docker run ... command will be ignored if ENTRYPOINT was used in the Dockerfile. At least, this is how I understood the information in the official documentation https://docs.docker.com/engine/reference/builder/.

A command like rails server -p 80 is written in CMD syntax as

CMD ["rails", "server", "-p", "80"]

Try with CMD ["echo", "hello world"], build the image with docker build ... and start the image with docker run image-name.

----

LONG ANSWER:

I am also a newbie to Docker (I started in August, and I have only scratched the surface so far, since I am doing a lot of other stuff), but let me try:

So far, I have never used the ENTRYPOINT. A Dockerfile that has worked for me can be found here: https://github.com/oveits/ProvisioningEngine/blob/master/Dockerfile and in my blog.

The documentation about Dockerfiles can be found here: https://docs.docker.com/engine/reference/builder/

Okay, there I can see that ENTRYPOINT and CMD are substitutes for each other, but ENTRYPOINT has the disadvantage that any RUN argument is ignored. Also, any docker run command argument is ignored (docker run image-name run_command). This is not a good idea, I think. Better use CMD instead.

Try with something like:

CMD ["echo", "hello"]

Once you have built the image with docker build, you can test the image by issuing the docker run command

$ docker run -it image-name /bin/bash

-> this should override the CMD and give you access to the command line of the container. There you can look around in the operating system.

Then you can try a

$ docker run image-name

-> this should print „hello“ on the command line of the docker host, and then the container exits. The -d flag (docker run -d image-name) runs a container in the background; it keeps running only as long as its main process runs, so a container that just runs echo exits right away. You can enter the command line of a running container with docker exec -it <container-id> /bin/bash.

Note: for testing, it is better to do a docker run without the ID=$(…) assignment, since, if there is an error with the docker run, the assignment might hide the error message; I will change this in the blog post.
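The ENTRYPOINT/CMD split from the updated answer at the top can be tried out quickly. A minimal sketch, assuming bash, a working docker installation and that the busybox image can be pulled from Docker Hub; entrypoint_demo and the image tag entrypoint-demo are hypothetical names:

```bash
# Hypothetical demo: build a tiny image with an exec-form ENTRYPOINT plus CMD
# and run it with and without a command-line argument.
entrypoint_demo() {
  local dir
  dir="$(mktemp -d)"
  cat > "$dir/Dockerfile" <<'EOF'
FROM busybox
ENTRYPOINT ["echo", "hello"]
CMD ["world"]
EOF
  docker build --quiet --tag entrypoint-demo "$dir" >/dev/null || return 1
  docker run --rm entrypoint-demo          # ENTRYPOINT + CMD: prints "hello world"
  docker run --rm entrypoint-demo Anvikar  # the argument replaces CMD: prints "hello Anvikar"
  rm -rf "$dir"
}
```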

I hope this helps,

Oliver

I want to clarify something. After cloning the repository with the gcc code, I can use the code. Then I need to put an executable artifact of the source code in the CMD or ENTRYPOINT of the Dockerfile. So my question is: where is the executable artifact, or how do I get it? As far as I know, the ./configure,

make and make install commands in the Dockerfile do that. So what should I pass as an executable artifact to ENTRYPOINT or CMD?

@Anvikar: I fear I do not fully understand: what is an „executable artifact“? In any case, let me tell you the following: if you want to perform a set of commands any time the docker image is started, you should create and test a shell script that performs those commands (e.g. „startup.sh“) and make it part of the image in the build process (e.g. copied to /startup.sh and made executable). Then, define

CMD ["/startup.sh"]

in the Dockerfile. When you run the image with docker run ..., the shell script will be executed.

If your shell script consists of a single command, you can also define the command directly within CMD: e.g. rails server -p 80 translates into CMD ["rails", "server", "-p", "80"].

I am not sure whether this comment helps, since I have not fully understood your problem, I guess.
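A startup script as suggested above could look like the following sketch. It is a hypothetical example, assuming bash for the helper and /bin/sh in the image; instead of CMD ["/startup.sh"], it combines the script with the ENTRYPOINT/CMD split from the updated answer further up: the script runs one-off preparation steps and then replaces itself by the command passed as arguments (exec "$@"), so that this command becomes the container's main process and receives the signals sent by docker stop. write_startup_script is a hypothetical helper name.

```bash
# Hypothetical helper: write an executable startup.sh to the given path.
write_startup_script() {
  local target="${1:-startup.sh}"
  cat > "$target" <<'EOF'
#!/bin/sh
# Stop on the first error, so that a broken preparation step stops the container.
set -e
# One-off preparation steps go here, e.g. the database migration from the blog's Dockerfile:
# bundle exec rake db:migrate RAILS_ENV=development
echo "startup.sh: preparation done"
# Replace the shell by the command given as arguments (the CMD when used as ENTRYPOINT).
exec "$@"
EOF
  chmod +x "$target"
}
```

In the Dockerfile, the script would then be used with COPY startup.sh /startup.sh, ENTRYPOINT ["/startup.sh"] and CMD ["rails", "server", "-p", "80"]; a docker run with a command replaces only the CMD part, and the preparation steps still run.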

Okay. Thank you. I have another, unrelated question: how many containers can be run simultaneously on Docker? I just read that Docker has only one writable layer and the rest are read-only images. So my question is: can I run many containers together, in parallel?

Thanks in advance.

@Anvikar: yes, I have read of case studies with more than 1000 simultaneously active docker containers on a single Docker host. Docker containers are much more lightweight than virtual machines. You might want to have a look at my other blog post, where I discuss that kind of topic: https://vocon-it.com/2015/09/28/what-are-containers-why-to-start-with-docker/

Best regards, Oliver