Here you will find step-by-step instructions on how to install an ingress controller on a multi-node Kubernetes cluster, together with an example application served over both HTTP and HTTPS.

In our last blog post, we installed a Kubernetes Ingress controller on a minikube system. Minikube is restricted to single-node clusters, though. This time, we will show how to install the ingress controller on a multi-node Kubernetes cluster.

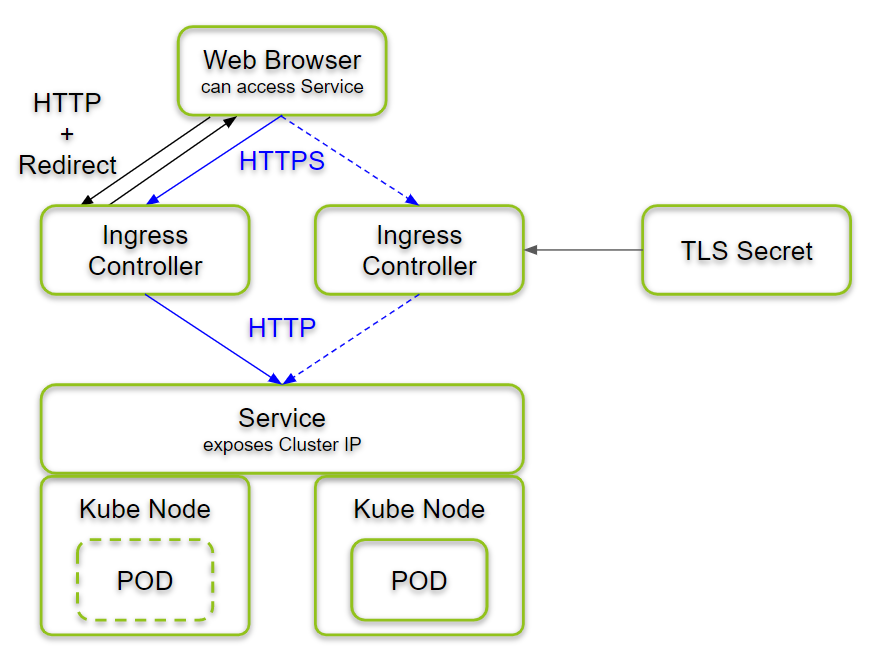

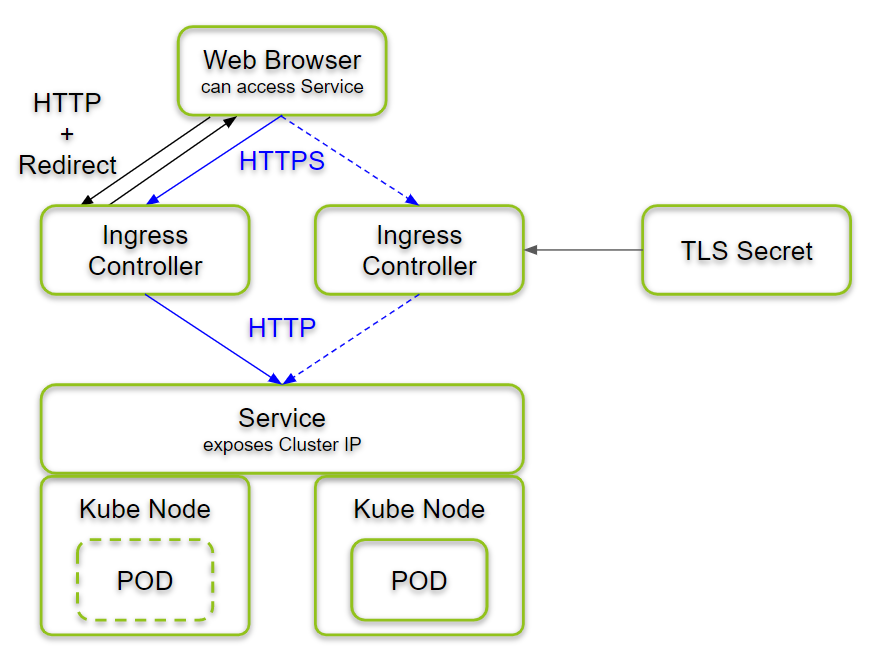

At the end of the session, the architecture looks as follows:

An ingress controller will run on each node of the Kubernetes cluster. See more details on the architecture in the Summary section at the end of this blog post.

Prerequisites

We have installed Kubernetes via kubeadm by following the blog post Kubernetes (3) – Installing a Kubernetes Cluster with kubeadm.

References

- We are using the Git repository /kubernetes-ingress with a few small tweaks: for example, the default certificate did not work, so we had to create our own.

- Other blog posts of this kubernetes series:

Step 1: Install GIT

If you have started from scratch on a fresh CentOS system, as I did, you might need to install Git first. On CentOS, we can install a fairly recent version of Git as follows:

    yum install -y http://opensource.wandisco.com/centos/7/git/x86_64/wandisco-git-release-7-2.noarch.rpm
    yum install -y git
    git --version

Step 2: Clone Kubernetes Ingress Installation Scripts

We make use of the installation scripts found on GitHub in /kubernetes-ingress.

    git clone https://github.com/nginxinc/kubernetes-ingress \
      && cd kubernetes-ingress

Step 3: Create Namespace and Service Account

We now create a separate namespace for the ingress controller:

    # kubectl apply -f deployments/common/ns-and-sa.yaml
    namespace/nginx-ingress created
    serviceaccount/nginx-ingress created

As seen below, the namespace and the service account are both called 'nginx-ingress':

    # cat deployments/common/ns-and-sa.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: nginx-ingress
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress
      namespace: nginx-ingress

Step 3: Create your own Default Certificate

Step 3.0: Why not use the pre-installed Certificate?

Currently, /kubernetes-ingress offers to use a pre-configured default certificate with the following command (it is not recommended, though):

    (BEWARE OF THE RISKS AND DRAWBACKS WHEN USING THIS)
    # kubectl apply -f deployments/common/default-server-secret.yaml
    secret/default-server-secret created

However, even if you are okay with using such a publicly known secret key for a test installation, be aware that it does not work as expected. The purpose of the default certificate is to present the user with a '404 Not Found' page when the user tries to access an unknown URL:

However, we have found that the pre-installed certificate has an invalid signature, so browsers refuse to display the 404 Not Found message. Instead, you get a message that the certificate is invalid, and the browser does not offer a way to display the page anyway.

Use the simple method above only if

- this is a test installation,

- you are okay with using a secret key that is known to the Internet community (!), and

- you accept that you get a NET::ERR_CERT_INVALID message instead of the expected '404 Not Found' message when you enter a subdomain that is not known to the ingress controller.

If so, you can use the simple command above and go on with Step 4. In all other situations, we recommend going on with Step 3.1.

Step 3.1: Create your own Certificate and Key

Instead of using the very insecure default certificate above (note: the private key is exposed to the Internet!), we install our own self-signed wildcard certificate, much as we did in our last blog post Kubernetes (6) – HTTPS on Minikube.

We just need to replace some minikube commands which are not available on a multi-node Kubernetes cluster.

For the current phase, it is sufficient to create a self-signed key and certificate as follows:

    cd ../example-com-tls/
    export DOMAIN=example.com
    openssl req -x509 -newkey rsa:4096 -sha256 -nodes -keyout tls_self.key -out tls_self.crt -subj "/CN=*.${DOMAIN}" -days 365

Here, we have chosen to create a self-signed certificate with the wildcard subject "/CN=*.${DOMAIN}". This lets us re-use the certificate later for more than one application.

You might need to replace DOMAIN to match your needs. However, for testing purposes, example.com will work, even though we will need to create /etc/hosts entries on the web client machine to map this domain to the IP addresses of your Kubernetes cluster nodes.

The output of the above commands will look as follows:

Generating a 4096 bit RSA private key ........................................................................................................................................................................................................++ ..................................++ writing new private key to 'tls_self.key' -----
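Before wrapping the two files in a secret, it can be worth confirming that the certificate and the key really belong together. The following is only a minimal sketch, assuming a reasonably recent OpenSSL; the function name `cert_matches_key` is hypothetical and not part of the original setup. It compares the public key embedded in the certificate with the public key derived from the private key.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post):
# succeeds if certificate $1 and private key $2 share the same public key.
cert_matches_key() {
  # Public key as stored inside the certificate
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 2
  # Public key derived from the private key
  key_pub=$(openssl pkey -in "$2" -pubout) || return 2
  [ "$cert_pub" = "$key_pub" ]
}

# Usage with the files created above:
#   cert_matches_key tls_self.crt tls_self.key && echo "certificate and key match"
#   openssl x509 -in tls_self.crt -noout -subject -dates
```

The second usage line prints the subject and the validity period, which is a quick way to see that the wildcard CN ended up in the certificate.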

Step 3.2: Create Kubernetes Secret

In case you created the secret with the insecure method of Step 3.0, let us delete it first:

    # kubectl delete secret default-server-secret -n nginx-ingress
    secret "default-server-secret" deleted

As a replacement, we create a generic secret with the same name as follows:

    # kubectl create secret generic --type=Opaque default-server-secret --from-file=tls.crt=tls_self.crt --from-file=tls.key=tls_self.key -n nginx-ingress
    secret/default-server-secret created

With that, we have created a secret with a valid certificate and key.

Step 4: Create a Config Map

Some parameters of the ingress controller are best configured via a config map. A recommended config map is shown below:

    # cd <path to kubernetes-ingress GIT repo> # e.g. cd ../kubernetes-ingress/
    # cat deployments/common/nginx-config.yaml
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-config
      namespace: nginx-ingress
    data:
      proxy-connect-timeout: "60s"
      proxy-read-timeout: "60s"
      client-max-body-size: "1m"

Here, we have chosen the default values found in a table in this document, so we could also leave out the last three lines and use an empty data body.

When I first went through these steps, I forgot to apply the config map. Let us apply it now:

    # kubectl apply -f deployments/common/nginx-config.yaml

Step 5: Configure RBAC

We installed Kubernetes via kubeadm, and role-based access control (RBAC) is enabled by default. Therefore, we need to add a ClusterRole and a ClusterRoleBinding for our nginx-ingress service account. The configuration we will apply can be reviewed with a cat command:

    # cat deployments/rbac/rbac.yaml
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: nginx-ingress
    rules:
    - apiGroups:
      - ""
      resources:
      - services
      - endpoints
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - secrets
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - get
      - list
      - watch
      - update
      - create
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - list
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs:
      - list
      - watch
      - get
    - apiGroups:
      - "extensions"
      resources:
      - ingresses/status
      verbs:
      - update
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: nginx-ingress
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress
      namespace: nginx-ingress
    roleRef:
      kind: ClusterRole
      name: nginx-ingress
      apiGroup: rbac.authorization.k8s.io

Now let us create the ClusterRole and the ClusterRoleBinding:

    # kubectl apply -f deployments/rbac/rbac.yaml
    clusterrole.rbac.authorization.k8s.io/nginx-ingress created
    clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress created
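To check that the binding has taken effect, `kubectl auth can-i` can impersonate the service account. The sketch below is an optional addition and was not part of the original session; `sa_user` is a hypothetical helper that builds the user name Kubernetes uses for service accounts (`system:serviceaccount:<namespace>:<name>`). Running the check assumes that your own user is allowed to impersonate other users, which is normally the case for the cluster admin created by kubeadm.

```shell
#!/bin/sh
# Hypothetical helper: print the user name of a service account.
# $1 = namespace, $2 = service account name
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Usage against a live cluster (each command should answer "yes"):
#   kubectl auth can-i list secrets --as="$(sa_user nginx-ingress nginx-ingress)"
#   kubectl auth can-i create events --as="$(sa_user nginx-ingress nginx-ingress)"
```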

Step 6: Deploy the Ingress Controller

To run the ingress controller on each of the cluster machines, we deploy it as a DaemonSet. We first review the configuration again:

    # cat deployments/daemon-set/nginx-ingress.yaml
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: nginx-ingress
      namespace: nginx-ingress
    spec:
      selector:
        matchLabels:
          app: nginx-ingress
      template:
        metadata:
          labels:
            app: nginx-ingress
        spec:
          serviceAccountName: nginx-ingress
          containers:
          - image: nginx/nginx-ingress:edge
            imagePullPolicy: Always
            name: nginx-ingress
            ports:
            - name: http
              containerPort: 80
              hostPort: 80
            - name: https
              containerPort: 443
              hostPort: 443
            env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            args:
              - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
              - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
             #- -v=3 # Enables extensive logging. Useful for troubleshooting.
             #- -report-ingress-status
             #- -external-service=nginx-ingress
             #- -enable-leader-election

We apply the above configuration:

    # kubectl apply -f deployments/daemon-set/nginx-ingress.yaml
    daemonset.extensions/nginx-ingress created

This should create a POD for each node:

    # kubectl get nodes
    NAME                STATUS   ROLES    AGE   VERSION
    centos-2gb-nbg1-1   Ready    master   40d   v1.12.2
    node1               Ready    <none>   31d   v1.12.3
    # kubectl get pods -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-dppnh   0/1     CrashLoopBackOff   4          2m14s
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   4          2m14s

But why do we see a CrashLoopBackOff status? This was because I had forgotten to apply the config map in Step 4. The troubleshooting session is recorded in the appendix below. In your case, the output should look similar to mine after resolving the problem:

    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS    RESTARTS   AGE
    nginx-ingress-jfc78   1/1     Running   7          20m
    nginx-ingress-pcr5w   1/1     Running   255        21h
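On clusters with more than two nodes, scanning the STATUS column by eye gets tedious. As a small sketch, the hypothetical helper `pods_not_running` below filters the output of `kubectl get pods --no-headers` and prints only the PODs that are not in the Running state. It assumes the default column layout shown above (name, ready, status, restarts, age).

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and prints the names of all PODs whose STATUS (third column) is not "Running".
pods_not_running() {
  awk '$3 != "Running" { print $1 }'
}

# Usage against a live cluster (prints nothing if all PODs are running):
#   kubectl get pods -n nginx-ingress --no-headers | pods_not_running
```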

Step 7: Create an HTTP Application

As a test of the ingress controller, let us create and expose an HTTP hello world application to be reached via the ingress controller:

    kubectl create deployment nginx-demo --image=nginxdemos/hello
    # output: 'deployment.apps/nginx-demo created'
    kubectl expose deployment nginx-demo --port=80
    # output: 'service/nginx-demo exposed'

With the next command, we create an entry in the ingress controller:

    cat <<EOF | kubectl apply -f -
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-demo
    spec:
      rules:
      - host: example.com
        http:
          paths:
          - backend:
              serviceName: nginx-demo
              servicePort: 80
    EOF

This should produce an output as follows:

    ingress.extensions/nginx-demo created

After having added an entry in the /etc/hosts file for the example.com domain, we can access the service locally:

    # cat /etc/hosts | grep "127.0.0.1 example.com" || echo "127.0.0.1 example.com" >> /etc/hosts
    # curl example.com
    <!DOCTYPE html>
    <html>
    <head>
    <title>Hello World</title>
    ...
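The grep-or-append one-liner above can be wrapped into a small function so that it can be re-used for other host names or for a cluster node IP address. This is only a sketch; `add_hosts_entry` is a hypothetical name, and the optional third argument (the hosts file) exists mainly so that the function can be tried on a scratch file first.

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOST" to a hosts file unless exactly that line exists.
# $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts)
add_hosts_entry() {
  entry="$1 $2"
  file="${3:-/etc/hosts}"
  # -x matches whole lines only, -F treats the entry as a fixed string
  grep -qxF "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}

# Usage (as root, on the machine that runs curl or the browser):
#   add_hosts_entry 127.0.0.1 example.com
```

Calling the function twice with the same arguments leaves a single entry in the file.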

The service can also be reached from a browser on the Internet if we add a corresponding entry to the 'hosts' file of the client machine (e.g. on Windows: copy C:\Windows\System32\drivers\etc\hosts to the desktop, add an entry such as "127.0.0.1 example.com" as the last line, using the IP address of one of the cluster nodes instead of 127.0.0.1 if the browser runs on a different machine, and copy the file back to the original path. Confirm the action as Administrator if you are asked to do so).

Perfect.

Interestingly, but not entirely unexpectedly, the HTTPS counterpart shows a 404 Not Found message:

Step 8: Create an HTTPS Application

In Step 3, we already wrapped a certificate in a secret. Therefore, it should be easy to use it for converting our HTTP application to an HTTPS application. However, the existing secret can be used only in the namespace it has been created for, i.e. the nginx-ingress namespace.

Why not copy the existing default Secret?

We could transfer the existing generic secret of type 'Opaque' from the nginx-ingress namespace to the current namespace (see this StackOverflow answer):

    kubectl get secret default-server-secret --namespace=nginx-ingress --export -o yaml \
      | sed 's/default-server-secret/example-com-tls2/g' \
      | kubectl apply --namespace=default -f -
    # output: secret/example-com-tls2 created

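Here, we have inserted a sed command to change the name. (Note that the --export flag has been removed from newer kubectl releases, so this command only works with older versions such as the one used here.)

However, we saw later that this kind of certificate does not seem to work: we received an ERR_SSL_VERSION_OR_CIPHER_MISMATCH error:

So it is better to create a new secret from scratch, as shown in Step 8.1.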

Step 8.1: Create new Secret

Alternatively, we can create a new TLS secret by entering the directory containing the self-signed certificate and key:

    cd ../example-com-tls/
    kubectl create secret tls example-com-tls --cert=tls_self.crt --key=tls_self.key
    # output: secret/example-com-tls created

We now update the configuration of the ingress controller as follows:

    cat <<EOF | kubectl apply -f -
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-demo
    spec:
      tls:
      - secretName: example-com-tls
        hosts:
        - example.com
      rules:
      - host: example.com
        http:
          paths:
          - backend:
              serviceName: nginx-demo
              servicePort: 80
    EOF
    # output: ingress.extensions/nginx-demo configured

Now we can see that http://example.com is redirected to the location https://example.com:443/:

    # cat /etc/hosts | grep "127.0.0.1 example.com" || echo "127.0.0.1 example.com" >> /etc/hosts
    # curl -D - http://example.com
    HTTP/1.1 301 Moved Permanently
    Server: nginx/1.15.7
    Date: Wed, 02 Jan 2019 08:00:17 GMT
    Content-Type: text/html
    Content-Length: 169
    Connection: keep-alive
    Location: https://example.com:443/

    <html>
    <head><title>301 Moved Permanently</title></head>
    <body>
    <center><h1>301 Moved Permanently</h1></center>
    <hr><center>nginx/1.15.7</center>
    </body>
    </html>

In the first line above, we have made sure that the system knows how to resolve example.com to an IP address.
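If the redirect is to be checked from a script rather than by eye, the Location header can be picked out of the response headers. The following is a minimal sketch; `redirect_target` is a hypothetical helper that reads HTTP response headers on standard input, so it works on the output of `curl -D -` as shown above.

```shell
#!/bin/sh
# Hypothetical helper: reads HTTP response headers on stdin and prints the value
# of the first Location header. Header names are matched case-insensitively and
# the carriage returns that HTTP uses at line ends are stripped.
redirect_target() {
  tr -d '\r' | awk 'tolower($1) == "location:" { print $2; exit }'
}

# Usage against the ingress controller:
#   curl -s -D - -o /dev/null http://example.com | redirect_target
```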

Also, a browser on the Internet will be redirected from HTTP to HTTPS:

Because we have used a self-signed certificate, the browser tells us that this is not a secure connection, since the certificate is not trusted. To display the hello world application over HTTPS anyway, we click on the "extended" options (sorry, screenshots are in German):

Then we click on "Goto example.com (insecure)":

And we reach the HTTPS-secured version of our hello world application:

Perfect.

Find the summary after the Appendix…

Appendix: Troubleshoot a failing POD

Finding the cause of the problem

    # kubectl get pod nginx-ingress-dppnh -n nginx-ingress -o yaml
    ...
      containerStatuses:
      - containerID: docker://0f522f0726f1df0fdcc6b88527ccac3bf89f1f128f91f3d9e615204465aad086
        image: nginx/nginx-ingress:edge
        imageID: docker-pullable://nginx/nginx-ingress@sha256:d3e8f01012c4c03e27a70dd70bb70e63b71b3e43f1140e40fd992ffbeeb73172
        lastState:
          terminated:
            containerID: docker://0f522f0726f1df0fdcc6b88527ccac3bf89f1f128f91f3d9e615204465aad086
            exitCode: 255
            finishedAt: 2019-01-01T13:23:23Z
            reason: Error
            startedAt: 2019-01-01T13:23:23Z
        name: nginx-ingress
        ready: false
        restartCount: 245
        state:
          waiting:
            message: Back-off 5m0s restarting failed container=nginx-ingress pod=nginx-ingress-dppnh_nginx-ingress(0a04826e-0d1c-11e9-a13e-9600001441cb)
            reason: CrashLoopBackOff
    ...

Unfortunately, we do not see any termination message.

This time, I found the problem only by looking at the logs of the terminated docker containers:

    # docker ps -a | head -n 2
    CONTAINER ID   IMAGE                 COMMAND                  CREATED         STATUS                       PORTS   NAMES
    799fab7938bb   nginx/nginx-ingress   "/nginx-ingress -ngi…"   5 minutes ago   Exited (255) 4 minutes ago           k8s_nginx-ingress_nginx-ingress-pcr5w_nginx-ingress_0a0366f9-0d1c-11e9-a13e-9600001441cb_246

Now we can see the log of the most recent failed container as follows:

    # CONTAINER_ID=$(docker ps -a | head -n 2 | grep -v '^CONTAINER' | awk '{print $1}') && docker logs $CONTAINER_ID
    I0101 13:33:09.512743 1 main.go:118] Starting NGINX Ingress controller Version=edge GitCommit=35f18749
    F0101 13:33:09.536820 1 main.go:201] Error when getting nginx-ingress/nginx-config: configmaps "nginx-config" not found

Okay, we have now found the reason: I had forgotten to apply the config map in Step 4. But I ask myself: why was there no way to retrieve the log with kubectl? Let us try to improve the logs before we fix the root of the problem.
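One option that was not tried in this session is `kubectl logs` with the `--previous` flag, which asks for the log of the last terminated instance of a container instead of the currently running one. Whether it would have returned the lines above on this particular cluster is an open question. As a sketch, the hypothetical helper `fatal_lines` below keeps only the fatal lines of such a log, assuming the log format seen above, where fatal messages start with the letter F followed by the date.

```shell
#!/bin/sh
# Hypothetical helper: reads a controller log on stdin and prints only the
# fatal lines, i.e. lines that start with "F" followed by a four-digit date.
fatal_lines() {
  grep -E '^F[0-9]{4} '
}

# Usage against a live cluster (POD name taken from the output above):
#   kubectl logs nginx-ingress-dppnh -n nginx-ingress --previous | fatal_lines
```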

Improving the Kubernetes Log

In Determine the Reason for Pod Failure – Kubernetes, we find a hint that the default terminationMessagePolicy is 'File', but we can try setting it to 'FallbackToLogsOnError' for the PODs:

    # cat deployments/daemon-set/nginx-ingress.yaml
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: nginx-ingress
      namespace: nginx-ingress
    spec:
      selector:
        matchLabels:
          app: nginx-ingress
      template:
        metadata:
          labels:
            app: nginx-ingress
        spec:
          serviceAccountName: nginx-ingress
          containers:
          - image: nginx/nginx-ingress:edge
            imagePullPolicy: Always
            name: nginx-ingress
            ports:
            - name: http
              containerPort: 80
              hostPort: 80
            - name: https
              containerPort: 443
              hostPort: 443
            terminationMessagePolicy: FallbackToLogsOnError
            env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            args:
              - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
              - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
             #- -v=3 # Enables extensive logging. Useful for troubleshooting.
             #- -report-ingress-status
             #- -external-service=nginx-ingress
             #- -enable-leader-election

We apply the new configuration:

    # kubectl apply -f deployments/daemon-set/nginx-ingress.yaml
    daemonset.extensions/nginx-ingress configured

This will not prevent the POD from crashing, but it should help us to retrieve the log via kubectl. However, a 'kubectl get pod' command revealed that the PODs had not changed at all:

    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-dppnh   0/1     CrashLoopBackOff   252        21h
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   252        21h

Also, the detailed output did not reveal any termination message:

    kubectl get pod -n nginx-ingress -o yaml | less
    ...(no changed output)...

Let us try to kill the old POD:

    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-dppnh   0/1     CrashLoopBackOff   252        21h
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   252        21h
    [root@centos-2gb-nbg1-1 kubernetes-ingress]# kubectl delete pod -n nginx-ingress nginx-ingress-dppnh
    pod "nginx-ingress-dppnh" deleted
    [root@centos-2gb-nbg1-1 kubernetes-ingress]# kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-jfc78   1/1     Running            0          4s
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   252        21h
    #(waiting some minutes)
    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-jfc78   0/1     CrashLoopBackOff   2          39s
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   252        21h

The deleted POD has been replaced by a new one. Now, the termination message can be retrieved:

    # kubectl get pod nginx-ingress-jfc78 -n nginx-ingress -o yaml
    ...
      - containerID: docker://5d4bc8ac271a44a37f44a91ef814bc066e6141b9a1a95774088611e0d267e6c9
        image: nginx/nginx-ingress:edge
        imageID: docker-pullable://nginx/nginx-ingress@sha256:d3e8f01012c4c03e27a70dd70bb70e63b71b3e43f1140e40fd992ffbeeb73172
        lastState:
          terminated:
            containerID: docker://5d4bc8ac271a44a37f44a91ef814bc066e6141b9a1a95774088611e0d267e6c9
            exitCode: 255
            finishedAt: 2019-01-01T14:05:22Z
            message: |
              I0101 14:05:22.104953 1 main.go:118] Starting NGINX Ingress controller Version=edge GitCommit=35f18749
              F0101 14:05:22.138206 1 main.go:201] Error when getting nginx-ingress/nginx-config: configmaps "nginx-config" not found
            reason: Error
            startedAt: 2019-01-01T14:05:22Z
    ...

Resolving the Problem

Now it is time to resolve the problem: let us create the missing config map:

    # kubectl apply -f deployments/common/nginx-config.yaml
    configmap/nginx-config created
    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-jfc78   0/1     CrashLoopBackOff   6          7m36s
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   254        21h
    ...(waiting some minutes)...
    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS             RESTARTS   AGE
    nginx-ingress-jfc78   1/1     Running            7          11m
    nginx-ingress-pcr5w   0/1     CrashLoopBackOff   254        21h

We can see that the first of the two ingress controllers is running fine now. The other one is still waiting for its next restart. After waiting some more minutes, we can see that the PODs recover without the need to delete them manually:

    # kubectl get pod -n nginx-ingress
    NAME                  READY   STATUS    RESTARTS   AGE
    nginx-ingress-jfc78   1/1     Running   7          13m
    nginx-ingress-pcr5w   1/1     Running   255        21h

Perfect.
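Instead of re-running `kubectl get pod` by hand while waiting for the back-off timer, the waiting can be scripted. The following is a generic sketch and not something that was used in this session; `wait_until` is a hypothetical helper that repeats an arbitrary command until it succeeds or a timeout has passed.

```shell
#!/bin/sh
# Hypothetical helper: re-run a command until it succeeds or a timeout is reached.
# $1 = timeout in seconds, $2 = pause between attempts in seconds, rest = command
wait_until() {
  timeout=$1
  interval=$2
  shift 2
  elapsed=0
  until "$@"; do
    # Give up once the time spent sleeping has reached the timeout
    [ "$elapsed" -ge "$timeout" ] && return 1
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 0
}

# Usage: wait up to 10 minutes for the hello world application to answer,
# checking every 10 seconds (-f makes curl fail on HTTP error codes):
#   wait_until 600 10 curl -sf -o /dev/null http://example.com
```

With a back-off of up to five minutes between restarts, as seen in the POD status above, a timeout of several minutes seems a reasonable starting point.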

Summary

In this blog post, we have installed an ingress controller on a multi-node Kubernetes cluster. Once we had installed the controller and a self-signed certificate, we tested the installation with a hello world application on HTTP and HTTPS.

In the end, we have reached an architecture as shown below:

An ingress controller is running on each node of the Kubernetes cluster, since we have installed it as a daemon set. The web browser chooses one of the ingress controllers by means of DNS (in our case: the /etc/hosts file).

The ingress controller redirects HTTP requests to HTTPS. The controller translates HTTPS connections back to HTTP before it forwards the connection to the Kubernetes service. The service, in turn, forwards the connection to a running POD. If more than one POD is available, it distributes the connections among all available PODs in a round-robin fashion.

From the user's point of view, this is just an HTTPS-secured connection to the application. The fact that the application runs on a cluster is transparent to the user.
