In the course of this Hello World style tutorial, we will explore DC/OS, a Data Center Operating System developed and open-sourced by Mesosphere with the goal of hiding the complexity of data centers. We will

- install DC/OS on your local PC or Notebook using Vagrant and VirtualBox,

- deploy a „hello world“ application with more than one instance,

- load balance between the application instances

- and make sure the service is reachable from the outside world.

See also part 2: A Step towards productive Docker: installing and testing DC/OS on AWS (it starts from scratch and does not require you to have read or tested the current post).

DC/OS is a Data Center Operating System built upon Apache Mesos and Mesosphere Marathon, an open source container orchestration platform. Its goal is to hide the complexity of data centers when deploying applications: DC/OS performs the job of deploying your application on your data center hardware and automatically chooses the hardware servers to run your application on. It helps you scale your application according to your needs by adding or removing application instances at the push of a button. DC/OS makes sure that your clients' requests are load balanced and routed to your application instances. There is no need to manually re-configure the load balancer(s) if you add or destroy an instance of your application: DC/OS will take care of this for you.

Note: If you want to get started with Marathon and Mesos first, you might be interested in this blog post, especially if the resource requirements of the current post exceed what you have at hand: for the DC/OS tutorial you will need 10 GB of RAM, while in the Marathon/Mesos tutorial, 4 GB are sufficient.

Table of Contents

- Target

- Tools and Versions used

- Prerequisites

- Step 1: Install Vagrant and VirtualBox

- Step 2: Download Vagrant Box

- Step 3: Clone DCOS-Vagrant Repo

- Step 4: Install Vagrant Hostmanager Plugin

- Step 5: Boot DC/OS

- Step 6: Log into the DC/OS GUI

- Step 7: Install the DCOS CLI

- Step 8: Configure DC/OS Master URL

- Step 9: Receive Token from the DC/OS Master

- Step 10 (optional): Explore DC/OS and Marathon

- Step 11: Deploy a Hello World Service per GUI

- Step 12: Connect to the NginX Service

- Step 13 (optional): Explore the Default Mesos Networking

- Step 14: Deploy a Hello World Service per JSON with improved Networking and Load-Balancing

- Step 15: Reaching the Server from the outside World

- Appendix A: Virtualbox Installation Problem Resolution

- Appendix B: dcos node log --leader results in „No files exist. Exiting.“ Message

- Appendix C: Finding the DC/OS Version

- Appendix D: Error Message, when changing Service Name

- Next Steps

Target

What I want to do in this session:

- Install DC/OS on the local machine using Vagrant+VirtualBox

- Explore the networking and load balancing capabilities of DC/OS

Tools and Versions used

- Vagrant 1.8.6

- Virtualbox 5.0.20 r106931

- for Windows: GNU bash, version 4.3.42(5)-release (x86_64-pc-msys)

- DCOS 1.8.8

Prerequisites

- 10 GB free DRAM

- tested with 4 virtual CPUs (Quad Core CPU)

- Git is installed

Step 1: Install Vagrant and VirtualBox

Step 1.1: Install VirtualBox

Download and install VirtualBox. I am running version 5.0.20 r106931.

If the installation fails with the error message „Setup Wizard ended prematurely“, see Appendix A: Virtualbox Installation Problem Resolution below.

Step 1.2: Install Vagrant

Download and install Vagrant (requires a reboot).
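Before moving on, it can save time to confirm that the tools from the prerequisites and from Step 1 are actually reachable from the shell. The following is a minimal sketch and not part of the dcos-vagrant instructions: missing_tools is a hypothetical helper name, and it assumes that the tools are expected on the PATH (on Windows, VBoxManage is often not on the PATH by default).

```shell
# Sketch: print every given command-line tool that cannot be found on PATH.
# Returns 0 if all tools are present, 1 if at least one is missing.
# (missing_tools is a hypothetical helper name used for illustration.)
missing_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling missing_tools git vagrant VBoxManage prints the names of the tools that still need to be installed or added to the PATH, and prints nothing if everything is in place.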

Step 2: Download Vagrant Box

We are following the Readme on https://github.com/dcos/dcos-vagrant:

Since this might be a long-running task (especially if you are sitting in a hotel with a slow Internet connection, as I am at the moment), we best start by downloading the DC/OS Vagrant box first:

(base system)$ vagrant box add https://downloads.dcos.io/dcos-vagrant/metadata.json

==> box: Loading metadata for box 'https://downloads.dcos.io/dcos-vagrant/metadata.json'

==> box: Adding box 'mesosphere/dcos-centos-virtualbox' (v0.8.0) for provider: virtualbox

box: Downloading: https://downloads.dcos.io/dcos-vagrant/dcos-centos-virtualbox-0.8.0.box

box: Progress: 100% (Rate: 132k/s, Estimated time remaining: --:--:--)

box: Calculating and comparing box checksum...

==> box: Successfully added box 'mesosphere/dcos-centos-virtualbox' (v0.8.0) for 'virtualbox'!
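To confirm afterwards that the box has really been added, the output of vagrant box list can be filtered for the box name. The helper below is a minimal sketch: box_version is a hypothetical name, and it assumes the usual "name (provider, version)" layout of that listing, which may differ between Vagrant versions.

```shell
# Sketch: read `vagrant box list` output on stdin and print the version
# of the box whose name is given as $1 (prints nothing if it is absent).
# Assumes lines of the form: name (provider, version)
box_version() {
  awk -v name="$1" '$1 == name { gsub(/[()]/, "", $3); print $3 }'
}
```

It is meant to be used as vagrant box list | box_version mesosphere/dcos-centos-virtualbox, which should print the version of the box downloaded above if the listing has the assumed layout.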

Step 3: Clone DCOS-Vagrant Repo

In another window, we clone the dcos-vagrant git repo:

(base system)$ git clone https://github.com/dcos/dcos-vagrant

Cloning into 'dcos-vagrant'...

remote: Counting objects: 2171, done.

remote: Compressing objects: 100% (4/4), done.

remote: Total 2171 (delta 0), reused 0 (delta 0), pack-reused 2167

Receiving objects: 100% (2171/2171), 14.98 MiB | 123.00 KiB/s, done.

Resolving deltas: 100% (1297/1297), done.

Checking connectivity... done.

(base system)$ cd dcos-vagrant

VagrantConfig.yaml shows:

m1:

  ip: 192.168.65.90

  cpus: 2

  memory: 1024

  type: master

a1:

  ip: 192.168.65.111

  cpus: 4

  memory: 6144

  memory-reserved: 512

  type: agent-private

p1:

  ip: 192.168.65.60

  cpus: 2

  memory: 1536

  memory-reserved: 512

  type: agent-public

  aliases:

  - spring.acme.org

  - oinker.acme.org

boot:

  ip: 192.168.65.50

  cpus: 2

  memory: 1024

  type: boot

m1 is the DC/OS master. Private containers will run on a1, while the load balancer containers are public and will run on p1.
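The memory values in this file explain the 10 GB RAM prerequisite: the four VMs together ask for 1024 + 6144 + 1536 + 1024 = 9728 MB, which is 9.5 GB. As a minimal sketch, the sum can be read from the file with awk. total_memory_mb is a hypothetical helper name, and the sketch assumes each machine has exactly one "memory:" line with a value in MB, as in the listing above.

```shell
# Sketch: sum all "memory:" values (in MB) of a VagrantConfig.yaml-style file.
# Lines such as "memory-reserved:" are not counted, because their first
# field differs from "memory:".
total_memory_mb() {
  awk '$1 == "memory:" { sum += $2 } END { print sum + 0 }' "$1"
}
```

Run inside the cloned repository as total_memory_mb VagrantConfig.yaml. If you lower a memory value to fit a smaller machine, re-running the helper shows the new total.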

Step 4: Install Vagrant Hostmanager Plugin

Installing the Vagrant Hostmanager plugin is required. I had tried without it, because I did not think that it would work on Windows. However, vagrant up will not succeed if the plugin is not installed: the presence of the plugin is checked before the Vagrant boxes are booted.

(base system)$ vagrant plugin install vagrant-hostmanager

Installing the 'vagrant-hostmanager' plugin. This can take a few minutes...

Installed the plugin 'vagrant-hostmanager (1.8.5)'!
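Since vagrant up refuses to start without the plugin, a quick check of vagrant plugin list before booting can avoid a failed run. This is a minimal sketch: has_vagrant_plugin is a hypothetical helper name, and it assumes that each line of the listing starts with the plugin name followed by a space and the version in parentheses.

```shell
# Sketch: succeed if the plugin named in $1 appears in the
# `vagrant plugin list` output that is read from stdin.
has_vagrant_plugin() {
  grep -q "^$1 "
}
```

It can be used as vagrant plugin list | has_vagrant_plugin vagrant-hostmanager && echo "plugin found".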

Note: Some version updates later (VirtualBox 5.1.28 r117968 (Qt5.6.2)), I found out that the VirtualBox Guest Additions are also needed in order to avoid the error message

sbin/mount.vboxsf: mounting failed with the error: No such device.

For that, I needed to re-apply the command

vagrant plugin install vagrant-vbguest

However, it still did not work. I could vagrant ssh to the box, and I found in /var/log/vboxadd-install.log that the kernel headers were not found during installation of the VirtualBox Guest Additions. yum install kernel-headers returned that kernel-headers-3.10.0-693.5.2.el7.x86_64 was already installed. However, ls /usr/src/kernels/ showed that there is a directory named 3.10.0-327.36.1.el7.x86_64 instead of 3.10.0-327.el7.x86_64. I then ran sudo ln -s 3.10.0-327.36.1.el7.x86_64 3.10.0-327.el7.x86_64 within the directory /usr/src/kernels/, and I could do a vagrant up with no problems. I guess un-installing and re-installing the headers would work as well.

In the end, all this did not work, but I found that the build link was wrong (hint was found here):

I fixed the link with

cd /lib/modules/3.10.0-327.el7.x86_64; sudo mv build build.broken; sudo ln -s /usr/src/kernels/3.10.0-327.36.1.el7.x86_64 build

then

cd /opt/VBoxGuestAdditions-*/init; sudo ./vboxadd setup

But it still did not work! I give up and will try installing DC/OS on AWS. Stay tuned.
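The kernel directory mismatch described above can be detected inside the VM with a short check. This is only a diagnostic sketch, not a fix: kernel_sources_match is a hypothetical helper name, and the default directory /usr/src/kernels is taken from the listing mentioned in the note.

```shell
# Sketch: succeed if a kernel source directory exists for a kernel release.
# $1: kernel release (defaults to the running kernel, `uname -r`)
# $2: directory holding the kernel sources (defaults to /usr/src/kernels)
kernel_sources_match() {
  release="${1:-$(uname -r)}"
  src_dir="${2:-/usr/src/kernels}"
  [ -d "$src_dir/$release" ]
}
```

Run after vagrant ssh as kernel_sources_match || echo "no kernel sources for $(uname -r)". A symbolic link such as the one created above also satisfies the check, because the directory test follows links.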

Step 5: Boot DC/OS

Below, I have set DCOS_VERSION in order to get the exact same results the next time I perform the test. If you do not set the environment variable, the latest stable version will be used when you boot up the VirtualBox VMs:

(base system)$ export DCOS_VERSION=1.8.8

(base system)$ vagrant up

Vagrant Patch Loaded: GuestLinux network_interfaces (1.8.6)

Validating Plugins...

Validating User Config...

Downloading DC/OS 1.8.8 Installer...

Source: https://downloads.dcos.io/dcos/stable/commit/602edc1b4da9364297d166d4857fc8ed7b0b65ca/dcos_generate_config.sh

Destination: installers/dcos/dcos_generate_config-1.8.8.sh

Progress: 16% (Rate: 1242k/s, Estimated time remaining: 0:09:16)

The speed of the hotel Internet seems to be better now, this late at night…

(base system)$ vagrant up

Vagrant Patch Loaded: GuestLinux network_interfaces (1.8.6)

Validating Plugins...

Validating User Config...

Downloading DC/OS 1.8.8 Installer...

Source: https://downloads.dcos.io/dcos/stable/commit/602edc1b4da9364297d166d4857fc8ed7b0b65ca/dcos_generate_config.sh

Destination: installers/dcos/dcos_generate_config-1.8.8.sh

Progress: 100% (Rate: 1612k/s, Estimated time remaining: --:--:--)

Validating Installer Checksum...

Using DC/OS Installer: installers/dcos/dcos_generate_config-1.8.8.sh

Using DC/OS Config: etc/config-1.8.yaml

Validating Machine Config...

Configuring VirtualBox Host-Only Network...

Bringing machine 'm1' up with 'virtualbox' provider...

Bringing machine 'a1' up with 'virtualbox' provider...

Bringing machine 'p1' up with 'virtualbox' provider...

Bringing machine 'boot' up with 'virtualbox' provider...

==> m1: Importing base box 'mesosphere/dcos-centos-virtualbox'...

==> m1: Matching MAC address for NAT networking...

==> m1: Checking if box 'mesosphere/dcos-centos-virtualbox' is up to date...

==> m1: Setting the name of the VM: m1.dcos

==> m1: Fixed port collision for 22 => 2222. Now on port 2201.

==> m1: Clearing any previously set network interfaces...

==> m1: Preparing network interfaces based on configuration...

m1: Adapter 1: nat

m1: Adapter 2: hostonly

==> m1: Forwarding ports...

m1: 22 (guest) => 2201 (host) (adapter 1)

==> m1: Running 'pre-boot' VM customizations...

==> m1: Booting VM...

==> m1: Waiting for machine to boot. This may take a few minutes...

m1: SSH address: 127.0.0.1:2201

m1: SSH username: vagrant

m1: SSH auth method: private key

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

m1: Warning: Remote connection disconnect. Retrying...

==> m1: Machine booted and ready!

==> m1: Checking for guest additions in VM...

==> m1: Setting hostname...

==> m1: Configuring and enabling network interfaces...

==> m1: Mounting shared folders...

m1: /vagrant => D:/veits/Vagrant/ubuntu-trusty64-docker_2017-02/dcos-vagrant

==> m1: Updating /etc/hosts file on active guest machines...

==> m1: Updating /etc/hosts file on host machine (password may be required)...

==> m1: Running provisioner: shell...

m1: Running: inline script

==> m1: Running provisioner: dcos_ssh...

host: Generating new keys...

==> m1: Inserting generated public key within guest...

==> m1: Configuring vagrant to connect using generated private key...

==> m1: Removing insecure key from the guest, if it's present...

==> m1: Running provisioner: shell...

m1: Running: script: Certificate Authorities

==> m1: >>> Installing Certificate Authorities

==> m1: Running provisioner: shell...

m1: Running: script: Install Probe

==> m1: Probe already installed: /usr/local/sbin/probe

==> m1: Running provisioner: shell...

m1: Running: script: Install jq

==> m1: jq already installed: /usr/local/sbin/jq

==> m1: Running provisioner: shell...

m1: Running: script: Install DC/OS Postflight

==> m1: >>> Installing DC/OS Postflight: /usr/local/sbin/dcos-postflight

==> a1: Importing base box 'mesosphere/dcos-centos-virtualbox'...

==> a1: Matching MAC address for NAT networking...

==> a1: Checking if box 'mesosphere/dcos-centos-virtualbox' is up to date...

==> a1: Setting the name of the VM: a1.dcos

==> a1: Fixed port collision for 22 => 2222. Now on port 2202.

==> a1: Clearing any previously set network interfaces...

==> a1: Preparing network interfaces based on configuration...

a1: Adapter 1: nat

a1: Adapter 2: hostonly

==> a1: Forwarding ports...

a1: 22 (guest) => 2202 (host) (adapter 1)

==> a1: Running 'pre-boot' VM customizations...

==> a1: Booting VM...

==> a1: Waiting for machine to boot. This may take a few minutes...

a1: SSH address: 127.0.0.1:2202

a1: SSH username: vagrant

a1: SSH auth method: private key

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

a1: Warning: Remote connection disconnect. Retrying...

==> a1: Machine booted and ready!

==> a1: Checking for guest additions in VM...

==> a1: Setting hostname...

==> a1: Configuring and enabling network interfaces...

==> a1: Mounting shared folders...

a1: /vagrant => D:/veits/Vagrant/ubuntu-trusty64-docker_2017-02/dcos-vagrant

==> a1: Updating /etc/hosts file on active guest machines...

==> a1: Updating /etc/hosts file on host machine (password may be required)...

==> a1: Running provisioner: shell...

a1: Running: inline script

==> a1: Running provisioner: dcos_ssh...

host: Found existing keys

==> a1: Inserting generated public key within guest...

==> a1: Configuring vagrant to connect using generated private key...

==> a1: Removing insecure key from the guest, if it's present...

==> a1: Running provisioner: shell...

a1: Running: script: Certificate Authorities

==> a1: >>> Installing Certificate Authorities

==> a1: Running provisioner: shell...

a1: Running: script: Install Probe

==> a1: Probe already installed: /usr/local/sbin/probe

==> a1: Running provisioner: shell...

a1: Running: script: Install jq

==> a1: jq already installed: /usr/local/sbin/jq

==> a1: Running provisioner: shell...

a1: Running: script: Install DC/OS Postflight

==> a1: >>> Installing DC/OS Postflight: /usr/local/sbin/dcos-postflight

==> a1: Running provisioner: shell...

a1: Running: script: Install Mesos Memory Modifier

==> a1: >>> Installing Mesos Memory Modifier: /usr/local/sbin/mesos-memory

==> a1: Running provisioner: shell...

a1: Running: script: DC/OS Agent-private

==> a1: Skipping DC/OS private agent install (boot machine will provision in parallel)

==> p1: Importing base box 'mesosphere/dcos-centos-virtualbox'...

==> p1: Matching MAC address for NAT networking...

==> p1: Checking if box 'mesosphere/dcos-centos-virtualbox' is up to date...

==> p1: Setting the name of the VM: p1.dcos

==> p1: Fixed port collision for 22 => 2222. Now on port 2203.

==> p1: Clearing any previously set network interfaces...

==> p1: Preparing network interfaces based on configuration...

p1: Adapter 1: nat

p1: Adapter 2: hostonly

==> p1: Forwarding ports...

p1: 22 (guest) => 2203 (host) (adapter 1)

==> p1: Running 'pre-boot' VM customizations...

==> p1: Booting VM...

==> p1: Waiting for machine to boot. This may take a few minutes...

p1: SSH address: 127.0.0.1:2203

p1: SSH username: vagrant

p1: SSH auth method: private key

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

p1: Warning: Remote connection disconnect. Retrying...

==> p1: Machine booted and ready!

==> p1: Checking for guest additions in VM...

==> p1: Setting hostname...

==> p1: Configuring and enabling network interfaces...

==> p1: Mounting shared folders...

p1: /vagrant => D:/veits/Vagrant/ubuntu-trusty64-docker_2017-02/dcos-vagrant

==> p1: Updating /etc/hosts file on active guest machines...

==> p1: Updating /etc/hosts file on host machine (password may be required)...

==> p1: Running provisioner: shell...

p1: Running: inline script

==> p1: Running provisioner: dcos_ssh...

host: Found existing keys

==> p1: Inserting generated public key within guest...

==> p1: Configuring vagrant to connect using generated private key...

==> p1: Removing insecure key from the guest, if it's present...

==> p1: Running provisioner: shell...

p1: Running: script: Certificate Authorities

==> p1: >>> Installing Certificate Authorities

==> p1: Running provisioner: shell...

p1: Running: script: Install Probe

==> p1: Probe already installed: /usr/local/sbin/probe

==> p1: Running provisioner: shell...

p1: Running: script: Install jq

==> p1: jq already installed: /usr/local/sbin/jq

==> p1: Running provisioner: shell...

p1: Running: script: Install DC/OS Postflight

==> p1: >>> Installing DC/OS Postflight: /usr/local/sbin/dcos-postflight

==> p1: Running provisioner: shell...

p1: Running: script: Install Mesos Memory Modifier

==> p1: >>> Installing Mesos Memory Modifier: /usr/local/sbin/mesos-memory

==> p1: Running provisioner: shell...

p1: Running: script: DC/OS Agent-public

==> p1: Skipping DC/OS public agent install (boot machine will provision in parallel)

==> boot: Importing base box 'mesosphere/dcos-centos-virtualbox'...

==> boot: Matching MAC address for NAT networking...

==> boot: Checking if box 'mesosphere/dcos-centos-virtualbox' is up to date...

==> boot: Setting the name of the VM: boot.dcos

==> boot: Fixed port collision for 22 => 2222. Now on port 2204.

==> boot: Clearing any previously set network interfaces...

==> boot: Preparing network interfaces based on configuration...

boot: Adapter 1: nat

boot: Adapter 2: hostonly

==> boot: Forwarding ports...

boot: 22 (guest) => 2204 (host) (adapter 1)

==> boot: Running 'pre-boot' VM customizations...

==> boot: Booting VM...

==> boot: Waiting for machine to boot. This may take a few minutes...

boot: SSH address: 127.0.0.1:2204

boot: SSH username: vagrant

boot: SSH auth method: private key

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

boot: Warning: Remote connection disconnect. Retrying...

==> boot: Machine booted and ready!

==> boot: Checking for guest additions in VM...

==> boot: Setting hostname...

==> boot: Configuring and enabling network interfaces...

==> boot: Mounting shared folders...

boot: /vagrant => D:/veits/Vagrant/ubuntu-trusty64-docker_2017-02/dcos-vagrant

==> boot: Updating /etc/hosts file on active guest machines...

==> boot: Updating /etc/hosts file on host machine (password may be required)...

==> boot: Running provisioner: shell...

boot: Running: inline script

==> boot: Running provisioner: dcos_ssh...

host: Found existing keys

==> boot: Inserting generated public key within guest...

==> boot: Configuring vagrant to connect using generated private key...

==> boot: Removing insecure key from the guest, if it's present...

==> boot: Running provisioner: shell...

boot: Running: script: Certificate Authorities

==> boot: >>> Installing Certificate Authorities

==> boot: Running provisioner: shell...

boot: Running: script: Install Probe

==> boot: Probe already installed: /usr/local/sbin/probe

==> boot: Running provisioner: shell...

boot: Running: script: Install jq

==> boot: jq already installed: /usr/local/sbin/jq

==> boot: Running provisioner: shell...

boot: Running: script: Install DC/OS Postflight

==> boot: >>> Installing DC/OS Postflight: /usr/local/sbin/dcos-postflight

==> boot: Running provisioner: shell...

boot: Running: script: DC/OS Boot

==> boot: Error: No such image or container: zookeeper-boot

==> boot: >>> Starting zookeeper (for exhibitor bootstrap and quorum)

==> boot: a58a678182b4c60df5fd4e1a0b86407456a33c75f4289c7fd7b0ce761afed567

==> boot: Error: No such image or container: nginx-boot

==> boot: >>> Starting nginx (for distributing bootstrap artifacts to cluster)

==> boot: c4bceea034f4d7488ae5ddd6ed708640a56064b191cd3d640a3311a58c5dcb5b

==> boot: >>> Downloading dcos_generate_config.sh (for building bootstrap image for system)

==> boot: % Total % Received % Xferd Average Speed Time Time Time Current

==> boot: Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

22 723M 22 160M 0 0 171M 0 0:00:04 --:--:-- 0:00:04 171M

41 723M 41 300M 0 0 155M 0 0:00:04 0:00:01 0:00:03 139M

65 723M 65 471M 0 0 160M 0 0:00:04 0:00:02 0:00:02 155M

88 723M 88 642M 0 0 163M 0 0:00:04 0:00:03 0:00:01 160M

100 723M 100 723M 0 0 164M 0 0:00:04 0:00:04 --:--:-- 163M

==> boot: Running provisioner: dcos_install...

==> boot: Reading etc/config-1.8.yaml

==> boot: Analyzing machines

==> boot: Generating Configuration: ~/dcos/genconf/config.yaml

==> boot: sudo: cat << EOF > ~/dcos/genconf/config.yaml

==> boot: ---

==> boot: master_list:

==> boot: - 192.168.65.90

==> boot: agent_list:

==> boot: - 192.168.65.111

==> boot: - 192.168.65.60

==> boot: cluster_name: dcos-vagrant

==> boot: bootstrap_url: http://192.168.65.50

==> boot: exhibitor_storage_backend: static

==> boot: master_discovery: static

==> boot: resolvers:

==> boot: - 10.0.2.3

==> boot: superuser_username: admin

==> boot: superuser_password_hash: "\$6\$rounds=656000\$123o/Qz.InhbkbsO\$kn5IkpWm5CplEorQo7jG/27LkyDgWrml36lLxDtckZkCxu22uihAJ4DOJVVnNbsz/Y5MCK3B1InquE6E7Jmh30"

==> boot: ssh_port: 22

==> boot: ssh_user: vagrant

==> boot: check_time: false

==> boot: exhibitor_zk_hosts: 192.168.65.50:2181

==> boot:

==> boot: EOF

==> boot:

==> boot: Generating IP Detection Script: ~/dcos/genconf/ip-detect

==> boot: sudo: cat << 'EOF' > ~/dcos/genconf/ip-detect

==> boot: #!/usr/bin/env bash

==> boot: set -o errexit

==> boot: set -o nounset

==> boot: set -o pipefail

==> boot: echo $(/usr/sbin/ip route show to match 192.168.65.90 | grep -Eo '[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}' | tail -1)

==> boot:

==> boot: EOF

==> boot:

==> boot: Importing Private SSH Key: ~/dcos/genconf/ssh_key

==> boot: sudo: cp /vagrant/.vagrant/dcos/private_key_vagrant ~/dcos/genconf/ssh_key

==> boot:

==> boot: Generating DC/OS Installer Files: ~/dcos/genconf/serve/

==> boot: sudo: cd ~/dcos && bash ~/dcos/dcos_generate_config.sh --genconf && cp -rpv ~/dcos/genconf/serve/* /var/tmp/dcos/ && echo ok > /var/tmp/dcos/ready

==> boot:

==> boot: Extracting image from this script and loading into docker daemon, this step can take a few minutes

==> boot: dcos-genconf.602edc1b4da9364297-5df43052907c021eeb.tar

==> boot: ====> EXECUTING CONFIGURATION GENERATION

==> boot: Generating configuration files...

==> boot: Final arguments:{

==> boot: "adminrouter_auth_enabled":"true",

==> boot: "bootstrap_id":"5df43052907c021eeb5de145419a3da1898c58a5",

==> boot: "bootstrap_tmp_dir":"tmp",

==> boot: "bootstrap_url":"http://192.168.65.50",

==> boot: "check_time":"false",

==> boot: "cluster_docker_credentials":"{}",

==> boot: "cluster_docker_credentials_dcos_owned":"false",

==> boot: "cluster_docker_credentials_enabled":"false",

==> boot: "cluster_docker_credentials_write_to_etc":"false",

==> boot: "cluster_docker_registry_enabled":"false",

==> boot: "cluster_docker_registry_url":"",

==> boot: "cluster_name":"dcos-vagrant",

==> boot: "cluster_packages":"[\"dcos-config--setup_4869fa95533aed5aad36093272289e6bd389b458\", \"dcos-metadata--setup_4869fa95533aed5aad36093272289e6bd389b458\"]",

==> boot: "config_id":"4869fa95533aed5aad36093272289e6bd389b458",

==> boot: "config_yaml":" \"agent_list\": |-\n [\"192.168.65.111\", \"192.168.65.60\"]\n \"bootstrap_url\": |-\n http://192.168.65.50\n \"check_time\": |-\n false\n \"cluster_name\": |-\n dcos-vagrant\n \"exhibitor_storage_backend\": |-\n static\n \"exhibitor_zk_hosts\": |-\n 192.168.65.50:2181\n \"master_discovery\": |-\n static\n \"master_list\": |-\n [\"192.168.65.90\"]\n \"provider\": |-\n onprem\n \"resolvers\": |-\n [\"10.0.2.3\"]\n \"ssh_port\": |-\n 22\n \"ssh_user\": |-\n vagrant\n \"superuser_password_hash\": |-\n $6$rounds=656000$123o/Qz.InhbkbsO$kn5IkpWm5CplEorQo7jG/27LkyDgWrml36lLxDtckZkCxu22uihAJ4DOJVVnNbsz/Y5MCK3B1InquE6E7Jmh30\n \"superuser_username\": |-\n admin\n",

==> boot: "curly_pound":"{#",

==> boot: "custom_auth":"false",

==> boot: "dcos_gen_resolvconf_search_str":"",

==> boot: "dcos_image_commit":"602edc1b4da9364297d166d4857fc8ed7b0b65ca",

==> boot: "dcos_overlay_config_attempts":"4",

==> boot: "dcos_overlay_enable":"true",

==> boot: "dcos_overlay_mtu":"1420",

==> boot: "dcos_overlay_network":"{\"vtep_subnet\": \"44.128.0.0/20\", \"overlays\": [{\"prefix\": 24, \"name\": \"dcos\", \"subnet\": \"9.0.0.0/8\"}], \"vtep_mac_oui\": \"70:B3:D5:00:00:00\"}",

==> boot: "dcos_remove_dockercfg_enable":"false",

==> boot: "dcos_version":"1.8.8",

==> boot: "dns_search":"",

==> boot: "docker_remove_delay":"1hrs",

==> boot: "docker_stop_timeout":"20secs",

==> boot: "exhibitor_static_ensemble":"1:192.168.65.90",

==> boot: "exhibitor_storage_backend":"static",

==> boot: "expanded_config":"\"DO NOT USE THIS AS AN ARGUMENT TO OTHER ARGUMENTS. IT IS TEMPORARY\"",

==> boot: "gc_delay":"2days",

==> boot: "ip_detect_contents":"'#!/usr/bin/env bash\n\n set -o errexit\n\n set -o nounset\n\n set -o pipefail\n\n echo $(/usr/sbin/ip route show to match 192.168.65.90 | grep -Eo ''[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}''\n | tail -1)\n\n\n '\n",

==> boot: "ip_detect_filename":"genconf/ip-detect",

==> boot: "ip_detect_public_contents":"'#!/usr/bin/env bash\n\n set -o errexit\n\n set -o nounset\n\n set -o pipefail\n\n echo $(/usr/sbin/ip route show to match 192.168.65.90 | grep -Eo ''[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}''\n | tail -1)\n\n\n '\n",

==> boot: "master_discovery":"static",

==> boot: "master_dns_bindall":"true",

==> boot: "master_list":"[\"192.168.65.90\"]",

==> boot: "master_quorum":"1",

==> boot: "mesos_container_logger":"org_apache_mesos_LogrotateContainerLogger",

==> boot: "mesos_dns_ip_sources":"[\"host\", \"netinfo\"]",

==> boot: "mesos_dns_resolvers_str":"\"resolvers\": [\"10.0.2.3\"]",

==> boot: "mesos_hooks":"",

==> boot: "mesos_isolation":"cgroups/cpu,cgroups/mem,disk/du,network/cni,filesystem/linux,docker/runtime,docker/volume",

==> boot: "mesos_log_directory_max_files":"162",

==> boot: "mesos_log_retention_count":"137",

==> boot: "mesos_log_retention_mb":"4000",

==> boot: "minuteman_forward_metrics":"false",

==> boot: "minuteman_max_named_ip":"11.255.255.255",

==> boot: "minuteman_max_named_ip_erltuple":"{11,255,255,255}",

==> boot: "minuteman_min_named_ip":"11.0.0.0",

==> boot: "minuteman_min_named_ip_erltuple":"{11,0,0,0}",

==> boot: "num_masters":"1",

==> boot: "oauth_auth_host":"https://dcos.auth0.com",

==> boot: "oauth_auth_redirector":"https://auth.dcos.io",

==> boot: "oauth_available":"true",

==> boot: "oauth_client_id":"3yF5TOSzdlI45Q1xspxzeoGBe9fNxm9m",

==> boot: "oauth_enabled":"true",

==> boot: "oauth_issuer_url":"https://dcos.auth0.com/",

==> boot: "package_names":"[\n \"dcos-config\",\n \"dcos-metadata\"\n]",

==> boot: "provider":"onprem",

==> boot: "resolvers":"[\"10.0.2.3\"]",

==> boot: "resolvers_str":"10.0.2.3",

==> boot: "rexray_config":"{\"rexray\": {\"modules\": {\"default-docker\": {\"disabled\": true}, \"default-admin\": {\"host\": \"tcp://127.0.0.1:61003\"}}, \"loglevel\": \"info\"}}",

==> boot: "rexray_config_contents":"\"rexray:\\n loglevel: info\\n modules:\\n default-admin:\\n host: tcp://127.0.0.1:61003\\n\\\n \\ default-docker:\\n disabled: true\\n\"\n",

==> boot: "rexray_config_preset":"",

==> boot: "telemetry_enabled":"true",

==> boot: "template_filenames":"[\n \"dcos-config.yaml\",\n \"cloud-config.yaml\",\n \"dcos-metadata.yaml\",\n \"dcos-services.yaml\"\n]",

==> boot: "ui_banner":"false",

==> boot: "ui_banner_background_color":"#1E232F",

==> boot: "ui_banner_dismissible":"null",

==> boot: "ui_banner_footer_content":"null",

==> boot: "ui_banner_foreground_color":"#FFFFFF",

==> boot: "ui_banner_header_content":"null",

==> boot: "ui_banner_header_title":"null",

==> boot: "ui_banner_image_path":"null",

==> boot: "ui_branding":"false",

==> boot: "ui_external_links":"false",

==> boot: "use_mesos_hooks":"false",

==> boot: "use_proxy":"false",

==> boot: "user_arguments":"{\n \"agent_list\":\"[\\\"192.168.65.111\\\", \\\"192.168.65.60\\\"]\",\n \"bootstrap_url\":\"http://192.168.65.50\",\n \"check_time\":\"false\",\n \"cluster_name\":\"dcos-vagrant\",\n \"exhibitor_storage_backend\":\"static\",\n \"exhibitor_zk_hosts\":\"192.168.65.50:2181\",\n \"master_discovery\":\"static\",\n \"master_list\":\"[\\\"192.168.65.90\\\"]\",\n \"provider\":\"onprem\",\n \"resolvers\":\"[\\\"10.0.2.3\\\"]\",\n \"ssh_port\":\"22\",\n \"ssh_user\":\"vagrant\",\n \"superuser_password_hash\":\"$6$rounds=656000$123o/Qz.InhbkbsO$kn5IkpWm5CplEorQo7jG/27LkyDgWrml36lLxDtckZkCxu22uihAJ4DOJVVnNbsz/Y5MCK3B1InquE6E7Jmh30\",\n \"superuser_username\":\"admin\"\n}",

==> boot: "weights":""

==> boot: }

==> boot: Generating configuration files...

==> boot: Final arguments:{

==> boot: "adminrouter_auth_enabled":"true",

==> boot: "bootstrap_id":"5df43052907c021eeb5de145419a3da1898c58a5",

==> boot: "bootstrap_tmp_dir":"tmp",

==> boot: "bootstrap_url":"http://192.168.65.50",

==> boot: "check_time":"false",

==> boot: "cluster_docker_credentials":"{}",

==> boot: "cluster_docker_credentials_dcos_owned":"false",

==> boot: "cluster_docker_credentials_enabled":"false",

==> boot: "cluster_docker_credentials_write_to_etc":"false",

==> boot: "cluster_docker_registry_enabled":"false",

==> boot: "cluster_docker_registry_url":"",

==> boot: "cluster_name":"dcos-vagrant",

==> boot: "cluster_packages":"[\"dcos-config--setup_4869fa95533aed5aad36093272289e6bd389b458\", \"dcos-metadata--setup_4869fa95533aed5aad36093272289e6bd389b458\"]",

==> boot: "config_id":"4869fa95533aed5aad36093272289e6bd389b458",

==> boot: "config_yaml":" \"agent_list\": |-\n [\"192.168.65.111\", \"192.168.65.60\"]\n \"bootstrap_url\": |-\n http://192.168.65.50\n \"check_time\": |-\n false\n \"cluster_name\": |-\n dcos-vagrant\n \"exhibitor_storage_backend\": |-\n static\n \"exhibitor_zk_hosts\": |-\n 192.168.65.50:2181\n \"master_discovery\": |-\n static\n \"master_list\": |-\n [\"192.168.65.90\"]\n \"provider\": |-\n onprem\n \"resolvers\": |-\n [\"10.0.2.3\"]\n \"ssh_port\": |-\n 22\n \"ssh_user\": |-\n vagrant\n \"superuser_password_hash\": |-\n $6$rounds=656000$123o/Qz.InhbkbsO$kn5IkpWm5CplEorQo7jG/27LkyDgWrml36lLxDtckZkCxu22uihAJ4DOJVVnNbsz/Y5MCK3B1InquE6E7Jmh30\n \"superuser_username\": |-\n admin\n",

==> boot: "curly_pound":"{#",

==> boot: "custom_auth":"false",

==> boot: "dcos_gen_resolvconf_search_str":"",

==> boot: "dcos_image_commit":"602edc1b4da9364297d166d4857fc8ed7b0b65ca",

==> boot: "dcos_overlay_config_attempts":"4",

==> boot: "dcos_overlay_enable":"true",

==> boot: "dcos_overlay_mtu":"1420",

==> boot: "dcos_overlay_network":"{\"vtep_subnet\": \"44.128.0.0/20\", \"overlays\": [{\"prefix\": 24, \"name\": \"dcos\", \"subnet\": \"9.0.0.0/8\"}], \"vtep_mac_oui\": \"70:B3:D5:00:00:00\"}",

==> boot: "dcos_remove_dockercfg_enable":"false",

==> boot: "dcos_version":"1.8.8",

==> boot: "dns_search":"",

==> boot: "docker_remove_delay":"1hrs",

==> boot: "docker_stop_timeout":"20secs",

==> boot: "exhibitor_static_ensemble":"1:192.168.65.90",

==> boot: "exhibitor_storage_backend":"static",

==> boot: "expanded_config":"\"DO NOT USE THIS AS AN ARGUMENT TO OTHER ARGUMENTS. IT IS TEMPORARY\"",

==> boot: "gc_delay":"2days",

==> boot: "ip_detect_contents":"'#!/usr/bin/env bash\n\n set -o errexit\n\n set -o nounset\n\n set -o pipefail\n\n echo $(/usr/sbin/ip route show to match 192.168.65.90 | grep -Eo ''[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}''\n | tail -1)\n\n\n '\n",

==> boot: "ip_detect_filename":"genconf/ip-detect",

==> boot: "ip_detect_public_contents":"'#!/usr/bin/env bash\n\n set -o errexit\n\n set -o nounset\n\n set -o pipefail\n\n echo $(/usr/sbin/ip route show to match 192.168.65.90 | grep -Eo ''[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}''\n | tail -1)\n\n\n '\n",

==> boot: "master_discovery":"static",

==> boot: "master_dns_bindall":"true",

==> boot: "master_list":"[\"192.168.65.90\"]",

==> boot: "master_quorum":"1",

==> boot: "mesos_container_logger":"org_apache_mesos_LogrotateContainerLogger",

==> boot: "mesos_dns_ip_sources":"[\"host\", \"netinfo\"]",

==> boot: "mesos_dns_resolvers_str":"\"resolvers\": [\"10.0.2.3\"]",

==> boot: "mesos_hooks":"",

==> boot: "mesos_isolation":"cgroups/cpu,cgroups/mem,disk/du,network/cni,filesystem/linux,docker/runtime,docker/volume",

==> boot: "mesos_log_directory_max_files":"162",

==> boot: "mesos_log_retention_count":"137",

==> boot: "mesos_log_retention_mb":"4000",

==> boot: "minuteman_forward_metrics":"false",

==> boot: "minuteman_max_named_ip":"11.255.255.255",

==> boot: "minuteman_max_named_ip_erltuple":"{11,255,255,255}",

==> boot: "minuteman_min_named_ip":"11.0.0.0",

==> boot: "minuteman_min_named_ip_erltuple":"{11,0,0,0}",

==> boot: "num_masters":"1",

==> boot: "oauth_auth_host":"https://dcos.auth0.com",

==> boot: "oauth_auth_redirector":"https://auth.dcos.io",

==> boot: "oauth_available":"true",

==> boot: "oauth_client_id":"3yF5TOSzdlI45Q1xspxzeoGBe9fNxm9m",

==> boot: "oauth_enabled":"true",

==> boot: "oauth_issuer_url":"https://dcos.auth0.com/",

==> boot: "package_names":"[\n \"dcos-config\",\n \"dcos-metadata\"\n]",

==> boot: "provider":"onprem",

==> boot: "resolvers":"[\"10.0.2.3\"]",

==> boot: "resolvers_str":"10.0.2.3",

==> boot: "rexray_config":"{\"rexray\": {\"modules\": {\"default-docker\": {\"disabled\": true}, \"default-admin\": {\"host\": \"tcp://127.0.0.1:61003\"}}, \"loglevel\": \"info\"}}",

==> boot: "rexray_config_contents":"\"rexray:\\n loglevel: info\\n modules:\\n default-admin:\\n host: tcp://127.0.0.1:61003\\n\\\n \\ default-docker:\\n disabled: true\\n\"\n",

==> boot: "rexray_config_preset":"",

==> boot: "telemetry_enabled":"true",

==> boot: "template_filenames":"[\n \"dcos-config.yaml\",\n \"cloud-config.yaml\",\n \"dcos-metadata.yaml\",\n \"dcos-services.yaml\"\n]",

==> boot: "ui_banner":"false",

==> boot: "ui_banner_background_color":"#1E232F",

==> boot: "ui_banner_dismissible":"null",

==> boot: "ui_banner_footer_content":"null",

==> boot: "ui_banner_foreground_color":"#FFFFFF",

==> boot: "ui_banner_header_content":"null",

==> boot: "ui_banner_header_title":"null",

==> boot: "ui_banner_image_path":"null",

==> boot: "ui_branding":"false",

==> boot: "ui_external_links":"false",

==> boot: "use_mesos_hooks":"false",

==> boot: "use_proxy":"false",

==> boot: "user_arguments":"{\n \"agent_list\":\"[\\\"192.168.65.111\\\", \\\"192.168.65.60\\\"]\",\n \"bootstrap_url\":\"http://192.168.65.50\",\n \"check_time\":\"false\",\n \"cluster_name\":\"dcos-vagrant\",\n \"exhibitor_storage_backend\":\"static\",\n \"exhibitor_zk_hosts\":\"192.168.65.50:2181\",\n \"master_discovery\":\"static\",\n \"master_list\":\"[\\\"192.168.65.90\\\"]\",\n \"provider\":\"onprem\",\n \"resolvers\":\"[\\\"10.0.2.3\\\"]\",\n \"ssh_port\":\"22\",\n \"ssh_user\":\"vagrant\",\n \"superuser_password_hash\":\"$6$rounds=656000$123o/Qz.InhbkbsO$kn5IkpWm5CplEorQo7jG/27LkyDgWrml36lLxDtckZkCxu22uihAJ4DOJVVnNbsz/Y5MCK3B1InquE6E7Jmh30\",\n \"superuser_username\":\"admin\"\n}",

==> boot: "weights":""

==> boot: }

==> boot: Package filename: packages/dcos-config/dcos-config--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz

==> boot: Package filename: packages/dcos-metadata/dcos-metadata--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz

==> boot: Generating Bash configuration files for DC/OS

==> boot: ‘/root/dcos/genconf/serve/bootstrap’ -> ‘/var/tmp/dcos/bootstrap’

==> boot: ‘/root/dcos/genconf/serve/bootstrap/5df43052907c021eeb5de145419a3da1898c58a5.bootstrap.tar.xz’ -> ‘/var/tmp/dcos/bootstrap/5df43052907c021eeb5de145419a3da1898c58a5.bootstrap.tar.xz’

==> boot: ‘/root/dcos/genconf/serve/bootstrap/5df43052907c021eeb5de145419a3da1898c58a5.active.json’ -> ‘/var/tmp/dcos/bootstrap/5df43052907c021eeb5de145419a3da1898c58a5.active.json’

==> boot: ‘/root/dcos/genconf/serve/bootstrap.latest’ -> ‘/var/tmp/dcos/bootstrap.latest’

==> boot: ‘/root/dcos/genconf/serve/cluster-package-info.json’ -> ‘/var/tmp/dcos/cluster-package-info.json’

==> boot: ‘/root/dcos/genconf/serve/dcos_install.sh’ -> ‘/var/tmp/dcos/dcos_install.sh’

==> boot: ‘/root/dcos/genconf/serve/packages’ -> ‘/var/tmp/dcos/packages’

==> boot: ‘/root/dcos/genconf/serve/packages/dcos-metadata’ -> ‘/var/tmp/dcos/packages/dcos-metadata’

==> boot: ‘/root/dcos/genconf/serve/packages/dcos-metadata/dcos-metadata--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz’ -> ‘/var/tmp/dcos/packages/dcos-metadata/dcos-metadata--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz’

==> boot: ‘/root/dcos/genconf/serve/packages/dcos-config’ -> ‘/var/tmp/dcos/packages/dcos-config’

==> boot: ‘/root/dcos/genconf/serve/packages/dcos-config/dcos-config--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz’ -> ‘/var/tmp/dcos/packages/dcos-config/dcos-config--setup_4869fa95533aed5aad36093272289e6bd389b458.tar.xz’

==> m1: Installing DC/OS (master)

==> m1: sudo: bash -ceu "curl --fail --location --silent --show-error --verbose http://boot.dcos/dcos_install.sh | bash -s -- master"

==> m1:

==> m1: * About to connect() to boot.dcos port 80 (#0)

==> m1: * Trying 192.168.65.50...

==> m1: * Connected to boot.dcos (192.168.65.50) port 80 (#0)

==> m1: > GET /dcos_install.sh HTTP/1.1

==> m1: > User-Agent: curl/7.29.0

==> m1: > Host: boot.dcos

==> m1: > Accept: */*

==> m1: >

==> m1: < HTTP/1.1 200 OK

==> m1: < Server: nginx/1.11.4

==> m1: < Date: Tue, 07 Mar 2017 22:46:20 GMT

==> m1: < Content-Type: application/octet-stream

==> m1: < Content-Length: 15293

==> m1: < Last-Modified: Tue, 07 Mar 2017 22:46:11 GMT

==> m1: < Connection: keep-alive

==> m1: < ETag: "58bf3833-3bbd"

==> m1: < Accept-Ranges: bytes

==> m1: <

==> m1: { [data not shown]

==> m1: * Connection #0 to host boot.dcos left intact

==> m1: Starting DC/OS Install Process

==> m1: Running preflight checks

==> m1: Checking if DC/OS is already installed:

==> m1: PASS (Not installed)

==> m1: PASS Is SELinux disabled?

==> m1: Checking if docker is installed and in PATH:

==> m1: PASS

==> m1: Checking docker version requirement (>= 1.6):

==> m1: PASS (1.11.2)

==> m1: Checking if curl is installed and in PATH:

==> m1: PASS

==> m1: Checking if bash is installed and in PATH:

==> m1: PASS

==> m1: Checking if ping is installed and in PATH:

==> m1: PASS

==> m1: Checking if tar is installed and in PATH:

==> m1: PASS

==> m1: Checking if xz is installed and in PATH:

==> m1: PASS

==> m1: Checking if unzip is installed and in PATH:

==> m1: PASS

==> m1: Checking if ipset is installed and in PATH:

==> m1: PASS

==> m1: Checking if systemd-notify is installed and in PATH:

==> m1: PASS

==> m1: Checking if systemd is installed and in PATH:

==> m1: PASS

==> m1: Checking systemd version requirement (>= 200):

==> m1: PASS (219)

==> m1: Checking if group 'nogroup' exists:

==> m1: PASS

==> m1: Checking if port 53 (required by spartan) is in use:

==> m1: PASS

==> m1: Checking if port 80 (required by adminrouter) is in use:

==> m1: PASS

==> m1: Checking if port 443 (required by adminrouter) is in use:

==> m1: PASS

==> m1: Checking if port 1050 (required by 3dt) is in use:

==> m1: PASS

==> m1: Checking if port 2181 (required by zookeeper) is in use:

==> m1: PASS

==> m1: Checking if port 5050 (required by mesos-master) is in use:

==> m1: PASS

==> m1: Checking if port 7070 (required by cosmos) is in use:

==> m1: PASS

==> m1: Checking if port 8080 (required by marathon) is in use:

==> m1: PASS

==> m1: Checking if port 8101 (required by dcos-oauth) is in use:

==> m1: PASS

==> m1: Checking if port 8123 (required by mesos-dns) is in use:

==> m1: PASS

==> m1: Checking if port 8181 (required by exhibitor) is in use:

==> m1: PASS

==> m1: Checking if port 9000 (required by metronome) is in use:

==> m1: PASS

==> m1: Checking if port 9942 (required by metronome) is in use:

==> m1: PASS

==> m1: Checking if port 9990 (required by cosmos) is in use:

==> m1: PASS

==> m1: Checking if port 15055 (required by dcos-history) is in use:

==> m1: PASS

==> m1: Checking if port 33107 (required by navstar) is in use:

==> m1: PASS

==> m1: Checking if port 36771 (required by marathon) is in use:

==> m1: PASS

==> m1: Checking if port 41281 (required by zookeeper) is in use:

==> m1: PASS

==> m1: Checking if port 42819 (required by spartan) is in use:

==> m1: PASS

==> m1: Checking if port 43911 (required by minuteman) is in use:

==> m1: PASS

==> m1: Checking if port 46839 (required by metronome) is in use:

==> m1: PASS

==> m1: Checking if port 61053 (required by mesos-dns) is in use:

==> m1: PASS

==> m1: Checking if port 61420 (required by epmd) is in use:

==> m1: PASS

==> m1: Checking if port 61421 (required by minuteman) is in use:

==> m1: PASS

==> m1: Checking if port 62053 (required by spartan) is in use:

==> m1: PASS

==> m1: Checking if port 62080 (required by navstar) is in use:

==> m1: PASS

==> m1: Checking Docker is configured with a production storage driver:

==> m1: WARNING: bridge-nf-call-iptables is disabled

==> m1: WARNING: bridge-nf-call-ip6tables is disabled

==> m1: PASS (overlay)

==> m1: Creating directories under /etc/mesosphere

==> m1: Creating role file for master

==> m1: Configuring DC/OS

==> m1: Setting and starting DC/OS

==> m1: Created symlink from /etc/systemd/system/multi-user.target.wants/dcos-setup.service to /etc/systemd/system/dcos-setup.service.

==> a1: Installing DC/OS (agent)

==> p1: Installing DC/OS (agent-public)

==> a1: sudo: bash -ceu "curl --fail --location --silent --show-error --verbose http://boot.dcos/dcos_install.sh | bash -s -- slave"

==> p1: sudo: bash -ceu "curl --fail --location --silent --show-error --verbose http://boot.dcos/dcos_install.sh | bash -s -- slave_public"

==> a1:

==> p1:

==> a1: * About to connect() to boot.dcos port 80 (#0)

==> p1: * About to connect() to boot.dcos port 80 (#0)

==> a1: * Trying 192.168.65.50...

==> p1: * Trying 192.168.65.50...

==> a1: * Connected to boot.dcos (192.168.65.50) port 80 (#0)

==> p1: * Connected to boot.dcos (192.168.65.50) port 80 (#0)

==> p1: > GET /dcos_install.sh HTTP/1.1

==> p1: > User-Agent: curl/7.29.0

==> p1: > Host: boot.dcos

==> p1: > Accept: */*

==> p1: >

==> a1: > GET /dcos_install.sh HTTP/1.1

==> a1: > User-Agent: curl/7.29.0

==> a1: > Host: boot.dcos

==> a1: > Accept: */*

==> a1: >

==> p1: < HTTP/1.1 200 OK

==> p1: < Server: nginx/1.11.4

==> p1: < Date: Tue, 07 Mar 2017 22:48:31 GMT

==> p1: < Content-Type: application/octet-stream

==> p1: < Content-Length: 15293

==> p1: < Last-Modified: Tue, 07 Mar 2017 22:46:11 GMT

==> p1: < Connection: keep-alive

==> p1: < ETag: "58bf3833-3bbd"

==> p1: < Accept-Ranges: bytes

==> p1: <

==> p1: { [data not shown]

==> a1: < HTTP/1.1 200 OK

==> a1: < Server: nginx/1.11.4

==> a1: < Date: Tue, 07 Mar 2017 22:48:31 GMT

==> a1: < Content-Type: application/octet-stream

==> a1: < Content-Length: 15293

==> a1: < Last-Modified: Tue, 07 Mar 2017 22:46:11 GMT

==> a1: < Connection: keep-alive

==> a1: < ETag: "58bf3833-3bbd"

==> a1: < Accept-Ranges: bytes

==> a1: <

==> a1: { [data not shown]

==> p1: * Connection #0 to host boot.dcos left intact

==> a1: * Connection #0 to host boot.dcos left intact

==> p1: Starting DC/OS Install Process

==> p1: Running preflight checks

==> p1: Checking if DC/OS is already installed: PASS (Not installed)

==> a1: Starting DC/OS Install Process

==> a1: Running preflight checks

==> a1: Checking if DC/OS is already installed: PASS (Not installed)

==> a1: PASS Is SELinux disabled?

==> p1: PASS Is SELinux disabled?

==> p1: Checking if docker is installed and in PATH:

==> p1: PASS

==> p1: Checking docker version requirement (>= 1.6):

==> p1: PASS (1.11.2)

==> p1: Checking if curl is installed and in PATH:

==> p1: PASS

==> p1: Checking if bash is installed and in PATH:

==> a1: Checking if docker is installed and in PATH:

==> p1: PASS

==> p1: Checking if ping is installed and in PATH:

==> a1: PASS

==> a1: Checking docker version requirement (>= 1.6):

==> p1: PASS

==> p1: Checking if tar is installed and in PATH:

==> a1: PASS (1.11.2)

==> p1: PASS

==> a1: Checking if curl is installed and in PATH:

==> p1: Checking if xz is installed and in PATH:

==> a1: PASS

==> p1: PASS

==> p1: Checking if unzip is installed and in PATH:

==> a1: Checking if bash is installed and in PATH:

==> a1: PASS

==> p1: PASS

==> p1: Checking if ipset is installed and in PATH:

==> p1: PASS

==> p1: Checking if systemd-notify is installed and in PATH:

==> a1: Checking if ping is installed and in PATH:

==> p1: PASS

==> a1: PASS

==> a1: Checking if tar is installed and in PATH:

==> p1: Checking if systemd is installed and in PATH:

==> a1: PASS

==> p1: PASS

==> p1: Checking systemd version requirement (>= 200):

==> a1: Checking if xz is installed and in PATH:

==> p1: PASS (219)

==> p1: Checking if group 'nogroup' exists:

==> p1: PASS

==> p1: Checking if port 53 (required by spartan) is in use:

==> a1: PASS

==> a1: Checking if unzip is installed and in PATH:

==> p1: PASS

==> p1: Checking if port 5051 (required by mesos-agent) is in use:

==> a1: PASS

==> p1: PASS

==> p1: Checking if port 34451 (required by navstar) is in use:

==> a1: Checking if ipset is installed and in PATH:

==> p1: PASS

==> p1: Checking if port 39851 (required by spartan) is in use:

==> a1: PASS

==> p1: PASS

==> p1: Checking if port 43995 (required by minuteman) is in use:

==> a1: Checking if systemd-notify is installed and in PATH:

==> p1: PASS

==> p1: Checking if port 61001 (required by agent-adminrouter) is in use:

==> a1: PASS

==> p1: PASS

==> p1: Checking if port 61420 (required by epmd) is in use:

==> a1: Checking if systemd is installed and in PATH:

==> p1: PASS

==> p1: Checking if port 61421 (required by minuteman) is in use:

==> p1: PASS

==> p1: Checking if port 62053 (required by spartan) is in use:

==> a1: PASS

==> a1: Checking systemd version requirement (>= 200):

==> a1: PASS (219)

==> a1: Checking if group 'nogroup' exists:

==> p1: PASS

==> p1: Checking if port 62080 (required by navstar) is in use:

==> a1: PASS

==> a1: Checking if port 53 (required by spartan) is in use:

==> p1: PASS

==> p1: Checking Docker is configured with a production storage driver:

==> a1: PASS

==> a1: Checking if port 5051 (required by mesos-agent) is in use:

==> p1: WARNING: bridge-nf-call-iptables is disabled

==> p1: WARNING: bridge-nf-call-ip6tables is disabled

==> a1: PASS

==> a1: Checking if port 34451 (required by navstar) is in use:

==> p1: PASS (overlay)

==> p1: Creating directories under /etc/mesosphere

==> a1: PASS

==> a1: Checking if port 39851 (required by spartan) is in use:

==> p1: Creating role file for slave_public

==> a1: PASS

==> a1: Checking if port 43995 (required by minuteman) is in use:

==> p1: Configuring DC/OS

==> a1: PASS

==> a1: Checking if port 61001 (required by agent-adminrouter) is in use:

==> a1: PASS

==> a1: Checking if port 61420 (required by epmd) is in use:

==> a1: PASS

==> a1: Checking if port 61421 (required by minuteman) is in use:

==> a1: PASS

==> a1: Checking if port 62053 (required by spartan) is in use:

==> a1: PASS

==> a1: Checking if port 62080 (required by navstar) is in use:

==> a1: PASS

==> a1: Checking Docker is configured with a production storage driver:

==> p1: Setting and starting DC/OS

==> a1: WARNING: bridge-nf-call-iptables is disabled

==> a1: WARNING: bridge-nf-call-ip6tables is disabled

==> a1: PASS (overlay)

==> a1: Creating directories under /etc/mesosphere

==> a1: Creating role file for slave

==> a1: Configuring DC/OS

==> a1: Setting and starting DC/OS

==> a1: Created symlink from /etc/systemd/system/multi-user.target.wants/dcos-setup.service to /etc/systemd/system/dcos-setup.service.

==> p1: Created symlink from /etc/systemd/system/multi-user.target.wants/dcos-setup.service to /etc/systemd/system/dcos-setup.service.

==> m1: DC/OS Postflight

==> a1: DC/OS Postflight

==> p1: DC/OS Postflight

==> m1: sudo: dcos-postflight

==> a1: sudo: dcos-postflight

==> p1: sudo: dcos-postflight

==> a1:

==> p1:

==> m1:

==> a1: Setting Mesos Memory: 5632 (role=*)

==> a1: sudo: mesos-memory 5632

==> a1:

==> a1: Updating /var/lib/dcos/mesos-resources

==> a1: Restarting Mesos Agent

==> a1: sudo: bash -ceu "systemctl stop dcos-mesos-slave.service && rm -f /var/lib/mesos/slave/meta/slaves/latest && systemctl start dcos-mesos-slave.service --no-block"

==> a1:

==> p1: Setting Mesos Memory: 1024 (role=slave_public)

==> p1: sudo: mesos-memory 1024 slave_public

==> p1:

==> p1: Updating /var/lib/dcos/mesos-resources

==> p1: Restarting Mesos Agent

==> p1: sudo: bash -ceu "systemctl stop dcos-mesos-slave-public.service && rm -f /var/lib/mesos/slave/meta/slaves/latest && systemctl start dcos-mesos-slave-public.service --no-block"

==> p1:

==> boot: DC/OS Installation Complete

==> boot: Web Interface: http://m1.dcos/

The VirtualBox GUI shows the four machines we had seen in the VagrantConfig.yaml. They are up and running:

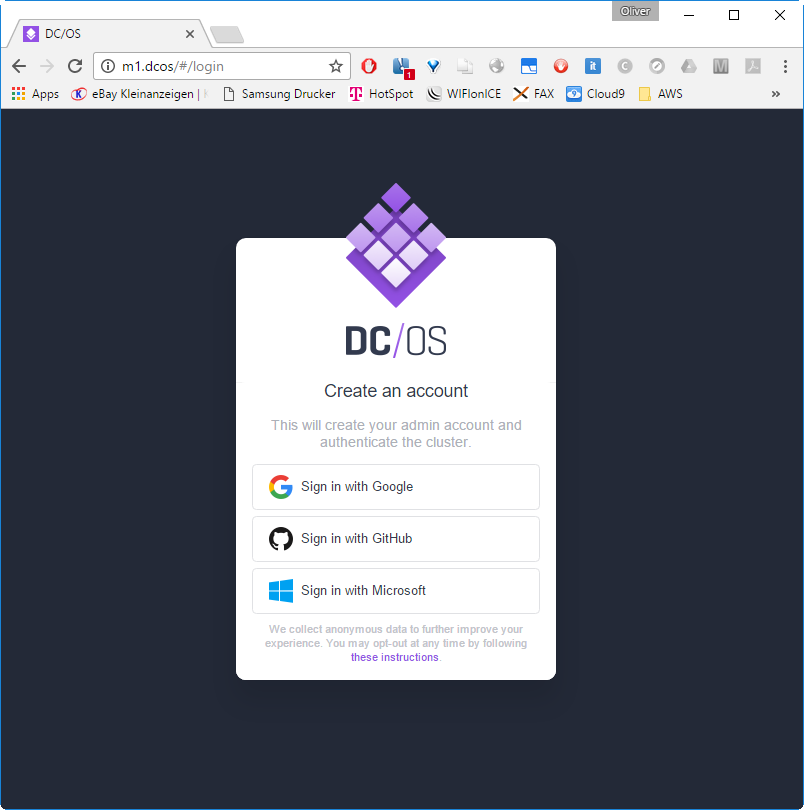

Step 6: Log into the DC/OS GUI

Now let us access the Web UI on m1.dcos:

The Vagrant Hostmanager plugin also works on Windows: it has added m1.dcos and the other FQDNs to the hosts file, which is found at C:\Windows\System32\drivers\etc\hosts. The file contains the DNS mappings for the four machines (a1.dcos, boot.dcos, m1.dcos and p1.dcos). The DNS mapping for spring.acme.org with the alias oinker.acme.org will be missing in your case; it will be added in a later step, when we install the Marathon load balancer based on HAProxy.

The relevant part of the hosts file looks as follows:

## vagrant-hostmanager-start id: 9f1502eb-71bf-4e6a-b3bc-44a83db628b7

192.168.65.111 a1.dcos

192.168.65.50 boot.dcos

192.168.65.90 m1.dcos

192.168.65.60 p1.dcos

192.168.65.60 spring.acme.org oinker.acme.org

## vagrant-hostmanager-end
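To double-check which names the plugin has registered, the block between the two vagrant-hostmanager markers can be read programmatically. The following is a minimal Python sketch, not part of the original setup: the function name `parse_hostmanager_block` and the embedded `SAMPLE` text are illustrative assumptions, and on a real system you would read the hosts file from the path mentioned above instead.

```python
def parse_hostmanager_block(hosts_text):
    """Return {hostname: ip} for all entries between the vagrant-hostmanager markers."""
    mappings = {}
    inside = False
    for raw in hosts_text.splitlines():
        line = raw.strip()
        if line.startswith("## vagrant-hostmanager-start"):
            inside = True
            continue
        if line.startswith("## vagrant-hostmanager-end"):
            inside = False
            continue
        # Ignore everything outside the managed block, blank lines and comments.
        if not inside or not line or line.startswith("#"):
            continue
        ip, *names = line.split()
        for name in names:  # one line may carry several aliases
            mappings[name] = ip
    return mappings


# Sample content copied from the hosts file shown above (illustrative only).
SAMPLE = """\
## vagrant-hostmanager-start id: 9f1502eb-71bf-4e6a-b3bc-44a83db628b7
192.168.65.111 a1.dcos
192.168.65.50 boot.dcos
192.168.65.90 m1.dcos
192.168.65.60 p1.dcos
## vagrant-hostmanager-end
"""

if __name__ == "__main__":
    for name, ip in sorted(parse_hostmanager_block(SAMPLE).items()):
        print(f"{name} -> {ip}")
```

If m1.dcos does not show up in the result on your machine, the Web UI URL used in this step will probably not resolve.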

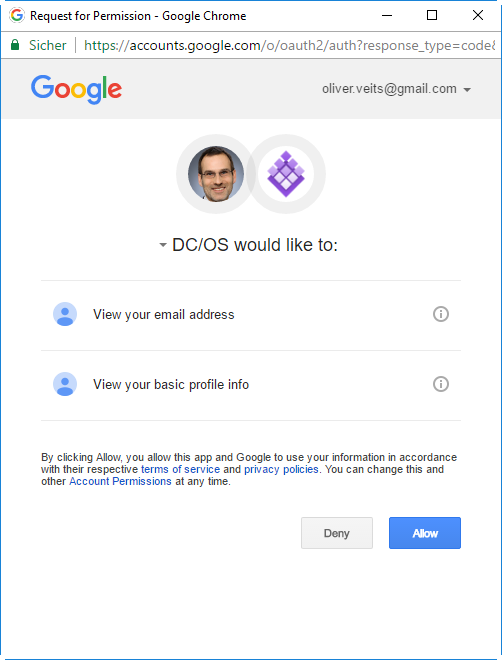

After logging in via Google,

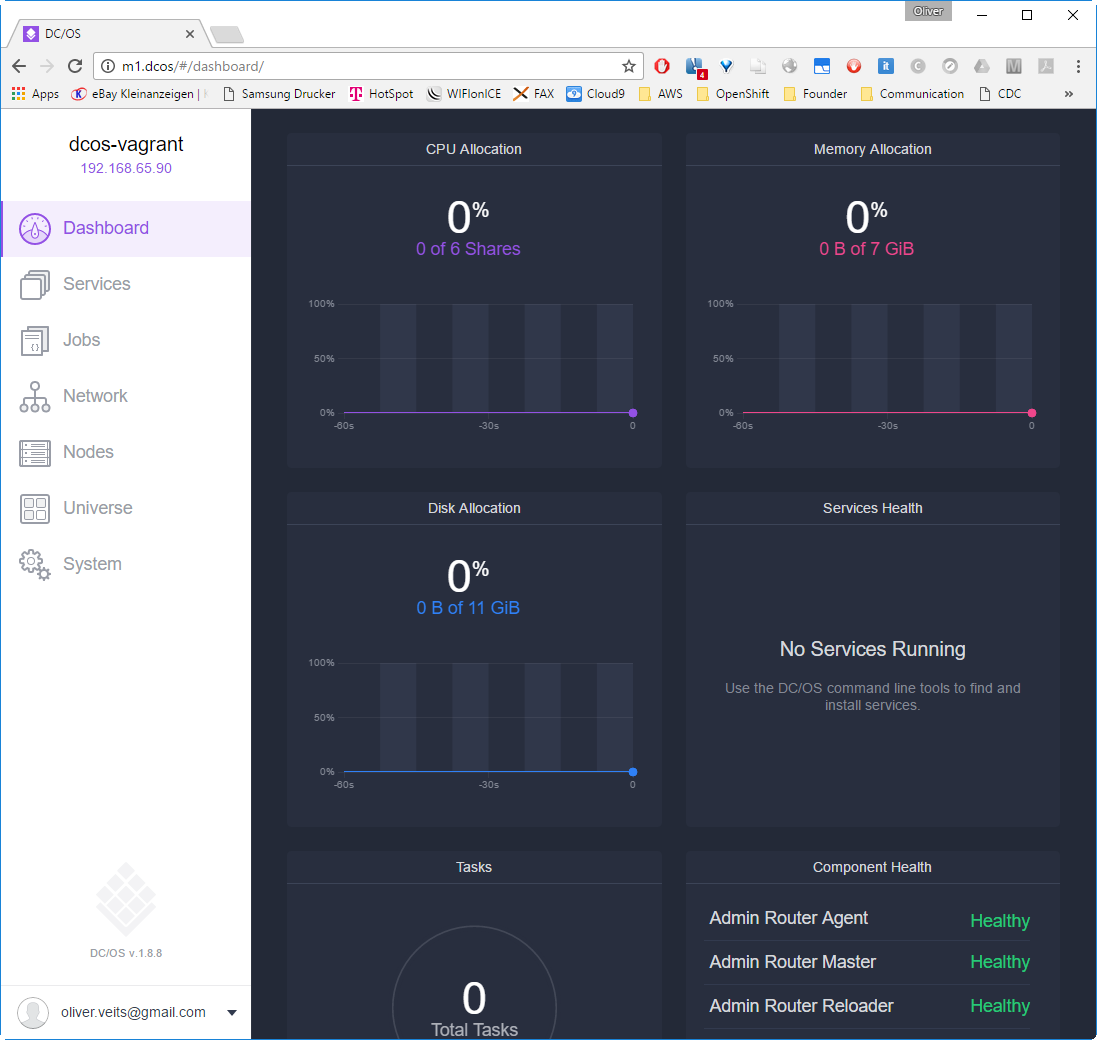

and pressing the Allow button, we reach the DC/OS Dashboard:

(scrolling down)

Step 7: Install the DCOS CLI

Now we will continue to follow the DC/OS 101 Tutorial and install the DC/OS CLI. This can be done by clicking the profile on the lower left of the Web GUI:

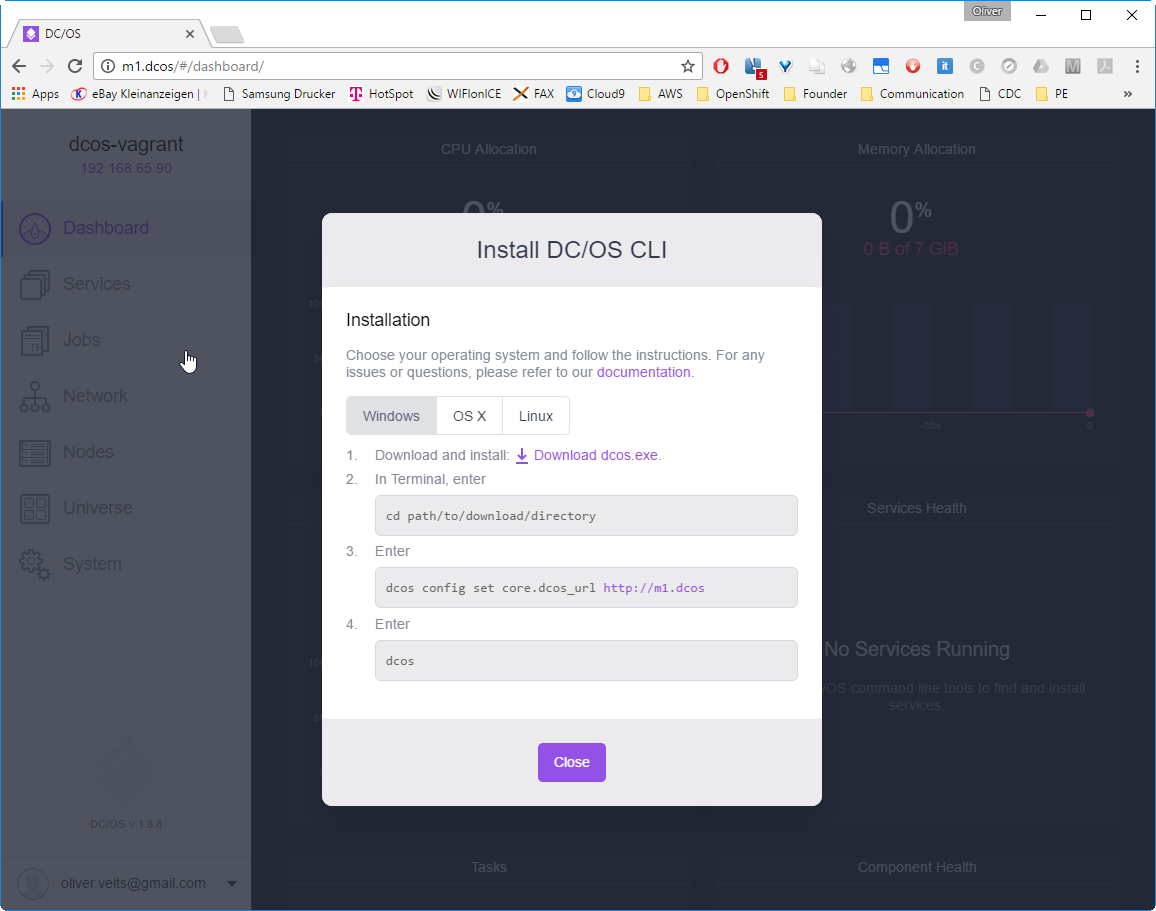

Choose the operating system type you are working on. In my case, I have a Windows system and I have performed the following steps:

Step 8: Configure DC/OS Master URL

First, we cd into the folder where dcos.exe is located (D:\veits\downloads\DCOS CLI in my case), before we configure the core DC/OS URL:

Windows> cd /D "D:\veits\downloads\DCOS CLI"

Windows> dcos config set core.dcos_url http://m1.dcos

Windows> dcos

Command line utility for the Mesosphere Datacenter Operating System (DC/OS). The Mesosphere DC/OS is a distributed operating system built around Apache Mesos. This utility provides tools for easy management of a DC/OS installation.

Available DC/OS commands:

auth Authenticate to DC/OS cluster

config Manage the DC/OS configuration file

experimental Experimental commands. These commands are under development and are subject to change

help Display help information about DC/OS

job Deploy and manage jobs in DC/OS

marathon Deploy and manage applications to DC/OS

node Administer and manage DC/OS cluster nodes

package Install and manage DC/OS software packages

service Manage DC/OS services

task Manage DC/OS tasks

Get detailed command description with 'dcos --help'.

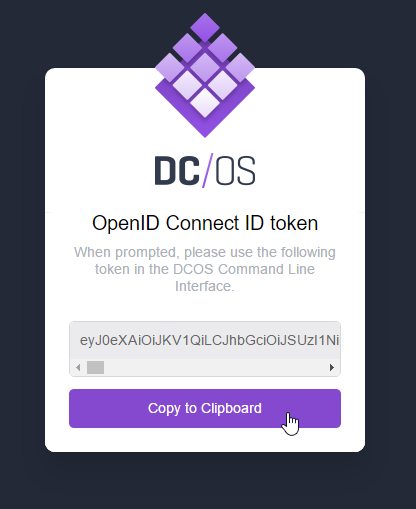

Step 9: Receive Token from the DC/OS Master

Windows> dcos auth login

Please go to the following link in your browser:

http://m1.dcos/login?redirect_uri=urn:ietf:wg:oauth:2.0:oob

Enter OpenID Connect ID Token:eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsIm...-YqOARGFN5Ewcf6YWlw <-------(shortened)

Login successful!

Here, I have copied the link shown above (marked in red) and pasted it into the browser's URL field:

Then I logged in as a Google user:

-> I signed in with Google

-> clicked Copy to Clipboard

-> pasted the clipboard content into the terminal, as already shown above (and repeated here), and pressed <enter>:

Enter OpenID Connect ID Token:eyJ0eXAiOiJKV1QiLCJhbGciOiJSUzI1NiIsIm...-YqOARGFN5Ewcf6YWlw <-------(shortened)

Login successful!

With that, you make sure only you have access to the (virtual) cluster.
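The value pasted at the prompt is an OpenID Connect ID token, which is a JSON Web Token (JWT): three base64url-encoded segments separated by dots. As a small illustration of what the leading `eyJ0eXAi...` characters mean, the following Python sketch decodes the header segment of a token. The helper names and `DEMO_TOKEN` are made up for this example, the demo token is built locally and is not a real DC/OS token, and the sketch does not verify any signature.

```python
import base64
import json


def b64url_decode(segment):
    """Decode a base64url segment, restoring the padding that JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(data):
    """Encode bytes as unpadded base64url, the way JWT segments are written."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def jwt_header(token):
    """Return the decoded header (first segment) of a JWT as a dict."""
    header_segment = token.split(".")[0]
    return json.loads(b64url_decode(header_segment))


# Hypothetical token for illustration only: header.payload.signature
_header = b64url_encode(json.dumps({"typ": "JWT", "alg": "RS256"}, separators=(",", ":")).encode())
_payload = b64url_encode(json.dumps({"sub": "demo-user"}, separators=(",", ":")).encode())
DEMO_TOKEN = f"{_header}.{_payload}.signature-not-checked"

if __name__ == "__main__":
    print(DEMO_TOKEN.split(".")[0])
    print(jwt_header(DEMO_TOKEN))
```

Decoding only reveals what the token claims; whether it is trusted is decided by the cluster, which checks the signature.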

Step 10 (optional): Explore DC/OS and Marathon

With the dcos service command, we can see that Marathon is already running:

Windows> dcos service

NAME HOST ACTIVE TASKS CPU MEM DISK ID

marathon 192.168.65.90 True 0 0.0 0.0 0.0 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-0001

With dcos node we see that two (virtual) nodes are connected (as we might have noticed on the dashboard as well):

Windows> dcos node

HOSTNAME IP ID

192.168.65.111 192.168.65.111 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2

192.168.65.60 192.168.65.60 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S3

The first one is a1, the private agent, and the second one is p1, the public agent.

With dcos node log --leader we can check the Mesos master log:

Windows> dcos node log --leader

dcos-log is not supported

Falling back to files API...

I0309 13:11:45.152153 3217 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45654 with User-Agent='python-requests/2.10.0'

I0309 13:11:47.176911 3214 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45660 with User-Agent='python-requests/2.10.0'

I0309 13:11:48.039836 3214 http.cpp:390] HTTP GET for /master/state from 192.168.65.90:41141 with User-Agent='Mesos-State / Host: m1, Pid: 5258'

I0309 13:11:49.195853 3216 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45666 with User-Agent='python-requests/2.10.0'

I0309 13:11:51.216013 3217 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45672 with User-Agent='python-requests/2.10.0'

I0309 13:11:51.376802 3217 master.cpp:5478] Performing explicit task state reconciliation for 1 tasks of framework 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-0001 (marathon) at scheduler-1a712a58-a49a-4c45-a89a-823b827a49bf@192.168.65.90:15101

I0309 13:11:53.236994 3217 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45678 with User-Agent='python-requests/2.10.0'

I0309 13:11:55.257347 3216 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45684 with User-Agent='python-requests/2.10.0'

I0309 13:11:57.274785 3217 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45690 with User-Agent='python-requests/2.10.0'

I0309 13:11:57.462590 3213 http.cpp:390] HTTP GET for /master/state.json from 192.168.65.90:45704 with User-Agent='Mesos-DNS'
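Most of the master log lines above are periodic HTTP GET requests against the master's state endpoints. If you want a quick overview of which endpoints are polled, a few lines of Python are enough. This is only a sketch: the regular expressions are an assumption derived from the log lines shown above (severity letter, date, time, thread id, source location, message), and the names `LINE_RE`, `GET_RE` and `count_get_paths` are made up for this example.

```python
import re
from collections import Counter

# Assumed line layout, derived from the sample above:
# <severity><MMDD> <time> <thread id> <file>:<line>] <message>
LINE_RE = re.compile(
    r"^(?P<level>[IWEF])(?P<date>\d{4}) (?P<time>[\d:.]+)\s+(?P<thread>\d+) "
    r"(?P<source>[^\]]+)\] (?P<message>.*)$"
)
GET_RE = re.compile(r"HTTP GET for (?P<path>\S+) from")


def count_get_paths(lines):
    """Count HTTP GET requests per endpoint path in Mesos master log lines."""
    counts = Counter()
    for line in lines:
        parsed = LINE_RE.match(line.strip())
        if not parsed:
            continue  # skip lines that are not in the expected log format
        request = GET_RE.search(parsed.group("message"))
        if request:
            counts[request.group("path")] += 1
    return counts


# A few lines copied from the log output above.
SAMPLE_LOG = [
    "I0309 13:11:45.152153 3217 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45654 with User-Agent='python-requests/2.10.0'",
    "I0309 13:11:47.176911 3214 http.cpp:390] HTTP GET for /master/state-summary from 192.168.65.90:45660 with User-Agent='python-requests/2.10.0'",
    "I0309 13:11:48.039836 3214 http.cpp:390] HTTP GET for /master/state from 192.168.65.90:41141 with User-Agent='Mesos-State / Host: m1, Pid: 5258'",
    "I0309 13:11:51.376802 3217 master.cpp:5478] Performing explicit task state reconciliation for 1 tasks of framework 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-0001 (marathon) at scheduler-1a712a58-a49a-4c45-a89a-823b827a49bf@192.168.65.90:15101",
    "I0309 13:11:57.462590 3213 http.cpp:390] HTTP GET for /master/state.json from 192.168.65.90:45704 with User-Agent='Mesos-DNS'",
]

if __name__ == "__main__":
    for path, count in count_get_paths(SAMPLE_LOG).most_common():
        print(f"{count:3d}  {path}")
```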

Finally, dcos help shows the following output:

Windows> dcos help

Description:

The Mesosphere Datacenter Operating System (DC/OS) spans all of the machines in

your datacenter or cloud and treats them as a single, shared set of resources.

Usage:

dcos [options] [<command>] [<args>...]

Options:

--debug

Enable debug mode.

--help

Print usage.

--log-level=<log-level>

Set the logging level. This setting does not affect the output sent to

stdout. The severity levels are:

The severity level:

* debug Prints all messages.

* info Prints informational, warning, error, and critical messages.

* warning Prints warning, error, and critical messages.

* error Prints error and critical messages.

* critical Prints only critical messages to stderr.

--version

Print version information

Environment Variables:

DCOS_CONFIG

Set the path to the DC/OS configuration file. By default, this variable

is set to ~/.dcos/dcos.toml.

DCOS_DEBUG

Indicates whether to print additional debug messages to stdout. By

default this is set to false.

DCOS_LOG_LEVEL

Prints log messages to stderr at or above the level indicated. This is

equivalent to the --log-level command-line option.

You can also check the CLI documentation.

Step 11: Deploy a Hello World Service per GUI

If you follow steps 11 and 12, you will see in step 13 that the default networking settings are sub-optimal. If you wish to create a hello service with improved networking, including load balancing, you can skip steps 11 to 13 and continue with step 14.

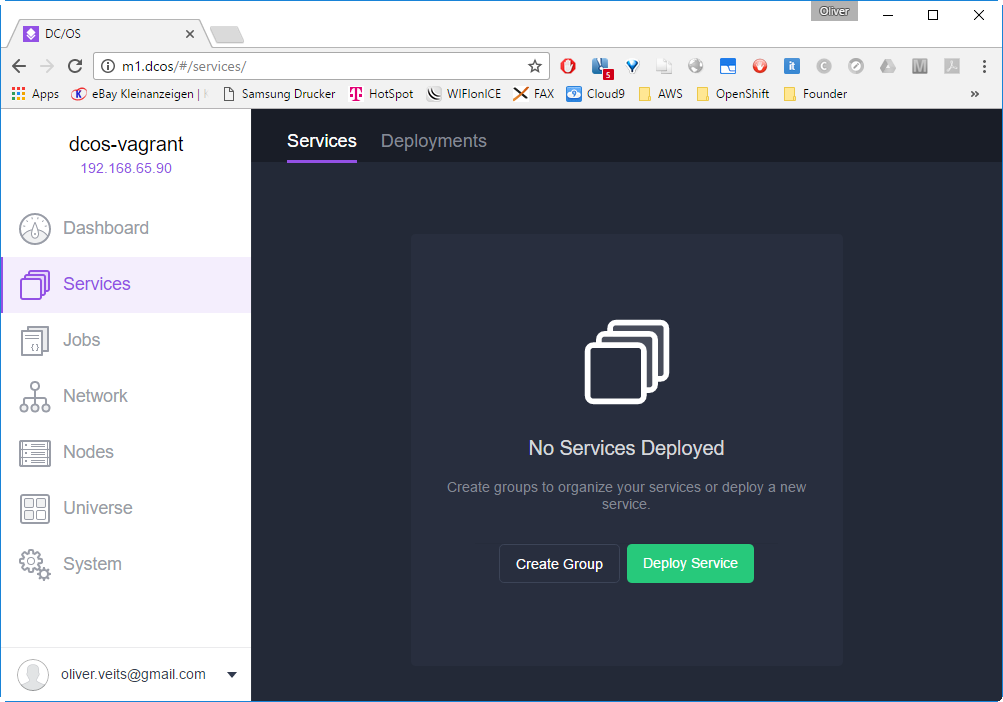

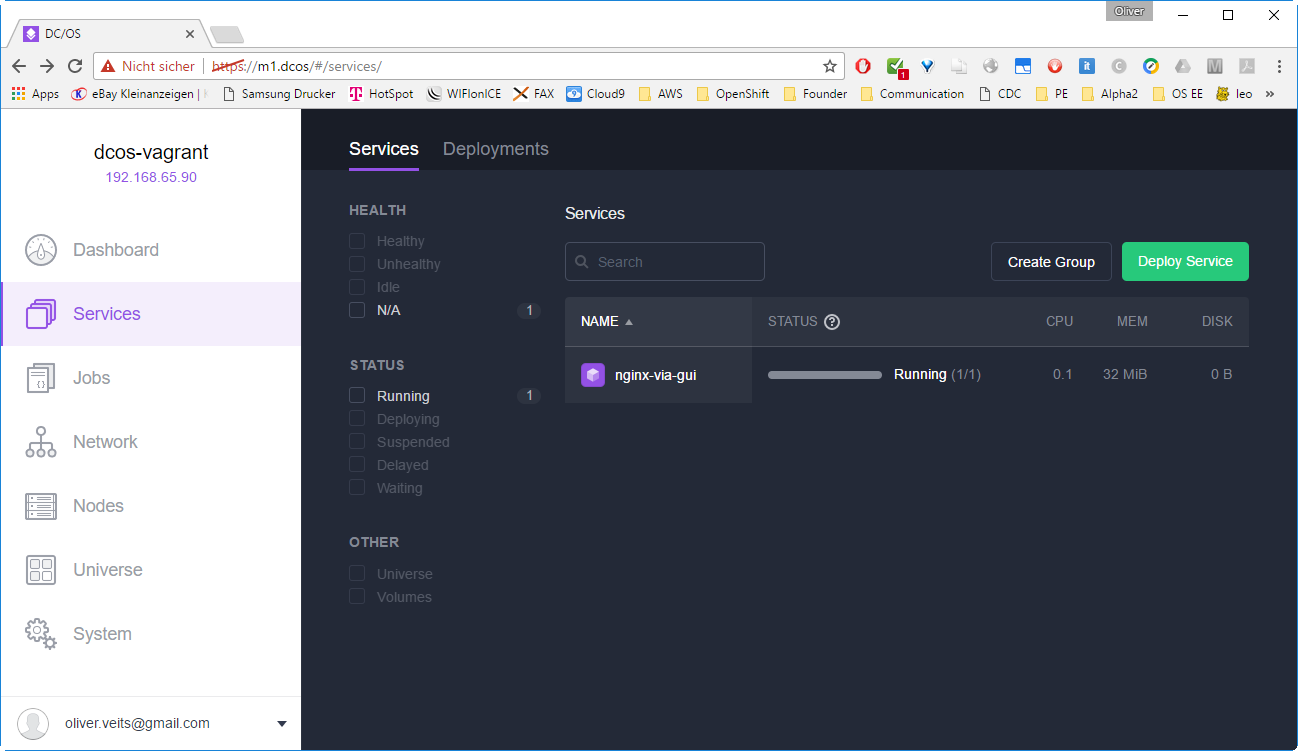

Now we will create a Hello World Service. For that, log into DC/OS, if not done already, and navigate to Services:

->

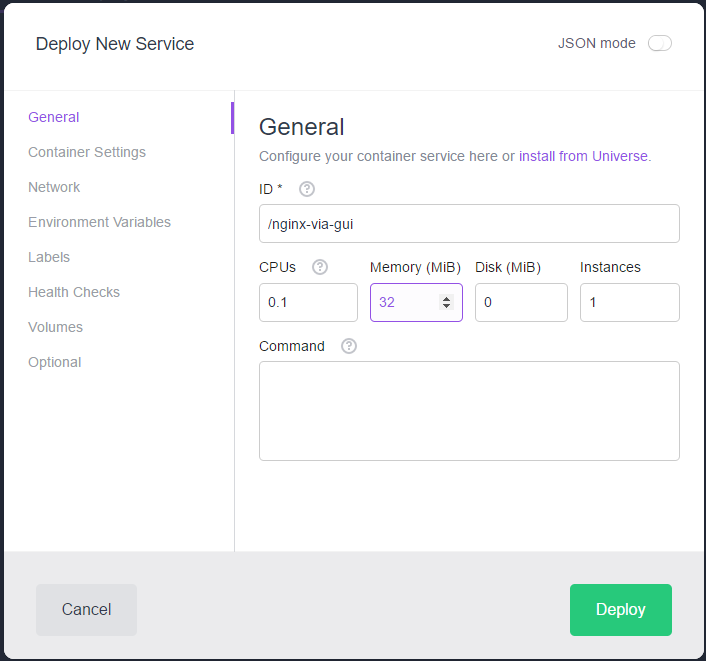

->

Here we have chosen only 0.1 CPU, since Mesos is quite strict about resource reservations: the sum of the CPUs reserved for the applications cannot exceed the number you have at hand, even if the applications do not really need the resources. This is what we have seen in my previous Mesos blog post, where we deployed hello world applications that only printed „Hello World“ once a second, each with a reservation of one CPU. With two CPUs available, I could not start more than two such hello world applications.
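The reservation arithmetic described above can be sketched in a few lines of Python. This is a deliberately simplified model and not how Mesos computes offers: it assumes that CPU is the only limiting resource, that no other service has reserved any CPU, and that all CPUs sit in one pool (in a real cluster each instance must fit on a single agent). The function name `max_instances` is made up for this example.

```python
from decimal import Decimal


def max_instances(total_cpus, cpus_per_instance):
    """Upper bound on instances when only the CPU reservation is limiting.

    Decimal is used instead of float so that fractional reservations
    such as 0.1 divide without rounding surprises.
    """
    total = Decimal(str(total_cpus))
    per_instance = Decimal(str(cpus_per_instance))
    if per_instance <= 0:
        raise ValueError("cpus_per_instance must be positive")
    return int(total // per_instance)


if __name__ == "__main__":
    # Two CPUs with a reservation of one CPU each, as in the earlier blog post.
    print(max_instances(2, 1))
    # The same two CPUs with the 0.1 CPU reservation chosen here.
    print(max_instances(2, 0.1))
```

With a reservation of one CPU per instance, two CPUs are exhausted after two instances, which matches the observation from the earlier post; a reservation of 0.1 CPU leaves far more headroom.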

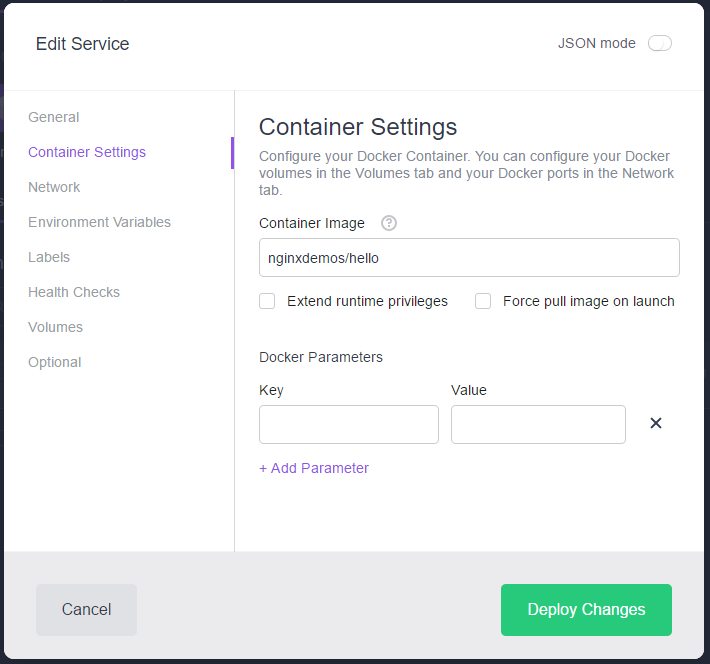

Let us deploy a container from the image nginxdemos/hello:

->

->

Now the Service is getting deployed:

Step 12: Connect to the NginX Service

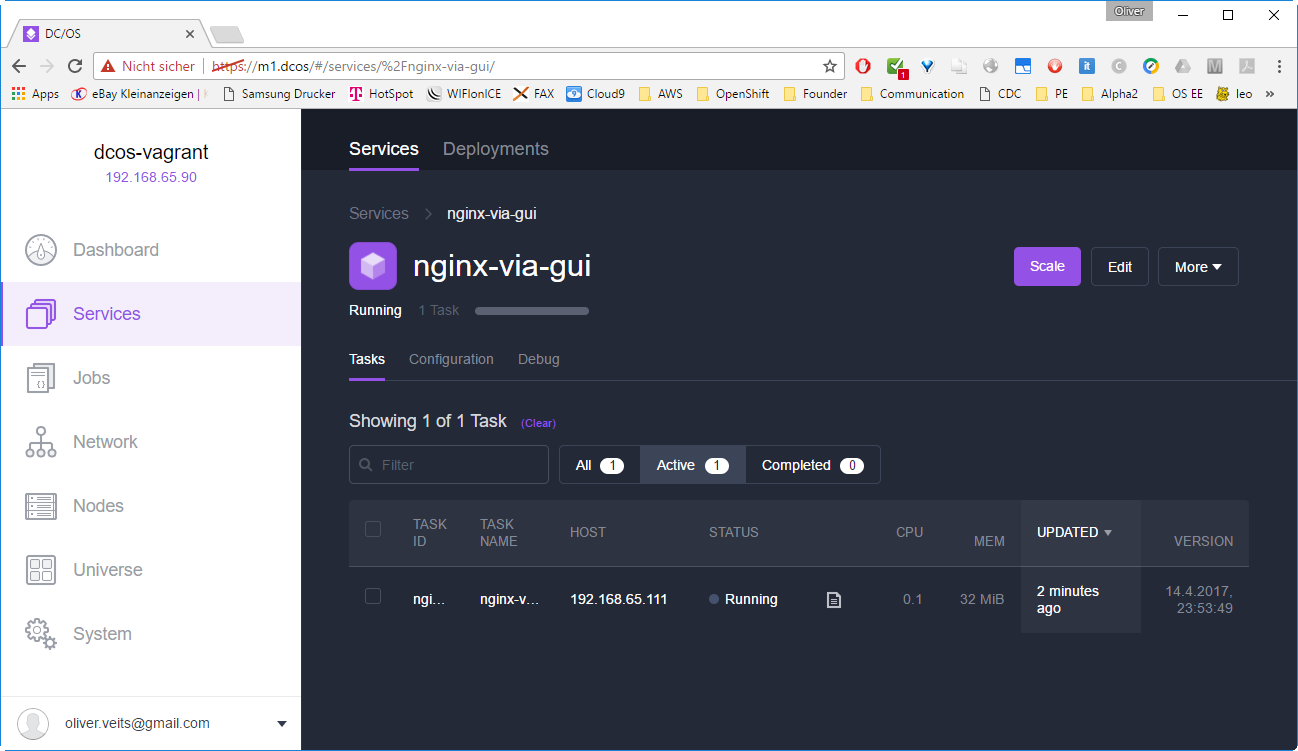

When we click on the nginx-via-gui service name, we will see that the service is running on the private Mesos agent a1 on 192.168.65.111:

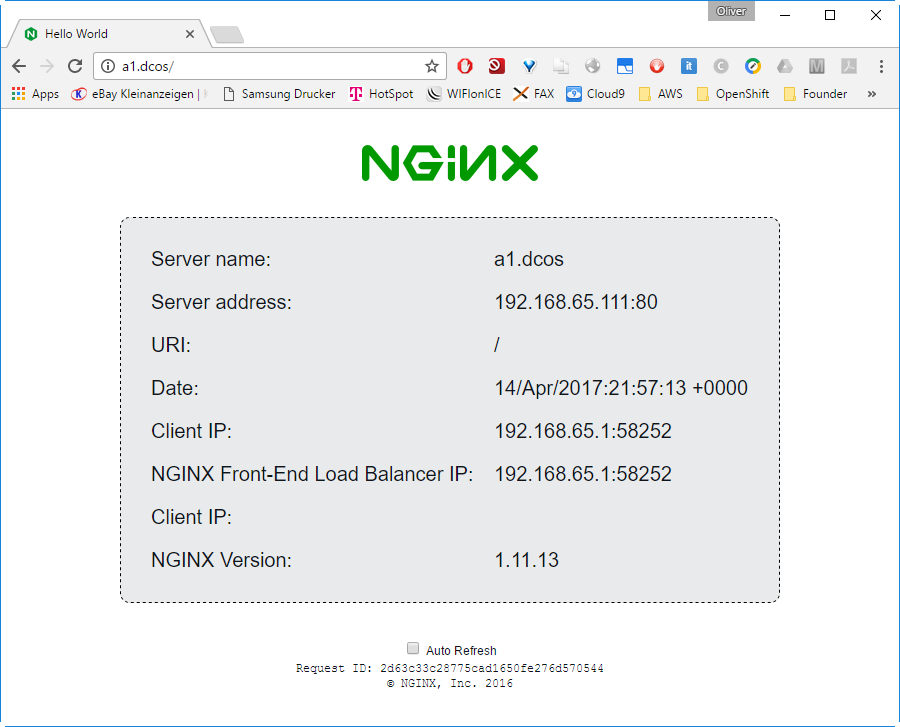

We can directly access the service by entering the private agent’s IP address 192.168.65.111 or its name a1.dcos in the browser’s URL field:

Here we can see that we have a quite simple networking model: the Windows host uses IP address 192.168.65.1 to reach the server on 192.168.65.111, which is the private Mesos agent’s IP address. The NginX container is just sharing the private agent’s network interface.

Because of the simple networking model, that was easier than expected:

- In other situations, you often need to configure port forwarding on the VirtualBox VM, but not this time: the Mesos agent is configured with a secondary Ethernet interface with host networking, which allows us to connect from the VirtualBox host to any port of the private agent without VirtualBox port forwarding.

- In other situations, you often need to configure a port mapping between the Docker container and the Docker host (the Mesos agent in this case). Why not this time? Let us explore this in more detail in the next optional step.

Step 13 (optional): Explore the Default Mesos Networking

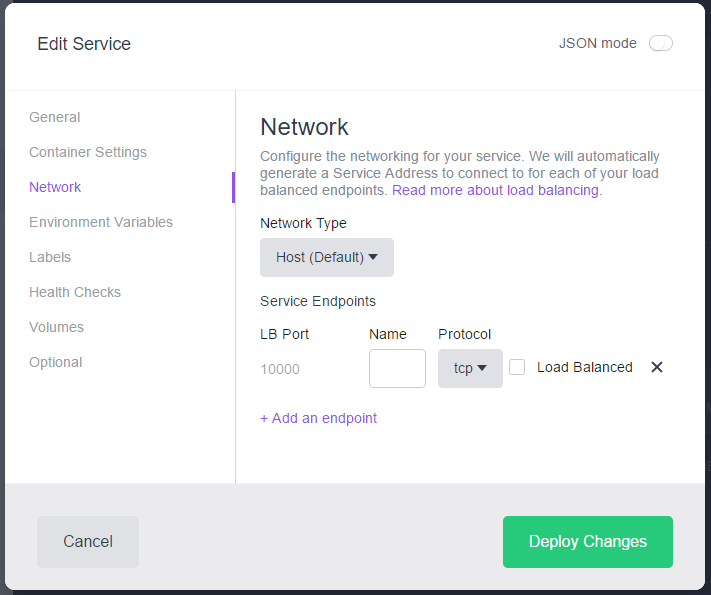

While deploying the service, we have not reviewed the network tab yet. However, we can do this now by clicking on the service, then „Edit“ and then „Network“:

The default network setting is „Host“ networking, which means that the container shares the host’s network interface directly. The image we have chosen exposes port 80. This is why we can reach the service by entering the host’s name or IP address with port 80 into the URL field of the browser.

Since the container is re-using the Docker host’s network interface, a port mapping is not needed, as we can confirm with a docker ps command:

(Vagranthost)$ vagrant ssh a1

...

(a1)$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cd5a068aaa28 oveits/docker-nginx-busybox "/usr/sbin/nginx" 39 minutes ago Up 39 minutes mesos-1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2.39067bbf-c4b6-448b-9eb9-975c050bcf57

Here we cannot see any port mapping (the PORTS field is empty).

Note that the default network configuration does not allow us to scale the service: port 80 is already occupied.

Let us confirm this assumption by trying to scale the NginX service to two containers:

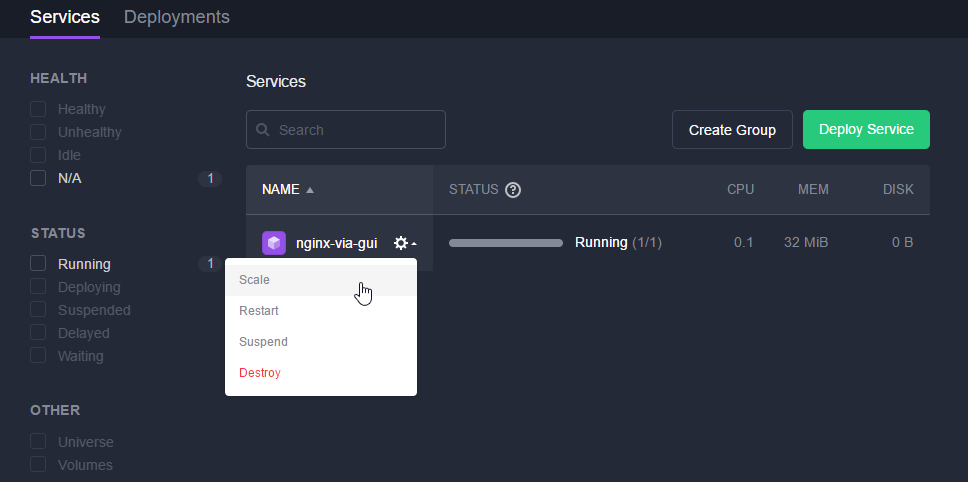

On Services -> Drop-down list right of name -> Scale

->choose 2 instances:

->

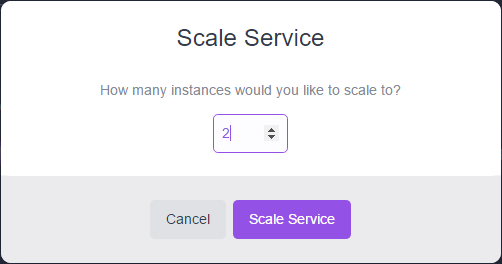

Now the service continually tries to start the second container and the status is toggling between Waiting, Running and Delayed:


As expected, the second docker container cannot start, because port 80 is already occupied on the docker host. The error log shows:

I0324 11:23:01.820436 7765 exec.cpp:161] Version: 1.0.3

I0324 11:23:01.825763 7769 exec.cpp:236] Executor registered on agent 1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2

I0324 11:23:01.827263 7772 docker.cpp:815] Running docker -H unix:///var/run/docker.sock run --cpu-shares 102 --memory 33554432 -e MARATHON_APP_VERSION=2017-03-24T18:18:00.202Z -e HOST=192.168.65.111 -e MARATHON_APP_RESOURCE_CPUS=0.1 -e MARATHON_APP_RESOURCE_GPUS=0 -e MARATHON_APP_DOCKER_IMAGE=oveits/docker-nginx-busybox -e PORT_10000=10298 -e MESOS_TASK_ID=nginx.ea26c7af-10be-11e7-9134-70b3d5800001 -e PORT=10298 -e MARATHON_APP_RESOURCE_MEM=32.0 -e PORTS=10298 -e MARATHON_APP_RESOURCE_DISK=2.0 -e MARATHON_APP_LABELS= -e MARATHON_APP_ID=/nginx -e PORT0=10298 -e LIBPROCESS_IP=192.168.65.111 -e MESOS_SANDBOX=/mnt/mesos/sandbox -e MESOS_CONTAINER_NAME=mesos-1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2.f752b208-f7d1-49d6-8cdd-cbb62eaf4768 -v /var/lib/mesos/slave/slaves/1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2/frameworks/1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-0001/executors/nginx.ea26c7af-10be-11e7-9134-70b3d5800001/runs/f752b208-f7d1-49d6-8cdd-cbb62eaf4768:/mnt/mesos/sandbox --net host --name mesos-1d3a11d0-1c3e-4ec2-8485-d1a3aa43c465-S2.f752b208-f7d1-49d6-8cdd-cbb62eaf4768 oveits/docker-nginx-busybox

nginx: [alert] could not open error log file: open() "/var/log/nginx/error.log" failed (2: No such file or directory)

2017/03/24 18:23:01 [emerg] 1#0: bind() to 0.0.0.0:80 failed (98: Address in use)

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)

2017/03/24 18:23:01 [notice] 1#0: try again to bind() after 500ms

2017/03/24 18:23:01 [emerg] 1#0: bind() to 0.0.0.0:80 failed (98: Address in use)

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)

2017/03/24 18:23:01 [notice] 1#0: try again to bind() after 500ms

2017/03/24 18:23:01 [emerg] 1#0: bind() to 0.0.0.0:80 failed (98: Address in use)

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)

2017/03/24 18:23:01 [notice] 1#0: try again to bind() after 500ms

2017/03/24 18:23:01 [emerg] 1#0: bind() to 0.0.0.0:80 failed (98: Address in use)

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)

2017/03/24 18:23:01 [notice] 1#0: try again to bind() after 500ms

2017/03/24 18:23:01 [emerg] 1#0: bind() to 0.0.0.0:80 failed (98: Address in use)

nginx: [emerg] bind() to 0.0.0.0:80 failed (98: Address in use)

2017/03/24 18:23:01 [notice] 1#0: try again to bind() after 500ms

2017/03/24 18:23:01 [emerg] 1#0: still could not bind()

nginx: [emerg] still could not bind()
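The „Address in use“ error is not specific to NginX or Docker: with host networking, both containers try to bind the same port on the same network interface, and the operating system rejects the second attempt. The following Python sketch reproduces the effect locally with two plain TCP sockets. It is only an illustration under assumptions: it uses the loopback address and a free port picked by the operating system instead of port 80, and the function name `try_double_bind` is made up for this example.

```python
import errno
import socket


def try_double_bind(host="127.0.0.1"):
    """Bind two sockets to the same address and port.

    Returns (port, error_number), where error_number is the errno of the
    second bind() attempt, or None if the second bind unexpectedly succeeded.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))  # port 0: let the OS pick a free port
        first.listen(1)
        port = first.getsockname()[1]
        try:
            second.bind((host, port))  # same port again, like a second container
        except OSError as exc:
            return port, exc.errno
        return port, None
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    port, error_number = try_double_bind()
    if error_number == errno.EADDRINUSE:
        print(f"second bind to port {port} failed: address in use")
    else:
        print(f"second bind to port {port} returned errno {error_number}")
```

This is why a second instance on the same agent cannot start as long as both instances insist on the same host port.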

This is not a good configuration. Can we choose a different type of networking at the time we start the service? Let us follow Step 14 to create the same service, but now in a scalable and load-balanced fashion:

Step 14: Deploy a Hello World Service per JSON with improved Networking and Load-Balancing

Step 14.1: Install Marathon Load Balancer

Step 14.1.1: Check, if Marathon LB is already installed

At the moment, the Marathon Load Balancer is not installed. This can be checked with the following DC/OS CLI command:

(DCOS CLI Client)$ dcos package list

There are currently no installed packages. Please use `dcos package install` to install a package.

Step 14.1.2 (optional): Check Options of Marathon Package

Let us install the Marathon Load Balancer by following the version 1.8 documentation. First, we will have a look at the package configuration options (optional):

(DCOS CLI Client)$ dcos package describe --config marathon-lb

{

"$schema": "http://json-schema.org/schema#",

"properties": {

"marathon-lb": {

"properties": {

"auto-assign-service-ports": {

"default": false,

"description": "Auto assign service ports for tasks which use IP-per-task. See https://github.com/mesosphere/marathon-lb#mesos-with-ip-per-task-support for details.",

"type": "boolean"

},

"bind-http-https": {

"default": true,

"description": "Reserve ports 80 and 443 for the LB. Use this if you intend to use virtual hosts.",

"type": "boolean"

},

"cpus": {

"default": 2,

"description": "CPU shares to allocate to each marathon-lb instance.",

"minimum": 1,

"type": "number"

},

"haproxy-group": {

"default": "external",

"description": "HAProxy group parameter. Matches with HAPROXY_GROUP in the app labels.",

"type": "string"

},

"haproxy-map": {

"default": true,

"description": "Enable HAProxy VHost maps for fast VHost routing.",

"type": "boolean"

},

"haproxy_global_default_options": {

"default": "redispatch,http-server-close,dontlognull",

"description": "Default global options for HAProxy.",

"type": "string"

},

"instances": {

"default": 1,

"description": "Number of instances to run.",

"minimum": 1,

"type": "integer"

},

"marathon-uri": {

"default": "http://marathon.mesos:8080",

"description": "URI of Marathon instance",

"type": "string"

},

"maximumOverCapacity": {

"default": 0.2,

"description": "Maximum over capacity.",

"minimum": 0,

"type": "number"

},

"mem": {

"default": 1024.0,

"description": "Memory (MB) to allocate to each marathon-lb task.",

"minimum": 256.0,

"type": "number"

},

"minimumHealthCapacity": {

"default": 0.5,

"description": "Minimum health capacity.",

"minimum": 0,

"type": "number"

},

"name": {

"default": "marathon-lb",

"description": "Name for this LB instance",

"type": "string"

},

"role": {

"default": "slave_public",

"description": "Deploy marathon-lb only on nodes with this role.",

"type": "string"

},

"secret_name": {

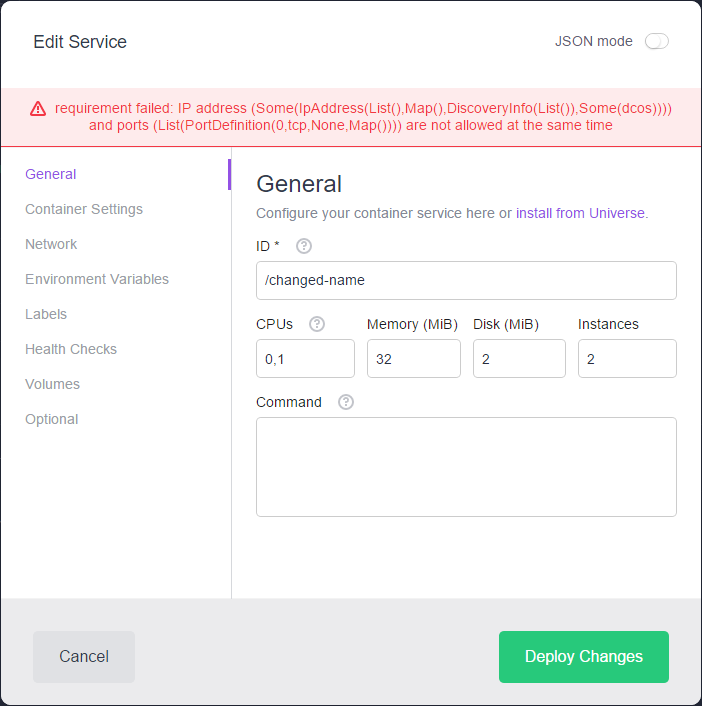

"default": "",

"description": "Name of the Secret Store credentials to use for DC/OS service authentication. This should be left empty unless service authentication is needed.",

"type": "string"

},

"ssl-cert": {

"description": "TLS Cert and private key for HTTPS.",

"type": "string"

},

"strict-mode": {

"default": false,

"description": "Enable strict mode. This requires that you explicitly enable each backend with `HAPROXY_{n}_ENABLED=true`.",

"type": "boolean"

},

"sysctl-params": {

"default": "net.ipv4.tcp_tw_reuse=1 net.ipv4.tcp_fin_timeout=30 net.ipv4.tcp_max_syn_backlog=10240 net.ipv4.tcp_max_tw_buckets=400000 net.ipv4.tcp_max_orphans=60000 net.core.somaxconn=10000",

"description": "sysctl params to set at startup for HAProxy.",

"type": "string"

},

"template-url": {

"default": "",

"description": "URL to tarball containing a directory templates/ to customize haproxy config.",

"type": "string"

}

},

"required": [

"cpus",

"mem",

"haproxy-group",

"instances",

"name"

],

"type": "object"

}

},

"type": "object"

}
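In this tutorial we keep the defaults, but on a small test machine you might want to give the load balancer fewer resources than the default 2 CPUs and 1024 MB. Below is a minimal sketch of how an options file could be prepared, assuming that the options are nested under the "marathon-lb" key exactly as in the schema above. The function name and the chosen override values are made up for this example; the minimum values are copied from the schema output.

```python
import json

# Minimum values copied from the schema output of
# "dcos package describe --config marathon-lb" shown above.
SCHEMA_MINIMUMS = {"cpus": 1, "mem": 256.0, "instances": 1}


def build_options(overrides: dict) -> dict:
    """Check overrides against the schema minimums and wrap them for the package."""
    for key, minimum in SCHEMA_MINIMUMS.items():
        if key in overrides and overrides[key] < minimum:
            raise ValueError(
                f"{key}={overrides[key]} is below the schema minimum {minimum}"
            )
    # The schema nests all options under the "marathon-lb" property.
    return {"marathon-lb": dict(overrides)}


if __name__ == "__main__":
    # Example override values (an assumption, not taken from the tutorial).
    print(json.dumps(build_options({"cpus": 1, "mem": 512}), indent=2))
```

If you save the printed JSON to a file, it should be possible to pass it to the installer with `dcos package install --options=<file> marathon-lb`; I have not tested non-default options in this tutorial.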

Step 14.1.3: Install and Check Marathon Load Balancer

We now install the marathon-lb package. We will keep the default configuration:

$ dcos package install marathon-lb
We recommend at least 2 CPUs and 1GiB of RAM for each Marathon-LB instance.
*NOTE*: ```Enterprise Edition``` DC/OS requires setting up the Service Account in all security modes.
Follow these instructions to setup a Service Account: https://docs.mesosphere.com/administration/id-and-access-mgt/service-auth/mlb-auth/
Continue installing? [yes/no] yes
Installing Marathon app for package [marathon-lb] version [1.5.1]
Marathon-lb DC/OS Service has been successfully installed!
See https://github.com/mesosphere/marathon-lb for documentation.

Now let us check that the package is installed:

$ dcos package list
NAME         VERSION  APP           COMMAND  DESCRIPTION
marathon-lb  1.5.1    /marathon-lb  ---      HAProxy configured using Marathon state

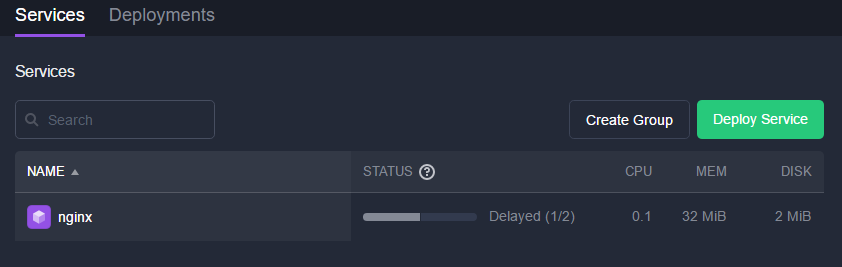

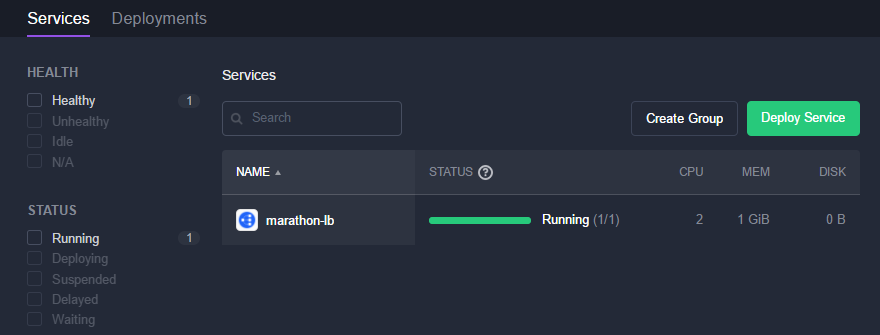

We are able to see the load balancer service on the GUI as well:

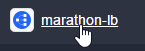

After clicking on the marathon-lb service and the container and scrolling down (see note), we see that the load balancer is serving the ports 80, 443, 9090, 9091, and 10000 to 10100. We will use one of the high ports soon.

Note: scrolling is a little bit tricky at the moment. You might need to resize the browser view with Ctrl+minus or Ctrl+plus to see the scroll bar on the right. Another possibility is to click into the black part of the browser page and use the arrow keys thereafter.

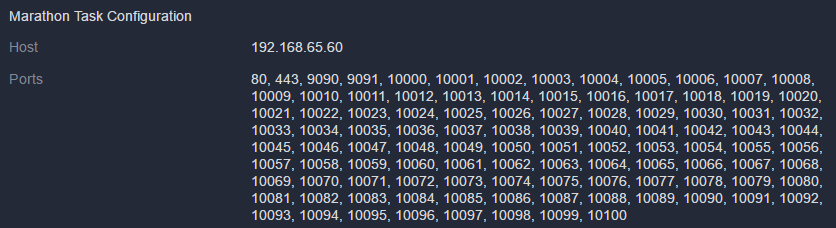

Port 9090 is used by the load balancer admin interface. We can see the statistics there:

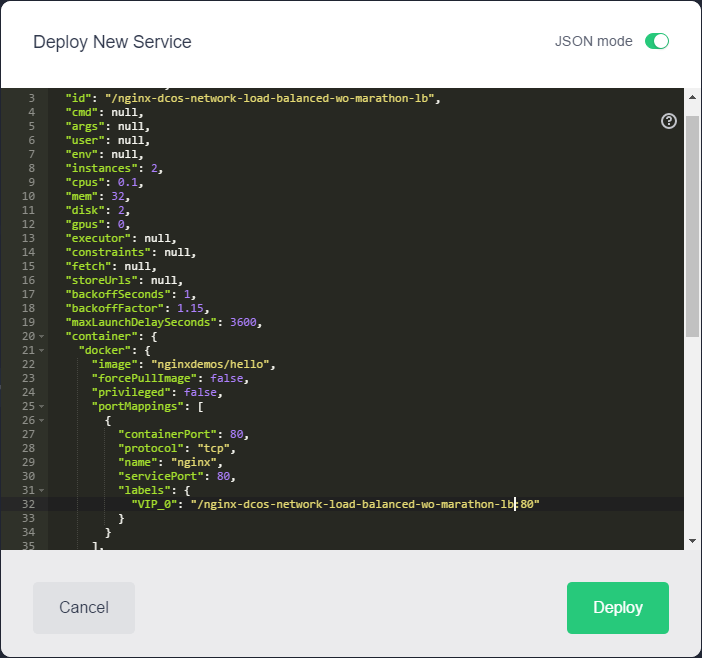
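The statistics page can also be read by a script. As far as I know, marathon-lb exposes the HAProxy statistics in CSV form under /haproxy?stats;csv on port 9090; treat that URL as an assumption and verify it in your environment. The sketch below only parses such a CSV text and lists the status per backend server. The sample data is shortened and made up for this example (real HAProxy output has many more columns).

```python
import csv
import io
from typing import Dict, List

# Shortened, made-up sample in the shape of HAProxy's CSV export.
SAMPLE_CSV = (
    "# pxname,svname,scur,status,\n"
    "nginx-hostname_10006,FRONTEND,0,OPEN,\n"
    "nginx-hostname_10006,192_168_65_111_10298,0,UP,\n"
    "nginx-hostname_10006,192_168_65_111_10299,0,DOWN,\n"
    "nginx-hostname_10006,BACKEND,0,UP,\n"
)


def parse_haproxy_csv(text: str) -> List[Dict[str, str]]:
    """Parse HAProxy's CSV statistics into a list of row dictionaries."""
    cleaned = text.lstrip()
    # The header line starts with "# "; strip it so the column names are clean.
    if cleaned.startswith("# "):
        cleaned = cleaned[2:]
    return list(csv.DictReader(io.StringIO(cleaned)))


def server_status(rows: List[Dict[str, str]], proxy_name: str) -> Dict[str, str]:
    """Map each backend server of one proxy to its status, skipping summary rows."""
    return {
        row["svname"]: row["status"]
        for row in rows
        if row["pxname"] == proxy_name
        and row["svname"] not in ("FRONTEND", "BACKEND")
    }


if __name__ == "__main__":
    for server, status in server_status(
        parse_haproxy_csv(SAMPLE_CSV), "nginx-hostname_10006"
    ).items():
        print(server, status)
```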

Step 14.2: Create an Application using Marathon Load Balancer

Now let us follow these instructions to add a service that makes use of the Marathon Load Balancer:

Step 14.2.1: Define the Application’s Configuration File

Save the following file content as nginx-hostname-app.json:

{
  "id": "nginx-hostname",
  "container": {
    "type": "DOCKER",
    "docker": {
      "image": "nginxdemos/hello",
      "network": "BRIDGE",
      "portMappings": [
        { "hostPort": 0, "containerPort": 80, "servicePort": 10006 }
      ]
    }
  },
  "instances": 3,
  "cpus": 0.25,
  "mem": 100,
  "healthChecks": [{
    "protocol": "HTTP",
    "path": "/",
    "portIndex": 0,
    "timeoutSeconds": 2,
    "gracePeriodSeconds": 15,
    "intervalSeconds": 3,
    "maxConsecutiveFailures": 2
  }],
  "labels":{
    "HAPROXY_DEPLOYMENT_GROUP":"nginx-hostname",
    "HAPROXY_DEPLOYMENT_ALT_PORT":"10007",
    "HAPROXY_GROUP":"external",
    "HAPROXY_0_REDIRECT_TO_HTTPS":"true",
    "HAPROXY_0_VHOST": "192.168.65.111"
  }
}

If you are running in an environment other than the one we have created using Vagrant, you might need to adapt the IP address: replace 192.168.65.111 in the HAPROXY_0_VHOST label with your public agent’s IP address.
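If you need the same definition for several environments, the JSON file can be generated instead of edited by hand. The following is a minimal sketch that rebuilds the definition above with the service port, the alternative deployment port and the virtual host as parameters. The function and parameter names are made up for this example; the keys and values are the ones from nginx-hostname-app.json.

```python
import json


def build_app_definition(app_id: str, service_port: int, alt_port: int,
                         vhost: str, instances: int = 3) -> dict:
    """Return a Marathon app definition mirroring nginx-hostname-app.json."""
    return {
        "id": app_id,
        "container": {
            "type": "DOCKER",
            "docker": {
                "image": "nginxdemos/hello",
                "network": "BRIDGE",
                "portMappings": [
                    # hostPort 0 lets Marathon pick a free port on the agent;
                    # servicePort is the port served by the load balancer.
                    {"hostPort": 0, "containerPort": 80,
                     "servicePort": service_port}
                ],
            },
        },
        "instances": instances,
        "cpus": 0.25,
        "mem": 100,
        "healthChecks": [{
            "protocol": "HTTP",
            "path": "/",
            "portIndex": 0,
            "timeoutSeconds": 2,
            "gracePeriodSeconds": 15,
            "intervalSeconds": 3,
            "maxConsecutiveFailures": 2,
        }],
        "labels": {
            "HAPROXY_DEPLOYMENT_GROUP": app_id,
            "HAPROXY_DEPLOYMENT_ALT_PORT": str(alt_port),
            "HAPROXY_GROUP": "external",
            "HAPROXY_0_REDIRECT_TO_HTTPS": "true",
            "HAPROXY_0_VHOST": vhost,
        },
    }


if __name__ == "__main__":
    app = build_app_definition("nginx-hostname", 10006, 10007, "192.168.65.111")
    print(json.dumps(app, indent=2))
```

The service port has to lie within the range served by the load balancer (10000 to 10100 in our installation), and the HAPROXY_GROUP label has to match the haproxy-group option of marathon-lb, which defaults to "external".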

Step 14.2.2 Create Service using DCOS CLI

Now create the Marathon app using the DCOS CLI. I have not added dcos.exe to my PATH variable yet, so I had to cd into the folder containing dcos.exe first („D:\veits\downloads\DCOS CLI\dcos.exe“ in my case):

$ cd <folder_containing_dcos.exe> # needed, if dcos.exe is not in your PATH
$ dcos marathon app add full_path_to_nginx-hostname-app.json
Created deployment 63bac617-792c-488e-8489-80428b1c1e34
$ dcos marathon app list
ID               MEM   CPUS  TASKS  HEALTH  DEPLOYMENT  WAITING  CONTAINER  CMD
/marathon-lb     1024  2     1/1    1/1     ---         False    DOCKER     ['sse', '-m', 'http://marathon.mesos:8080', '--health-check', '--haproxy-map', '--group', 'external']
/nginx-hostname  100   0.25  3/3    3/3     ---         False    DOCKER     None
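The TASKS and HEALTH columns (3/3) tell us that all three instances are running and healthy. If you want to check this in a script, here is a minimal sketch. It assumes that you have the app's JSON at hand, for example from `dcos marathon app show nginx-hostname` or from Marathon's /v2/apps REST endpoint, and that it contains the counters instances, tasksRunning and tasksHealthy, which is what I would expect from Marathon. The function name is made up for this example.

```python
from typing import Any, Dict


def deployment_ready(app: Dict[str, Any]) -> bool:
    """Return True when every requested instance is running and healthy."""
    wanted = app["instances"]
    return (
        app.get("tasksRunning", 0) == wanted
        and app.get("tasksHealthy", 0) == wanted
    )


if __name__ == "__main__":
    # Counters corresponding to the "3/3" columns in the listing above.
    sample = {"id": "/nginx-hostname", "instances": 3,
              "tasksRunning": 3, "tasksHealthy": 3}
    print("ready:", deployment_ready(sample))
```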

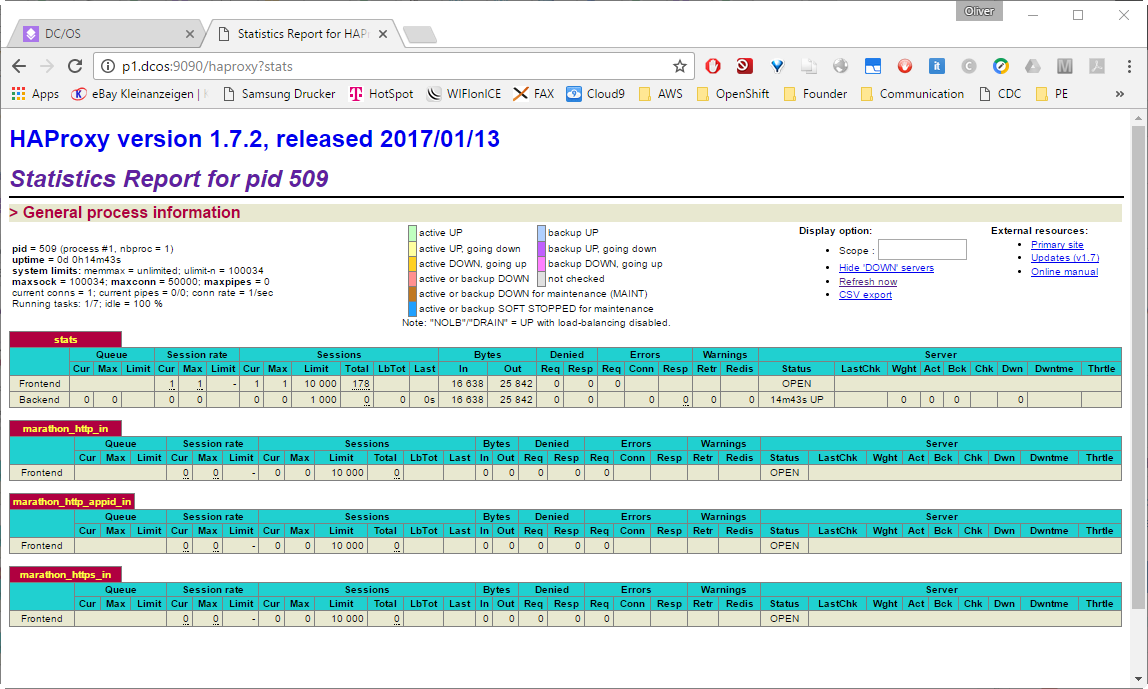

On the GUI, under Service we find:

After clicking on the service name nginx-hostname, we see more details on the three healthy containers that have been started:

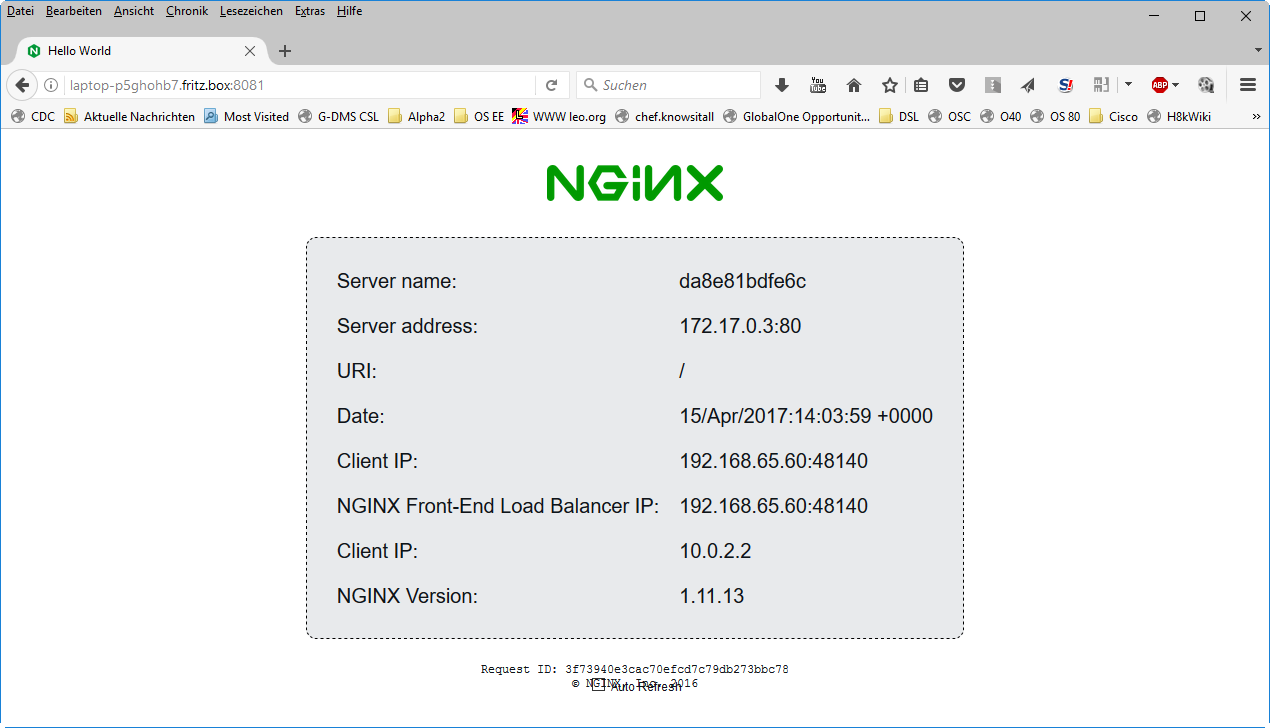

Now, the service is reachable via curl from within the Mesos network (testing on the private agent a1):

(a1)$ curl http://marathon-lb.marathon.mesos:10006

But can we reach it from outside? Yes: marathon-lb.marathon.mesos is mapped to the public agent’s (p1) address 192.168.65.60 and we can reach http://192.168.65.60:10006 from the inside …

(a1)$ curl http://192.168.65.60:10006

…as well as from the outside:

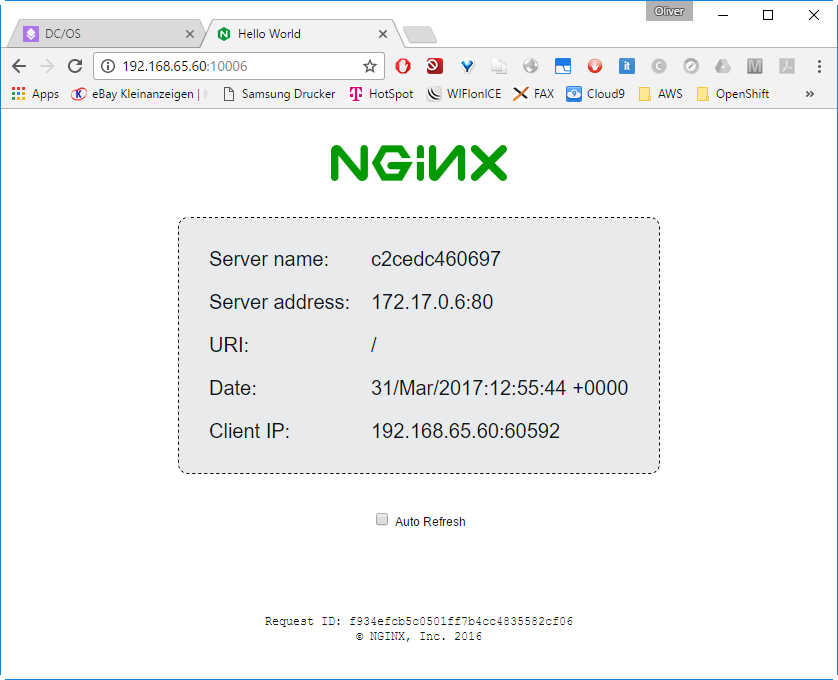

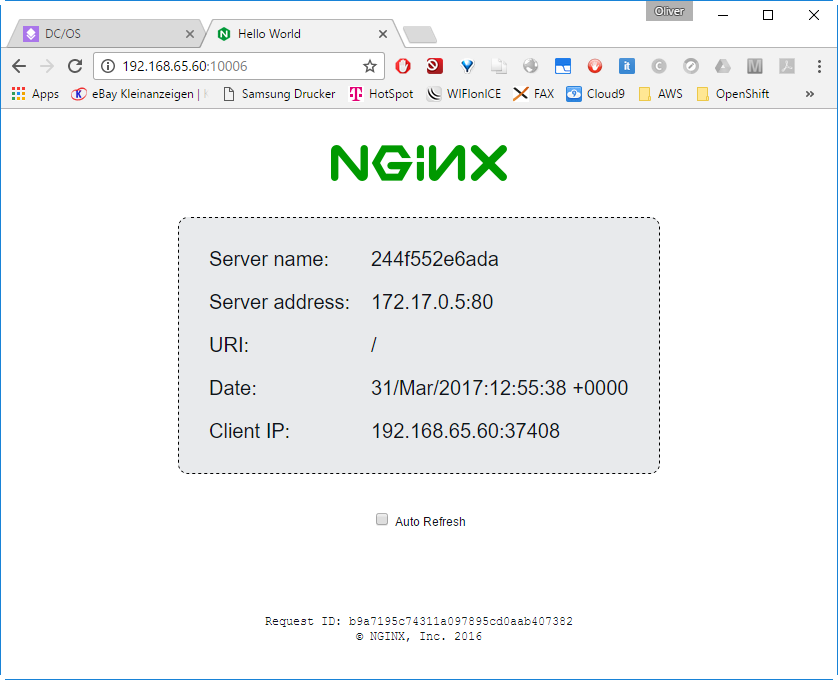

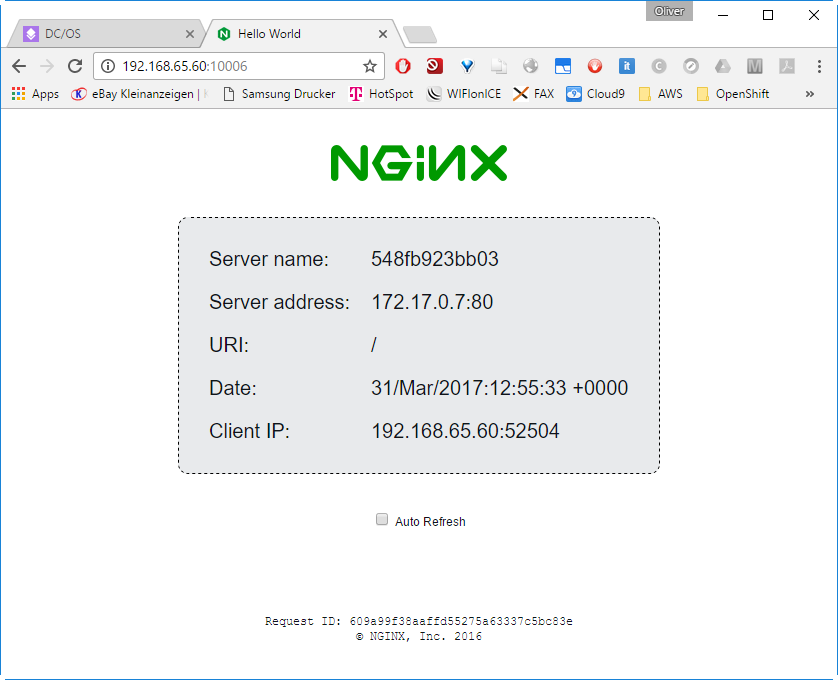

The image we have chosen will return the server name (i.e. the container ID), the server address and port as seen by the server (172.17.0.x with port 80), the called URI (root), the date and the client IP address and port.

When reloading the page via the browser’s reload button, the answering container will change randomly:

This proves that the requests are load-balanced between the three NginX containers and that the service can be reached from the machine hosting the public agent VirtualBox VM. In the next step, we will make sure that the NginX service can be reached from any machine in your local area network.

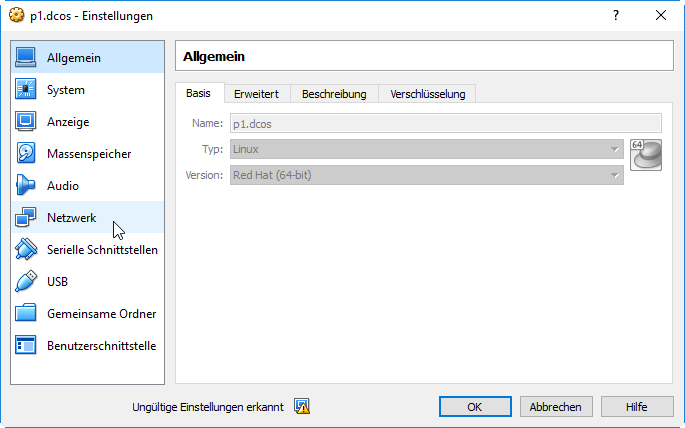

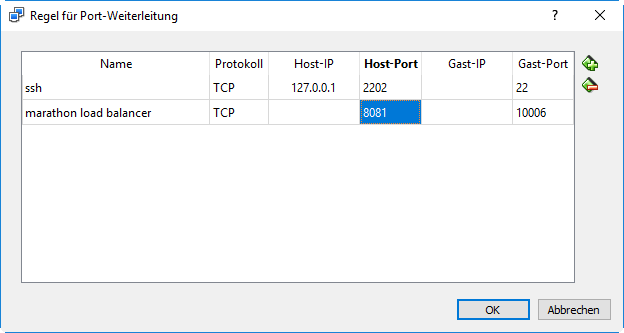
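Instead of pressing the reload button many times, the distribution of the answers can be counted by a small script. The following is a minimal sketch: it calls a fetch function several times, extracts the server name from each answer with a regular expression and counts how often each container has answered. The function names are made up, and the regular expression in the usage example is only a guess at the page content; adapt it to what the nginxdemos/hello page returns in your environment.

```python
import re
from collections import Counter
from typing import Callable
from urllib.request import urlopen


def fetch_body(url: str, timeout: float = 5.0) -> str:
    """Fetch a URL and return the response body as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def tally_servers(fetch: Callable[[], str], attempts: int, pattern: str) -> Counter:
    """Call fetch() repeatedly and count the first regex group of each answer."""
    regex = re.compile(pattern)
    counts: Counter = Counter()
    for _ in range(attempts):
        match = regex.search(fetch())
        counts[match.group(1) if match else "<no match>"] += 1
    return counts


if __name__ == "__main__":
    url = "http://192.168.65.60:10006"  # public agent address used above
    # Hypothetical pattern: adapt it to the actual page content.
    result = tally_servers(lambda: fetch_body(url), 30, r"Server name:\s*(\w+)")
    for server, hits in result.items():
        print(server, hits)
```

With three healthy containers, we would expect three different server names, each with a share of the requests.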

Step 15: Reaching the Server from the outside World

In case of a physical machine as public agent, the service will be reachable from the local area network (LAN) already. However, in our case, the public agent p1 is a VirtualBox VM using host-only networks. Since VirtualBox host-only networks are only reachable from the VirtualBox host, an additional step has to be taken if the service is to be reachable from outside.

Note that the outside interface of the HAProxy on the public agent p1 is attached to the VirtualBox host-only network 192.168.65.0/24. So, if you want to reach the service from the local area network, an additional mapping from an outside interface of the VirtualBox host to port 10006 on p1 is needed.

For that, choose

-> VirtualBox GUI

-> p1.dcos

-> Edit

-> Network

Then

-> Adapter1

-> Port Forwarding

-> Add (+)

-> choose a name and map a host port to the port 10006 we have used in the JSON file above:

-> OK

In this example you will be able to reach the service via any reachable IP address of the VirtualBox host on port 8081:

With that, the service is reachable from any machine in the local area network.
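The same port forwarding rule can presumably be created on the command line with VBoxManage instead of the GUI. The sketch below only builds the command, so you can inspect it before running it. It assumes that the VM is named p1.dcos in VirtualBox (as shown in the GUI), that adapter 1 is the NAT adapter, and that host port 8081 is free; the rule name and the function name are made up for this example.

```python
from typing import List


def port_forward_command(vm_name: str, rule_name: str, host_port: int,
                         guest_port: int, running: bool = True) -> List[str]:
    """Build a VBoxManage command that adds a TCP NAT port forwarding rule.

    Rule format: <name>,tcp,<host ip>,<host port>,<guest ip>,<guest port>.
    Empty IP fields mean "any host interface" and "the guest's NAT address".
    """
    rule = f"{rule_name},tcp,,{host_port},,{guest_port}"
    if running:
        # controlvm changes the rule set of a running VM
        return ["VBoxManage", "controlvm", vm_name, "natpf1", rule]
    # modifyvm only works while the VM is powered off
    return ["VBoxManage", "modifyvm", vm_name, "--natpf1", rule]


if __name__ == "__main__":
    command = port_forward_command("p1.dcos", "nginx-hostname", 8081, 10006)
    print(" ".join(command))
    # To actually apply it: import subprocess; subprocess.run(command, check=True)
```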

Appendix A: Virtualbox Installation Problem Resolution

- On Windows 7 or Windows 10, download the installer. Easy.

- When I start the installer, everything seems to be on track until I see „rolling back action“ and I finally get this:

„Oracle VM Virtualbox x.x.x Setup Wizard ended prematurely“

Resolution of the „Setup Wizard ended prematurely“ Problem

Let us try to resolve the problem: the installer of VirtualBox downloaded from Oracle shows the exact same error: „…ended prematurely“. This is not a Docker bug. Playing with conversion tools from VirtualBox to VMware did not lead to the desired results.

The solution: Google is your friend, and the winner is: https://forums.virtualbox.org/viewtopic.php?f=6&t=61785. After backing up the registry, changing the registry entry

HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Control\Network -> MaxFilters from 8 to 20 (decimal)

and rebooting the laptop, the installation of VirtualBox is successful.