In this blog post, we will review the architecture of OpenShift before gaining some hands-on experience with OpenShift Origin, the free version of the OpenShift Container Platform, a.k.a. OpenShift Enterprise. Vagrant will help us automate the installation of an all-in-one OpenShift virtual machine deployed as a VirtualBox image. We will spin up a simple hello world service and access it remotely via the built-in load balancer of OpenShift.

Versions:

2016-07-21: original published version

2016-09-12: added more docker and kubectl commands in order to better show what is found under the hood of OpenShift.

Introduction

Someone called my attention to the Cloud category of the Red Hat Innovation Award 2016, won by Amadeus. This triggered my curiosity. On paper, I have known OpenShift for quite a while, and I have gained some experience with OpenShift Online. As a hobby Ruby and Java developer, I found that OpenShift Online cannot beat other PaaS services like Heroku. However, a local installation of the OpenShift Container Platform can potentially resolve the most pressing issue I have when working with Docker containers: the fact that persistent data needs to be separated from the ephemeral Docker containers.

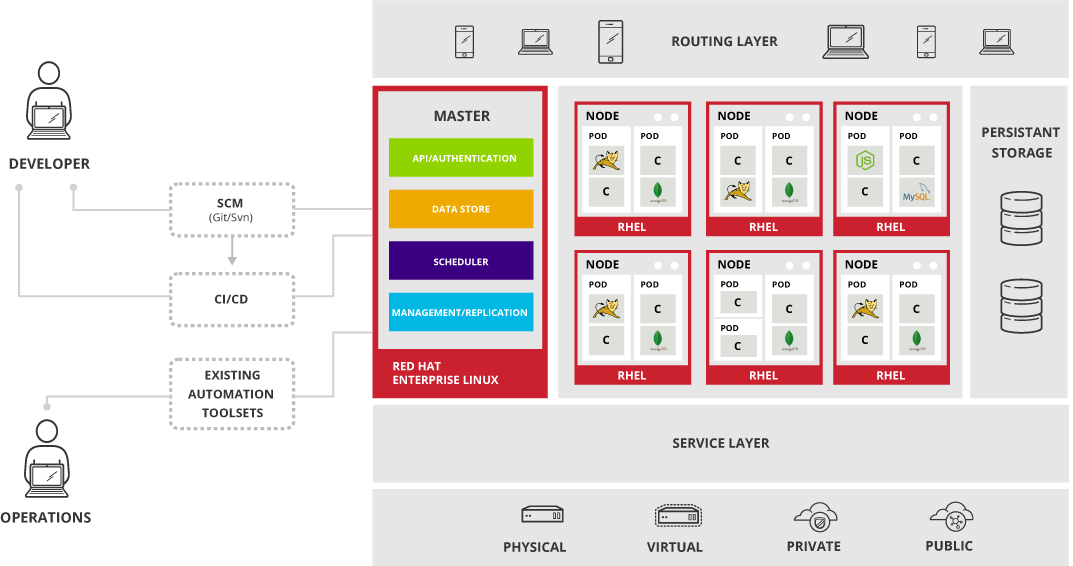

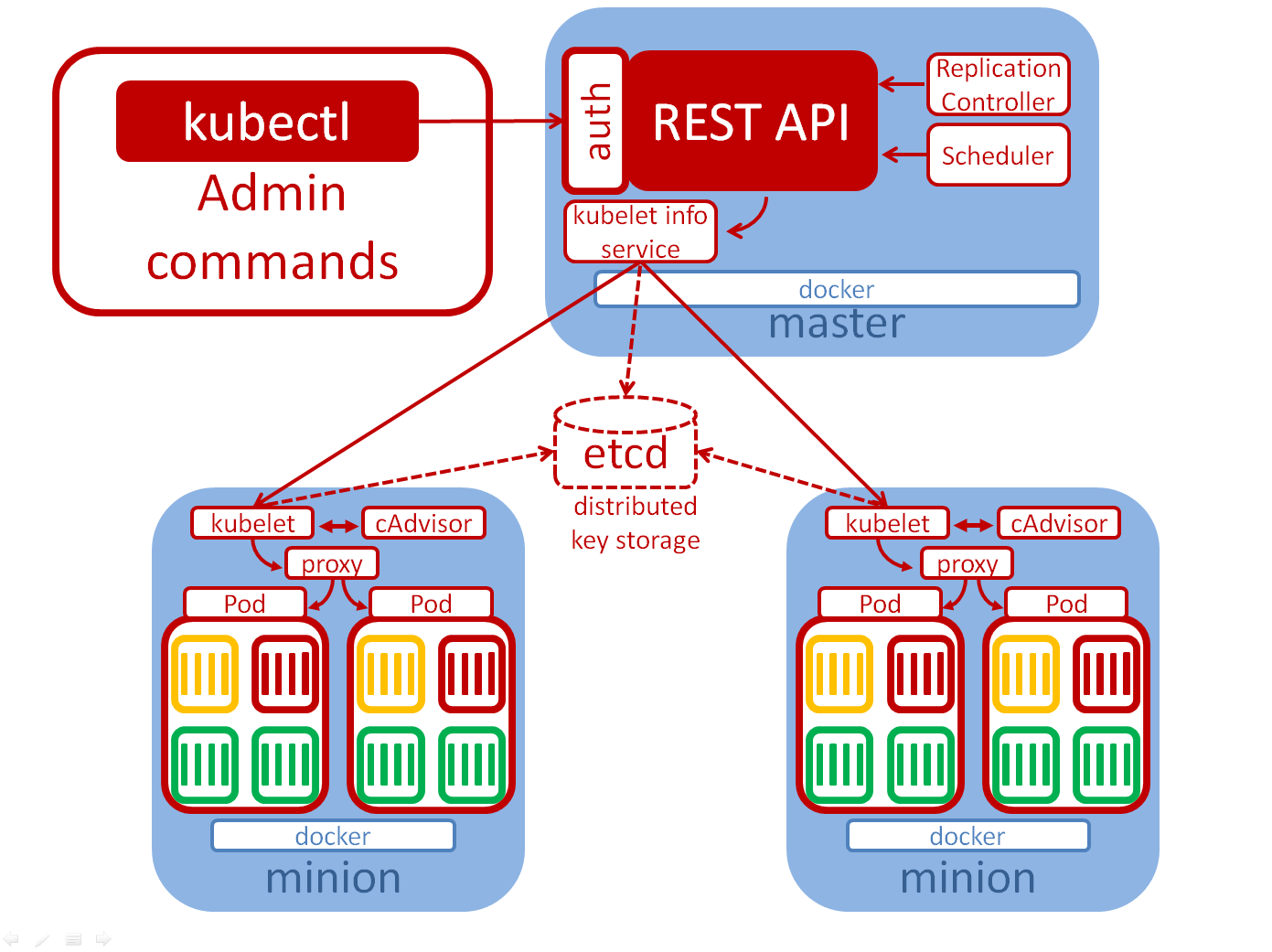

In a preceding theory session, we will analyze the OpenShift Container Platform by comparing it with native Google Kubernetes, i.e. the core component of OpenShift. Kubernetes abstracts services from containers and offers cluster redundancy as well as internal load balancing for container-to-container communication. Among other things, we will see that OpenShift adds persistent storage and load balancing for communication with external parties.

After that we will install OpenShift Origin and spin up a simple hello world service, which we will make available to external parties using the built-in load balancer of OpenShift, i.e. the HAProxy.

Architecture of the OpenShift Container Platform

OpenShift comes in the following flavors:

- OpenShift Container Platform (often called by its old name „OpenShift Enterprise“): a commercial version of the open source OpenShift Origin project

- OpenShift Origin: the upstream open source project

- OpenShift Dedicated: a commercial version of OpenShift installed on AWS

- OpenShift Online: a PaaS offered by Red Hat that is making use of OpenShift Enterprise software

See also this Q&A on the differences of those flavors.

The architecture of OpenShift Container Platform v3.2 is described here. Red Hat describes it as follows:

OpenShift v3 is a layered system designed to expose underlying Docker and Kubernetes concepts as accurately as possible, with a focus on easy composition of applications by a developer. For example, install Ruby, push code, and add MySQL.

OpenShift follows the Kubernetes network model with a single master node and several minion nodes installed on Red Hat Enterprise Linux (RHEL) 7.1 or Atomic 7.2.1. The minion nodes host the actual services as Kubernetes PODs. In the Kubernetes network model, each POD is assigned an IP address and combines several micro-service containers into a full service. This is discussed in more detail in my Kubernetes blog post.

I would like to understand which components OpenShift adds to the Kubernetes model. For that, let us compare the OpenShift architecture with the Kubernetes architecture:

We see that the RHEL master modules API/Authentication, Scheduler and Management/Replication are part of Kubernetes. The Data Store is an OpenShift add-on for managing the Persistent Storage offered by OpenShift.

Step 0: Planning and Prerequisites

It looks like OpenShift Origin offers a much simpler way of performing an evaluation than its professional brother OpenShift Enterprise. While the installation documentation of OpenShift Enterprise 3.2 is hundreds of pages long and it is not clear how to start a quick evaluation, OpenShift Origin offers an All-In-One Virtual Machine based on Vagrant. This is perfect, since I have had very good experiences with Vagrant’s way of automating the creation and configuration of VirtualBox virtual machines.

Note: OpenShift Origin is the free open source version of OpenShift Enterprise. For an evaluation of the OpenShift Container Platform based on OpenShift Origin, no license is needed.

Since we just want to evaluate OpenShift, we assume that the open source version is stable enough to do so and we will proceed with OpenShift Origin, following the above link.

Prerequisites:

With default settings, the all-in-one OpenShift VM has the following requirements:

- 2 vCPUs (I have tested it with 2 vCPUs on an i7-6700QM processor)

- 4 GB RAM

- up to 40 GB disk space (dynamic allocation, starting with ~10 GB after fresh installation)

- Hyper-V must be disabled on the host machine, since it conflicts with the usage of VirtualBox
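As a rough pre-flight check, the CPU and memory figures above can be compared against the host. The sketch below assumes a Linux host where `nproc` and `/proc/meminfo` are available; on Windows, as used in this post, the values have to be read elsewhere. The function name `meets_minimum` is made up for this illustration, and the host naturally needs some headroom beyond the VM's own 2 vCPUs and 4 GB.

```shell
#!/bin/sh
# meets_minimum CPUS MEM_GB: succeed if the given values satisfy the
# all-in-one VM defaults listed above (2 vCPUs, 4 GB RAM).
# (Hypothetical helper, not part of Vagrant or OpenShift.)
meets_minimum() {
    cpus=$1
    mem_gb=$2
    [ "$cpus" -ge 2 ] && [ "$mem_gb" -ge 4 ]
}

# Only attempt auto-detection where the Linux-specific sources exist.
if command -v nproc >/dev/null 2>&1 && [ -r /proc/meminfo ]; then
    host_cpus=$(nproc)
    # MemTotal is reported in kB; convert to whole GB (rounded down).
    host_mem_gb=$(awk '/^MemTotal:/ { print int($2 / 1024 / 1024) }' /proc/meminfo)
    if meets_minimum "$host_cpus" "$host_mem_gb"; then
        echo "Host looks sufficient: ${host_cpus} CPUs, ${host_mem_gb} GB RAM"
    else
        echo "Host may be too small: ${host_cpus} CPUs, ${host_mem_gb} GB RAM"
    fi
fi
```

Because the memory value is rounded down, a machine sold as "4 GB" will usually report 3 GB here and be flagged, which is a reasonable warning for a VM that wants 4 GB for itself.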

Step 1: Install VirtualBox

If not already done, you need to install VirtualBox found here. For my tests, I am using the already installed VirtualBox 5.0.20 r106931 on Windows 10.

Step 2: Install Vagrant

If not already done, you need to install Vagrant found here. For my tests, I am using an already installed Vagrant version 1.8.1 on my Windows 10 machine.

Step 3: Create a new Folder and Initialize Vagrant:

Let us create and enter a new directory/folder and initialize Vagrant.

(vagranthost)$ mkdir OpenShift; cd OpenShift

(vagranthost)$ vagrant init thesteve0/openshift-origin

This will create a Vagrantfile in the directory. Optionally, you can edit the Vagrantfile in order to change the resource settings. I have kept it as it is.
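For readers who do want to change the resources, the following sketch writes an example Vagrantfile with explicit CPU and memory values. It uses the standard `vb.cpus` and `vb.memory` settings of Vagrant's VirtualBox provider; whether the Vagrantfile packaged inside the thesteve0/openshift-origin box honors or overrides them has not been verified here. The helper name `write_vagrantfile_example` and the target file name are assumptions chosen so that the real Vagrantfile is not overwritten.

```shell
#!/bin/sh
# write_vagrantfile_example TARGET CPUS MEMORY_MB
# Writes a minimal Vagrantfile to TARGET that requests the given resources.
# (Hypothetical helper; review the result before renaming it to "Vagrantfile".)
write_vagrantfile_example() {
    target=$1
    cpus=$2
    memory_mb=$3
    cat > "$target" <<EOF
Vagrant.configure("2") do |config|
  config.vm.box = "thesteve0/openshift-origin"
  config.vm.provider "virtualbox" do |vb|
    vb.cpus = ${cpus}
    vb.memory = ${memory_mb}
  end
end
EOF
}

# Example: 4 vCPUs and 8 GB RAM, written next to the generated Vagrantfile.
# write_vagrantfile_example Vagrantfile.example 4 8192
```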

Step 4: Download and start the VirtualBox Image:

The next command will download the Vagrant box. This step is optional, since the vagrant up command will download the image automatically if the image is not found locally. However, the download may take a long time (2.5 GB), so I prefer to explicitly download the image first. The advantage is that you can better see whether the vagrant up process requires your attention. In my case, I am logged into Windows 10 as a non-administrator user, and vagrant up requires me to enter the administrator password. For now, let us download the Vagrant box:

(vagranthost)$ vagrant box add thesteve0/openshift-origin
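To avoid repeating the 2.5 GB download, one can first check whether the box is already present. The sketch below parses the output of `vagrant box list`, assuming the box name appears in the first column of each line; the helper name `box_listed` is made up for this example.

```shell
#!/bin/sh
# box_listed NAME: read `vagrant box list` output on stdin and succeed if a
# box with exactly this name appears in the first column.
box_listed() {
    awk -v name="$1" '$1 == name { found = 1 } END { exit found ? 0 : 1 }'
}

# Only run the real commands where Vagrant is installed.
if command -v vagrant >/dev/null 2>&1; then
    if vagrant box list | box_listed "thesteve0/openshift-origin"; then
        echo "Box already downloaded; vagrant up will not need to fetch it."
    else
        vagrant box add --provider virtualbox thesteve0/openshift-origin
    fi
fi
```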

Now let us launch the VirtualBox image:

(vagranthost)$ vagrant up

Note: you might need to enter your administrator’s username/password in a pop-up window on a Windows system at this step.

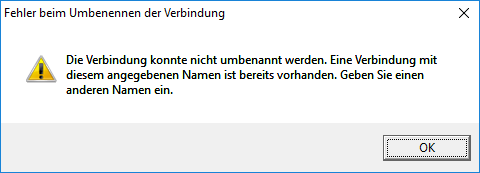

Note: As I did, you may get a VirtualBox pop-up error message like the following (sorry, in German), telling you that a network interface/connection could not be renamed. If so, click the OK button, look for an error message like Failed to create the host-only adapter in the console output of the vagrant up command, and consult the Appendix: Error Message: „Failed to create the host-only adapter“

Note: if you experience an error message VT-x is not available in the console output of the vagrant up command, then either the virtualization function VT-x is not enabled yet in your BIOS, or another process has taken ownership of this function. In my case, Hyper-V was enabled. For information on how to circumvent this problem, see the Appendix: Error message: „VT-x is not available“ below.

The log of the vagrant up command should look similar to the following:

(vagranthost)$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'thesteve0/openshift-origin' is up to date...

==> default: Clearing any previously set forwarded ports...

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: hostonly

==> default: Forwarding ports...

default: 8443 (guest) => 8443 (host) (adapter 1)

default: 22 (guest) => 2222 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

==> default: Waiting for machine to boot. This may take a few minutes...

default: SSH address: 127.0.0.1:2222

default: SSH username: vagrant

default: SSH auth method: private key

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default:

default: Vagrant insecure key detected. Vagrant will automatically replace

default: this with a newly generated keypair for better security.

default:

default: Inserting generated public key within guest...

default: Removing insecure key from the guest if it's present...

default: Key inserted! Disconnecting and reconnecting using new SSH key...

==> default: Machine booted and ready!

==> default: Checking for guest additions in VM...

default: No guest additions were detected on the base box for this VM! Guest

default: additions are required for forwarded ports, shared folders, host only

default: networking, and more. If SSH fails on this machine, please install

default: the guest additions and repackage the box to continue.

default:

default: This is not an error message; everything may continue to work properly,

default: in which case you may ignore this message.

==> default: Configuring and enabling network interfaces...

==> default: Running provisioner: shell...

default: Running: inline script

==> default: Successfully started and provisioned VM with 2 cores and 4 G of memory.

==> default: To modify the number of cores and/or available memory modify your local Vagrantfile

==> default: You can now access the OpenShift console on: https://10.2.2.2:8443/console

==> default: Configured users are (<username>/<password>):

==> default: admin/admin

==> default: user/user

==> default: But, you can also use any username and password combination you would like to create

==> default: a new user.

==> default: You can find links to the client libraries here: https://www.openshift.org/vm

==> default: If you have the oc client library on your host, you can also login from your host.

==> default: To use OpenShift CLI, run:

==> default: $ oc login https://10.2.2.2:8443
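The console address is buried near the end of that log. If the output of vagrant up has been saved to a file, the URL can be pulled out with a small filter. This is only a sketch: the helper name `console_url` and the log file name in the usage comment are assumptions, and the pattern simply looks for the first https URL ending in /console.

```shell
#!/bin/sh
# console_url: read `vagrant up` output on stdin and print the first
# https URL that ends in /console, if there is one.
console_url() {
    grep -o 'https://[^ ]*/console' | head -n 1
}

# Example usage (hypothetical log file name):
#   vagrant up 2>&1 | tee vagrant-up.log
#   console_url < vagrant-up.log
```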

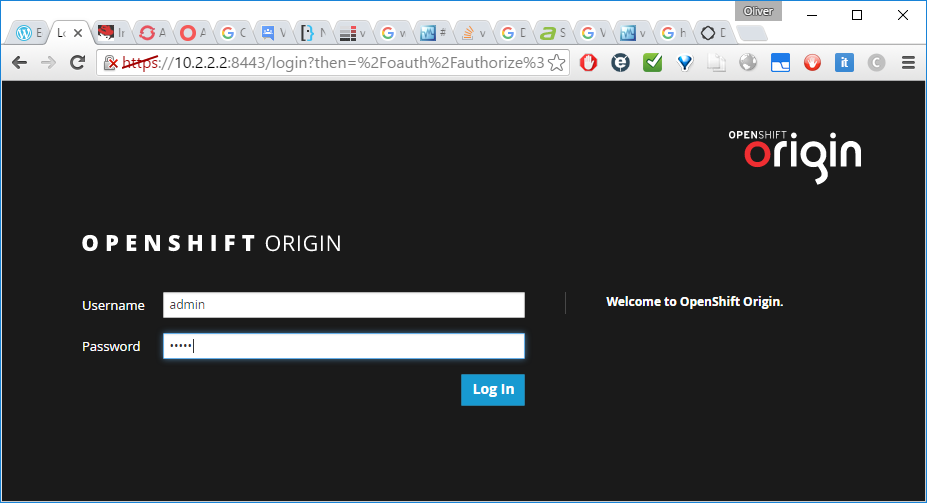

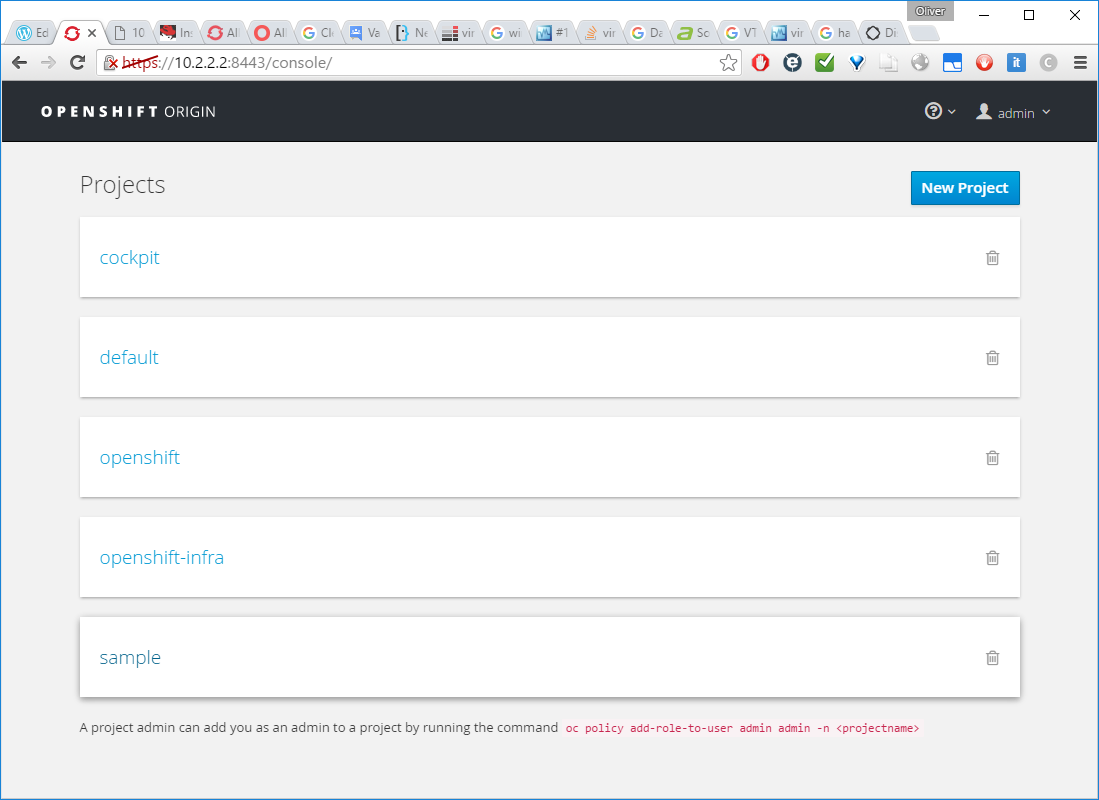

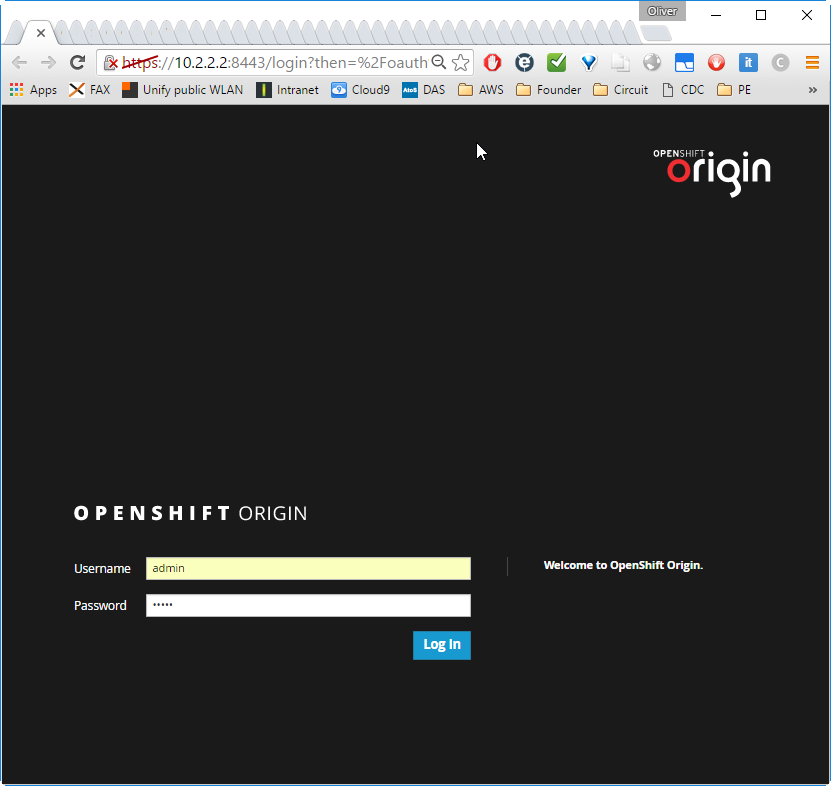

Now we can access the OpenShift Origin Console as described in the green console output of the vagrant up command:

We log in as admin (default password „admin“) and reach the dashboard, a.k.a. cockpit:

We also log into the VM via SSH and review the running docker containers:

(vagranthost)$ vagrant ssh

Last login: Wed May 25 22:28:46 2016 from 10.0.2.2

[vagrant@localhost ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

37f94a8b8fe7 docker.io/openshift/origin-logging-auth-proxy@sha256:5d8f0ecff0807befe18058468a4e4da1ffb1b62174f983a07814048a4c5e043f "openshift-auth-proxy" 6 hours ago Up 6 hours k8s_kibana-proxy.b7a9291f_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_de8e9ea2

f0bef4b0dd5c cockpit/kubernetes "/usr/libexec/cockpit" 6 hours ago Up 6 hours k8s_openshift-cockpit.dcc67ea8_openshift-cockpit-1-d104s_cockpit_86637c96-22b8-11e6-9b4c-525400659b2e_217f2c94

a1015dbd379e openshift/mysql-55-centos7:latest "container-entrypoint" 6 hours ago Up 6 hours k8s_ruby-helloworld-database.187248a_database-1-otz5r_sample_d3306dbd-22c6-11e6-9b4c-525400659b2e_9f8ab573

503e40e814fe docker.io/openshift/origin-logging-kibana@sha256:d3dcb442918284146f52a64fbe240ba01f8981c5b7cd314ffb09897d6b7174f2 "container-entrypoint" 6 hours ago Up 6 hours k8s_kibana.d9cdf1ec_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_0c33995f

abaad1a7a04a docker.io/openshift/origin-logging-fluentd:v1.2.0 "sh run.sh" 6 hours ago Up 6 hours k8s_fluentd-elasticsearch.e80f8b94_logging-fluentd-b840r_openshift-infra_cb6ad37a-22b8-11e6-9b4c-525400659b2e_229b625d

79645fee1736 docker.io/openshift/origin-logging-elasticsearch@sha256:d343056e5b4c54cf6eba1a68d7ec882178b3496bc6ece1489c8d64c9c759c3dc "sh /opt/app-root/src" 6 hours ago Up 6 hours k8s_elasticsearch.283728d0_logging-es-x485kxh8-2-xf35t_openshift-infra_2d744803-22bc-11e6-9b4c-525400659b2e_fe1d72f2

05200e4b6faf docker.io/openshift/origin-logging-curator@sha256:bb8e1038ec218fa32f332a5ce4cbc1943a2698e529d43e582979328e9ea0625a "sh run.sh" 6 hours ago Up 6 hours k8s_curator.74da5ef0_logging-curator-2-bid2b_openshift-infra_3270ec38-22bc-11e6-9b4c-525400659b2e_871f529a

e059b32d8156 openshift/origin-docker-registry:v1.2.0 "/bin/sh -c 'DOCKER_R" 6 hours ago Up 6 hours k8s_registry.9d8320b3_docker-registry-1-tkxzj_default_b0c96036-22b8-11e6-9b4c-525400659b2e_875f5403

d19bb703eb1f openshift/origin-haproxy-router:v1.2.0 "/usr/bin/openshift-r" 6 hours ago Up 6 hours k8s_router.e35a22a1_router-1-jiqbo_default_b2c59f4f-22b8-11e6-9b4c-525400659b2e_73bd3fdf

f35b6347ed77 172.30.200.195:5000/sample/origin-ruby-sample@sha256:4c1c4abfedc00e1bbef4beee528c0400a1b116f164adbbcd5110ee63705b0cac "container-entrypoint" 6 hours ago Up 6 hours k8s_ruby-helloworld.88c553e5_frontend-1-euuc2_sample_5d32b2b0-22c7-11e6-9b4c-525400659b2e_0c7185f2

1eb852178239 openshift/origin-metrics-heapster:v1.2.0 "heapster-wrapper.sh " 6 hours ago Up 6 hours k8s_heapster.515aef5_heapster-ocq5r_openshift-infra_b8570f9c-22b8-11e6-9b4c-525400659b2e_368f65a3

77fa754bbd20 openshift/origin-metrics-cassandra:v1.2.0 "/opt/apache-cassandr" 6 hours ago Up 6 hours k8s_hawkular-cassandra-1.53e5010e_hawkular-cassandra-1-d2v1b_openshift-infra_b7910261-22b8-11e6-9b4c-525400659b2e_786012a7

3b81c1cc6661 openshift/origin-metrics-hawkular-metrics:v1.2.0 "/opt/hawkular/script" 6 hours ago Up 6 hours k8s_hawkular-metrics.ea4973e9_hawkular-metrics-p56fc_openshift-infra_b9d9ed7b-22b8-11e6-9b4c-525400659b2e_b55d8ac8

d1dc301d9a14 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.4866dc9e_logging-curator-2-bid2b_openshift-infra_3270ec38-22bc-11e6-9b4c-525400659b2e_a126b251

5f62ac43421b openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.39efdc4_openshift-cockpit-1-d104s_cockpit_86637c96-22b8-11e6-9b4c-525400659b2e_9efdaed4

4f4fbd5d2436 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.f81cfdbe_database-1-otz5r_sample_d3306dbd-22c6-11e6-9b4c-525400659b2e_c3ac0116

654f5542fd46 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.4866dc9e_logging-fluentd-b840r_openshift-infra_cb6ad37a-22b8-11e6-9b4c-525400659b2e_d52bfc4b

10e9ddfa40c2 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.ffc1fdc2_frontend-1-euuc2_sample_5d32b2b0-22c7-11e6-9b4c-525400659b2e_e59cbd94

7c5d41818a2a openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.4e1b0321_heapster-ocq5r_openshift-infra_b8570f9c-22b8-11e6-9b4c-525400659b2e_3f47f556

35cb2f4c06f3 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.e73c2b2c_hawkular-metrics-p56fc_openshift-infra_b9d9ed7b-22b8-11e6-9b4c-525400659b2e_f15c3a5e

3476e5f5614e openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.4866dc9e_router-1-jiqbo_default_b2c59f4f-22b8-11e6-9b4c-525400659b2e_e73d4d2e

f3c478ec5828 openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.17400d6_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_e6b64a35

f3a91592f8ae openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.c487725a_hawkular-cassandra-1-d2v1b_openshift-infra_b7910261-22b8-11e6-9b4c-525400659b2e_9fe0be4c

45a67962af7e openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.d8bb260b_logging-es-x485kxh8-2-xf35t_openshift-infra_2d744803-22bc-11e6-9b4c-525400659b2e_24e7a7bd

fd53357903db openshift/origin-pod:v1.2.0 "/pod" 6 hours ago Up 6 hours k8s_POD.ead9fdb7_docker-registry-1-tkxzj_default_b0c96036-22b8-11e6-9b4c-525400659b2e_713291ed
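The container names in this listing encode which pod and project each container belongs to. The sketch below decodes them, assuming the naming scheme that can be read off the listing above (k8s_, then container name and hash, pod name, namespace, UID and a suffix, separated by underscores). This scheme is inferred from the output, not taken from a specification, and the helper name `pod_of_container` is made up.

```shell
#!/bin/sh
# pod_of_container: read container names on stdin (one per line) and print
# "<namespace>/<pod> <container>" for each Kubernetes-managed container.
# Assumed name layout, inferred from the listing above:
#   k8s_<container>.<hash>_<pod>_<namespace>_<uid>_<suffix>
pod_of_container() {
    awk -F_ '$1 == "k8s" && NF >= 6 {
        split($2, c, ".")      # drop the ".<hash>" part of the container field
        print $4 "/" $3, c[1]
    }'
}

# Example usage on the VM, assuming the installed Docker supports --format:
#   docker ps --format '{{.Names}}' | pod_of_container | sort
```

Sorting the result groups every service container with its companion POD container, which makes the one-pod-container-per-pod pattern in the listing easier to see.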

Try it out

Now is the time to try it out. We will try to follow the instructions on this official document.

If you are not logged into the VM already, log into the VM with

(vagranthost)$ vagrant ssh

Note: at this step we can verify that a Kubernetes node is pre-deployed:

(master)$ kubectl get nodes

NAME STATUS AGE

origin Ready 109d
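The same check can be scripted. The following sketch reads the table printed by kubectl get nodes and succeeds only if at least one node is listed and every node reports exactly the status Ready; a combined status such as "Ready,SchedulingDisabled" is deliberately treated as not ready. The helper name `all_nodes_ready` is an assumption for this example.

```shell
#!/bin/sh
# all_nodes_ready: read `kubectl get nodes` output on stdin; succeed only if
# there is at least one node and each node's STATUS column is exactly "Ready".
all_nodes_ready() {
    awk 'NR > 1 { n++; if ($2 != "Ready") bad++ }
         END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Example usage on the master:
#   kubectl get nodes | all_nodes_ready && echo "all nodes ready"
```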

TryItOut Step 1: log in as a regular user

Then we can log in as an arbitrary user (the user seems to be created automatically, if it did not exist before):

(master)$ oc login

Server [https://localhost:8443]:

Authentication required for https://localhost:8443 (openshift)

Username: test

Password: test

Login successful.

You don't have any projects. You can try to create a new project, by running

(master)$ oc new-project <projectname>

TryItOut Step 2: Create a new Project

(master)$ oc new-project testproject

Now using project "testproject" on server "https://localhost:8443".

You can add applications to this project with the 'new-app' command. For example, try:

(master)$ oc new-app centos/ruby-22-centos7~https://github.com/openshift/ruby-hello-world.git

to build a new hello-world application in Ruby.

TryItOut Step 3: Create a new Application

We have chosen the OpenShift deployment-example, which is based on a Node.js image:

(master)$ oc new-app openshift/deployment-example

--> Found Docker image 1c839d8 (12 months old) from Docker Hub for "openshift/deployment-example"

* An image stream will be created as "deployment-example:latest" that will track this image

* This image will be deployed in deployment config "deployment-example"

* Port 8080/tcp will be load balanced by service "deployment-example"

* Other containers can access this service through the hostname "deployment-example"

* WARNING: Image "deployment-example" runs as the 'root' user which may not be permitted by your cluster administrator

--> Creating resources with label app=deployment-example ...

imagestream "deployment-example" created

deploymentconfig "deployment-example" created

service "deployment-example" created

--> Success

Run 'oc status' to view your app.
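TryItOut Steps 1 to 3 can also be run non-interactively. The sketch below chains the three oc commands and uses the -u and -p options of oc login instead of the prompts. The helper names `run` and `deploy_example` and the DRY_RUN switch are made up for this illustration; with DRY_RUN=1 the commands are only printed, so the script can be inspected without a running server.

```shell
#!/bin/sh
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# deploy_example USER PROJECT: log in, create the project, deploy the image.
deploy_example() {
    user=$1
    project=$2
    # The all-in-one VM accepts any username/password pair, so the
    # password simply mirrors the username, as in the session above.
    run oc login https://localhost:8443 -u "$user" -p "$user" &&
    run oc new-project "$project" &&
    run oc new-app openshift/deployment-example
}

# Example (dry run, prints the three commands without contacting a server):
#   DRY_RUN=1 deploy_example test testproject
```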

Note: at this step we can verify that two additional docker containers have been started for this service: a pod container and a container for the actual deployment-example service. Both run on the OpenShift node (which is the same machine as the OpenShift master in our case, but will be different on productive systems; I am reflecting the correct role in the prompt for this case):

(node)$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0942e5b837a3 openshift/deployment-example@sha256:ea9135488f323060cb18ab3ec06286cd49e4b3a611fce1a6a442651ecf421f99 "/deployment v1" 7 minutes ago Up 7 minutes k8s_deployment-example.bcf0b9f2_deployment-example-1-zf0wf_testproject_5551af7a-791d-11e6-8abe-525400659b2e_9ffce706

79ae0f3ad78a openshift/origin-pod:v1.2.0 "/pod" 8 minutes ago Up 8 minutes k8s_POD.ffc1fdc2_deployment-example-1-zf0wf_testproject_5551af7a-791d-11e6-8abe-525400659b2e_f3529323

...

In addition, we can find the service and the pod with the following kubectl get commands on the master (which is the same machine as the node in our case):

(master)$ kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

deployment-example 172.30.0.241 <none> 8080/TCP 14m

(master)$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

...

testproject deployment-example-1-zf0wf 1/1 Running 0 21m

TryItOut Step 4: Get Connection Details and access the new Service

With oc status we will get some information on the newly created app.

(master)$ oc status

In project testproject on server https://localhost:8443

svc/deployment-example - 172.30.0.241:8080

dc/deployment-example deploys istag/deployment-example:latest

deployment #1 deployed 43 seconds ago - 1 pod

1 warning identified, use 'oc status -v' to see details.

In our case, the service should be reachable on 172.30.0.241:8080, but it is not. The following failed attempt is kept only for reference:

(node)$ curl http://172.30.0.241/

The command hangs forever. A docker ps command on the node reveals that a new container and a pod were started, but without any classic port mapping.

(node)$ sudo docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f98e76d86dfd openshift/deployment-example@sha256:ea9135488f323060cb18ab3ec06286cd49e4b3a611fce1a6a442651ecf421f99 "/deployment v1" 19 minutes ago Up 19 minutes k8s_deployment-example.7a13c4ca_deployment-example-1-su671_testproject_b3ddd74d-b7b3-11e6-8815-024fdf8b2de5_e262d5ea

a9c7298554da openshift/origin-pod:v1.3.1 "/pod" 19 minutes ago Up 19 minutes k8s_POD.b707fe8e_deployment-example-1-su671_testproject_b3ddd74d-b7b3-11e6-8815-024fdf8b2de5_ee64210c

The commands oc status -v and oc describe svc/deployment-example might show us what we can do:

(master)$ oc status -v

In project testproject on server https://localhost:8443

svc/deployment-example - 172.30.0.241:8080

dc/deployment-example deploys istag/deployment-example:latest

deployment #1 deployed 19 minutes ago - 1 pod

Warnings:

* container "deployment-example" in pod/deployment-example-1-o6nc0 has restarted within the last 10 minutes

* dc/deployment-example has no readiness probe to verify pods are ready to accept traffic or ensure deployment is successful.

try: oc set probe dc/deployment-example --readiness ...

View details with 'oc describe <resource>/<name>' or list everything with 'oc get all'.

From the next command, we will get the correct Endpoint IP address we need to use:

(master)$ oc describe svc/deployment-example

Name: deployment-example

Namespace: testproject

Labels: app=deployment-example

Selector: app=deployment-example,deploymentconfig=deployment-example

Type: ClusterIP

IP: 172.30.0.241

Port: 8080-tcp 8080/TCP

Endpoints: 172.17.0.14:8080

Session Affinity: None

No events.

While 172.30.0.241 is an internal address, the address 172.17.0.14 can be reached from the Docker host.
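Instead of reading the endpoint off the screen, it can be extracted from the describe output. The sketch below prints the value of the Endpoints line; it assumes that multiple endpoints, if present, are listed on that single line without spaces. The helper name `service_endpoints` is made up for this example.

```shell
#!/bin/sh
# service_endpoints: read `oc describe svc/<name>` output on stdin and print
# the value of the first "Endpoints:" line (e.g. 172.17.0.14:8080).
service_endpoints() {
    awk '$1 == "Endpoints:" { print $2; exit }'
}

# Example usage on the master:
#   oc describe svc/deployment-example | service_endpoints
```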

Note: the command oc describe svc/deployment-example seems to be a synonym of kubectl describe svc deployment-example: both commands produce exactly the same response.

Note that „svc“ can be replaced by „services“ in both cases with no change of the output.

TryItOut Step 5: Access the new Service from the OpenShift VM

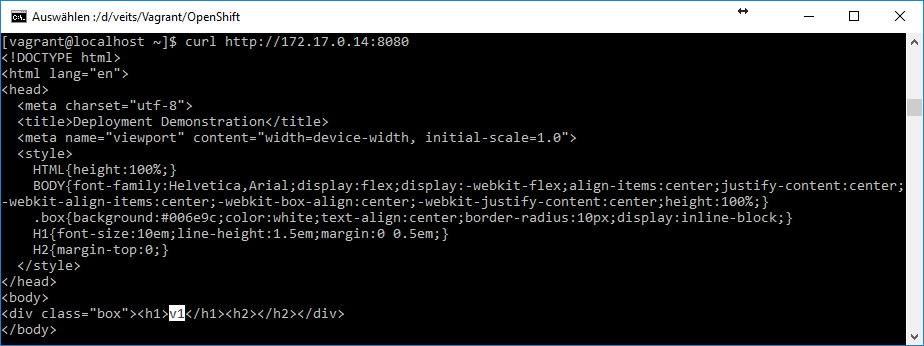

We access the service with a curl command on the OpenShift VM as follows:

(node)$ curl http://172.17.0.14:8080

We can see the output of our new app: it is just a „v1“ in the body. All other parts of the response will not be visible in an Internet browser.
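Since a curl against an unreachable address can hang, as seen earlier with the cluster IP, it can help to bound each attempt and retry a few times while the pod is still starting. The sketch below is a generic retry helper; the name `retry` and the chosen numbers are assumptions, while --silent, --fail and --max-time are standard curl options.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND...: run COMMAND until it succeeds or
# ATTEMPTS runs have failed; sleep DELAY_SECONDS between attempts.
retry() {
    attempts=$1
    delay=$2
    shift 2
    i=1
    while :; do
        "$@" && return 0
        [ "$i" -ge "$attempts" ] && return 1
        i=$((i + 1))
        sleep "$delay"
    done
}

# Example: up to 5 tries, each limited to 3 seconds, so that an unreachable
# address fails fast instead of hanging.
#   retry 5 2 curl --silent --fail --max-time 3 http://172.17.0.14:8080/
```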

TryItOut Step 6: Access the new Service from remote

In order to access a service remotely, we need to create a route as follows:

(master)$ oc expose svc/deployment-example --hostname deployment-example.apps.10.2.2.2.xip.io

route "deployment-example" exposed

This will configure an HAProxy load balancer with the FQDN hostname. All traffic will be distributed among the containers running this service.

Note: With the oc expose command, we enter the OpenShift domain: a corresponding kubectl command does not exist.

Note: Read Routes: The created routes can be shown with the following get command:

(master)$ oc get routes # or kubectl get routes

NAME HOST/PORT PATH SERVICE TERMINATION LABELS

deployment-example deployment-example.apps.10.2.2.2.xip.io deployment-example:8080-tcp app=deployment-example

Note: Delete Routes: The created routes can be deleted with the following delete command:

(master)$ oc delete route deployment-example # or kubectl delete route deployment-example

route "deployment-example" deleted

Note that corresponding kubectl commands exist as well. Therefore, Kubernetes seems to be aware of the routes created by the oc expose command.

Note that we have chosen a subdomain name from the xip.io domain, which is a free wildcard DNS service that will return the embedded IP address of any domain of the format

<anything>.<ip-address>.xip.io

In our case, deployment-example.apps.10.2.2.2.xip.io will be resolved to 10.2.2.2.
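The naming rule can be illustrated without any DNS lookup. The sketch below builds such a hostname from a prefix and an IP address and, in the other direction, extracts the IPv4 address that sits directly before the .xip.io suffix. Both helper names are made up for this example, and the code only manipulates strings; it does not query the xip.io service.

```shell
#!/bin/sh
# xip_hostname PREFIX IP: build a hostname of the form <prefix>.<ip>.xip.io
xip_hostname() {
    printf '%s.%s.xip.io\n' "$1" "$2"
}

# xip_embedded_ip HOSTNAME: print the IPv4 address embedded directly before
# the ".xip.io" suffix; prints nothing if the name does not have that shape.
xip_embedded_ip() {
    printf '%s\n' "$1" |
        grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}\.xip\.io$' |
        sed 's/\.xip\.io$//'
}

xip_hostname deployment-example.apps 10.2.2.2
xip_embedded_ip deployment-example.apps.10.2.2.2.xip.io
```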

It seems like the oc expose command is all you need to perform all the HAProxy magic: we can access the service via a browser from any machine that can access the eth1 interface (with IP address 10.2.2.2 in my case) of the OpenShift VM. In my case, I have used a browser on my physical host that is running the OpenShift VM on VirtualBox:

This is what we wanted to achieve in this session: a sample service has been created from an existing docker image, and it is running on OpenShift’s Kubernetes. Moreover, we have made it accessible to external parties within the same private network on port 80 via the HAProxy load balancer provided by OpenShift.

Possible Next Steps:

OpenShift’s main component is Kubernetes. However, OpenShift offers some add-on features on top of Kubernetes that I am interested in exploring in more detail:

- Access the service from the Internet by passing a local NAT device with port forwarding to the HAProxy and by adapting the HAproxy service DNS name accordingly

- Explore OpenShift’s Source code management, builds, and deployments for developers

- Explore Data Persistency options

- Explore OpenShift’s (and Kubernetes‘) REST API

- Explore Team and user tracking for organizing a large developer organization

- Design a Continuous Integration / Continuous Deployment workflow

Appendix: OpenShift Enterprise vs. OpenShift Origin

With the enterprise version of OpenShift, you can expect professional support for your money. OpenShift Enterprise will not release the latest features as early as the upstream open source project OpenShift Origin. OpenShift Enterprise has its focus on stability and streamlining. According to this Q&A on Stackoverflow, „some solutions / examples may not work exactly the same way. For example application templates, utilities come as part of packages for RHEL users. It also comes with some entitlements for things like RHEL and CloudForms integration.“

The only difference between OpenShift Origin and OpenShift Enterprise seems to be that Origin is more quickly releasing new features at the cost of stability. Moreover, the Enterprise version offers a tested environment and enterprise-grade support.

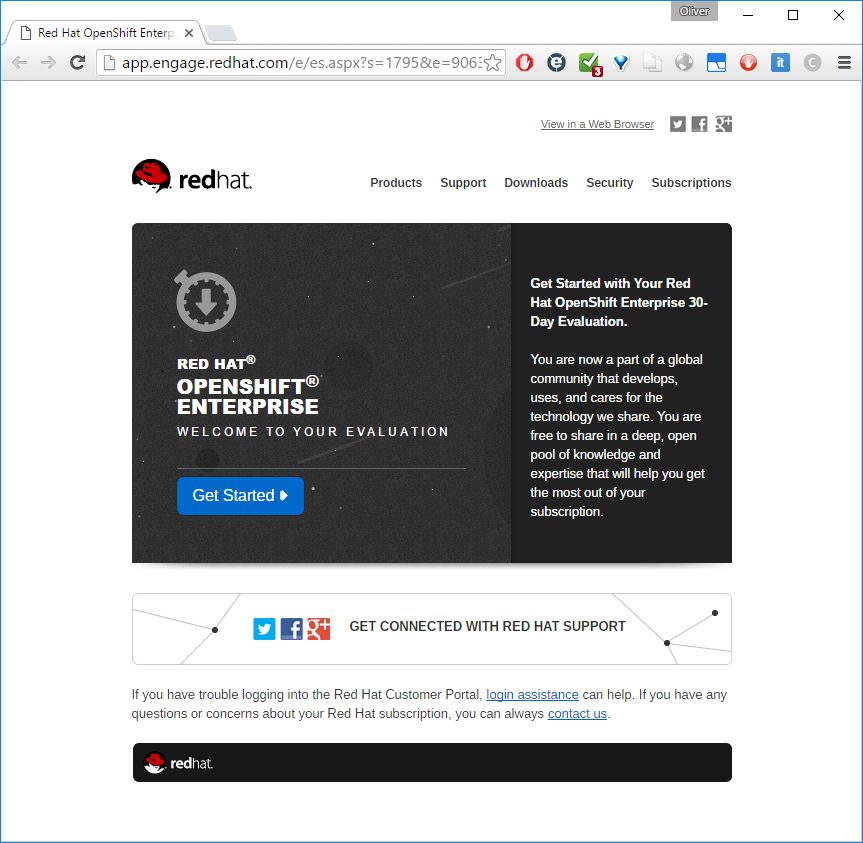

I have applied for a 30-day evaluation of Red Hat OpenShift Enterprise on this Red Hat link. Note that a Red Hat account with an email address that is not a freemail address is required. I have received an email that looks as follows:

Following the Get Started button, we reach a page with links to the documentation for OpenShift Enterprise 3.2. However, the installation documentation is hundreds of pages long, with a ~25 page prerequisites chapter. This is quite overwhelming, and it is not clear where to start for a small evaluation test. Therefore, I have chosen the „Single VM path“ described above, which is based on OpenShift Origin. It looks like my OpenShift Enterprise evaluation license will expire without me even having launched the enterprise version.

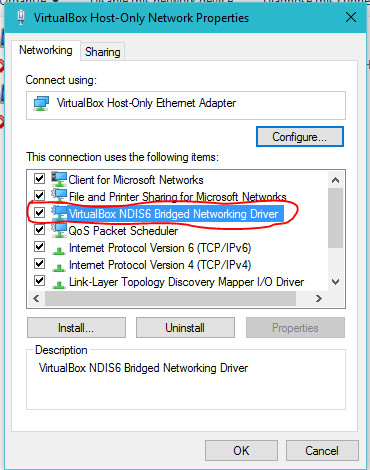

Appendix: Error Message: „Failed to create the host-only adapter“

This is a message I have received during my first attempt to start the all-in-one OpenShift VM using vagrant up.

Resolution: try again

I do not know why, but in my case, the problem was resolved just by trying again. I also reviewed the network adapter, as you can see in the full log below, but I cannot believe that it had any influence. Who knows?

Full Error log and Solution Attempts:

This is the error message I got during my first attempt to bring up the all-in-one OpenShift VM:

If so, the vagrant up process will hang at the line starting with „==> default: Clearing“ and will show the red error information after clicking the OK button in the above window:

$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Importing base box 'thesteve0/openshift-origin'...

==> default: Matching MAC address for NAT networking...

==> default: Checking if box 'thesteve0/openshift-origin' is up to date...

==> default: Setting the name of the VM: openshift-origin

==> default: Clearing any previously set network interfaces...

There was an error while executing `VBoxManage`, a CLI used by Vagrant for controlling VirtualBox. The command and stderr is shown below.

Command: ["hostonlyif", "create"]

Stderr: 0%...

Progress state: E_INVALIDARG

VBoxManage.exe: error: Failed to create the host-only adapter

VBoxManage.exe: error: Assertion failed: [!aInterfaceName.isEmpty()] at 'F:\tinderbox\win-5.0\src\VBox\Main\src-server\HostNetworkInterfaceImpl.cpp' (74) in long __cdecl HostNetworkInterface::init(class com::Bstr,class com::Bstr,class com::Guid,enum __MIDL___MIDL_itf_VirtualBox_0000_0000_0036).

VBoxManage.exe: error: Please contact the product vendor!

VBoxManage.exe: error: Details: code E_FAIL (0x80004005), component HostNetworkInterfaceWrap, interface IHostNetworkInterface

VBoxManage.exe: error: Context: "enum RTEXITCODE __cdecl handleCreate(struct HandlerArg *)" at line 71 of file VBoxManageHostonly.cpp

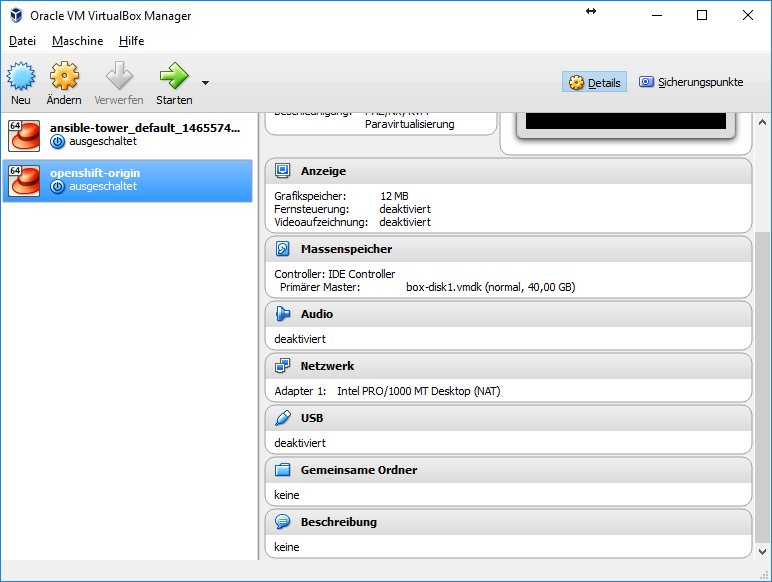

In the VirtualBox console, we will see that the openshift-origin VM is kept switched off, and there is only a NAT network interface, not a host-only interface as indicated by the above error.

I am not sure whether the error is caused by an additional VM that is found in the inventory already. Hm. Now the troubleshooting begins…

After some brief research, I found a promising Stack Overflow page. Let us try:

Hm, in my case, the checkbox was already set:

After clicking the Configure button, there was a message that I have made changes in the adapter settings that will be lost. I have assumed that those are changes that have been induced by Vagrant and I have clicked „yes“.

Then I re-ran the vagrant up command, and the host-only adapter was created successfully:

You can see that an additional host-only adapter has been deployed that was missing before. Now, when issuing the vagrant up command, the error should be gone.
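Whether a host-only adapter exists can also be checked from the command line with VBoxManage list hostonlyifs instead of the VirtualBox GUI. The sketch below prints the name of each adapter found in that output, assuming each adapter is introduced by a line starting with "Name:"; on Windows the output may carry CRLF line endings, which this sketch does not strip. The helper name `hostonly_names` is made up.

```shell
#!/bin/sh
# hostonly_names: read `VBoxManage list hostonlyifs` output on stdin and
# print the name of each host-only interface (the value of each "Name:" line).
hostonly_names() {
    sed -n 's/^Name:[[:space:]]*//p'
}

# Only query VirtualBox where the CLI is available; this is read-only.
if command -v VBoxManage >/dev/null 2>&1; then
    VBoxManage list hostonlyifs | hostonly_names
fi
```

An empty result before vagrant up and one adapter name afterwards would match the behavior described in this appendix.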

Appendix: Error message: „VT-x is not available“

If you get an error message during vagrant up telling you that VT-x is not available, a reason may be that you have enabled Hyper-V on your Windows 10 machine: VirtualBox and Hyper-V cannot share the VT-x feature of the CPU:

$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Checking if box 'thesteve0/openshift-origin' is up to date...

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: hostonly

==> default: Forwarding ports...

default: 8443 (guest) => 8443 (host) (adapter 1)

default: 22 (guest) => 2222 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

There was an error while executing `VBoxManage`, a CLI used by Vagrant for controlling VirtualBox. The command and stderr is shown below.

Command: ["startvm", "8ec20c4c-d017-4dcf-8224-6cf530ee530e", "--type", "headless"]

Stderr: VBoxManage.exe: error: VT-x is not available (VERR_VMX_NO_VMX)

VBoxManage.exe: error: Details: code E_FAIL (0x80004005), component ConsoleWrap, interface IConsole

Resolution:

found here…

Step 1: prepare your Windows machine for dual boot with and without Hyper-V

As Administrator, open a CMD and issue the commands

bcdedit /copy "{current}" /d "Hyper-V"

bcdedit /set "{current}" hypervisorlaunchtype off

bcdedit /set "{current}" description "non Hyper-V"

Step 2: Reboot the machine and choose the „non Hyper-V“ option.

Now, the vagrant up command should not show the „VT-x is not available“ error message anymore.

Appendix: Error: Vagrant OpenShift VirtualBox Image starts with only one Adapter

After some time not using OpenShift, I have started the existing OpenShift VirtualBox image, and I have noticed that the second host-only adapter was missing (again). Also, I could not find any reference in the Vagrantfile to the host-only adapter. Therefore, I have tried the following:

vagrant halt

vagrant destroy

vagrant up

The latter produced the following output:

$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Importing base box 'thesteve0/openshift-origin'...

==> default: Matching MAC address for NAT networking...

==> default: Checking if box 'thesteve0/openshift-origin' is up to date...

==> default: Setting the name of the VM: openshift-origin

==> default: Fixed port collision for 22 => 2222. Now on port 2202.

==> default: Clearing any previously set network interfaces...

==> default: Preparing network interfaces based on configuration...

default: Adapter 1: nat

default: Adapter 2: hostonly

==> default: Forwarding ports...

default: 8443 (guest) => 8443 (host) (adapter 1)

default: 22 (guest) => 2202 (host) (adapter 1)

==> default: Running 'pre-boot' VM customizations...

==> default: Booting VM...

==> default: Waiting for machine to boot. This may take a few minutes...

default: SSH address: 127.0.0.1:2202

default: SSH username: vagrant

default: SSH auth method: private key

default: Warning: Remote connection disconnect. Retrying...

default: Warning: Remote connection disconnect. Retrying...

default:

default: Vagrant insecure key detected. Vagrant will automatically replace

default: this with a newly generated keypair for better security.

default:

default: Inserting generated public key within guest...

default: Removing insecure key from the guest if it's present...

default: Key inserted! Disconnecting and reconnecting using new SSH key...

==> default: Machine booted and ready!

==> default: Checking for guest additions in VM...

default: No guest additions were detected on the base box for this VM! Guest

default: additions are required for forwarded ports, shared folders, host only

default: networking, and more. If SSH fails on this machine, please install

default: the guest additions and repackage the box to continue.

default:

default: This is not an error message; everything may continue to work properly,

default: in which case you may ignore this message.

==> default: Configuring and enabling network interfaces...

==> default: Running provisioner: shell...

default: Running: inline script

==> default:

==> default: Successfully started and provisioned VM with 2 cores and 4 G of memory.

==> default: To modify the number of cores and/or available memory modify your local Vagrantfile

==> default:

==> default: You can now access the OpenShift console on: https://10.2.2.2:8443/console

==> default:

==> default: Configured users are (/):

==> default: admin/admin

==> default: user/user

==> default: But, you can also use any username and password combination you would like to create

==> default: a new user.

==> default:

==> default: You can find links to the client libraries here: https://www.openshift.org/vm

==> default: If you have the oc client library on your host, you can also login from your host.

==> default:

==> default: To use OpenShift CLI, run:

==> default: $ oc login https://10.2.2.2:8443
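Whether the second adapter exists can also be checked from the host without reading the whole log. The following is a minimal sketch, assuming that VBoxManage is on the PATH and that the VM is named openshift-origin as in the output above; the function name has_hostonly_adapter is made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads the output of
#   VBoxManage showvminfo <vm> --machinereadable
# on stdin and succeeds if any network adapter is of type "hostonly".
# The pattern has no end-of-line anchor, so CRLF line endings on
# Windows do not break the match.
has_hostonly_adapter() {
  grep -Eq '^nic[0-9]+="hostonly"'
}

# Example (run manually, not executed here):
#   VBoxManage showvminfo openshift-origin --machinereadable | has_hostonly_adapter \
#     && echo "host-only adapter present" \
#     || echo "host-only adapter missing"
```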

Now the host-only adapter is configured again and we can connect to https://10.2.2.2:8443.

With

vagrant ssh

docker ps

we see that all Docker services have started again (docker ps had returned an empty list before reinstalling the box):

[vagrant@localhost ~]$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05895a3fe36e docker.io/openshift/origin-logging-kibana@sha256:d3dcb442918284146f52a64fbe240ba01f8981c5b7cd314ffb09897d6b7174f2 "container-entrypoint" 39 seconds ago Up 38 seconds k8s_kibana.d9cdf1ec_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_63360129

97ff65c455bf docker.io/openshift/origin-logging-elasticsearch@sha256:d343056e5b4c54cf6eba1a68d7ec882178b3496bc6ece1489c8d64c9c759c3dc "sh /opt/app-root/src" 42 seconds ago Up 41 seconds k8s_elasticsearch.283728d0_logging-es-x485kxh8-2-xf35t_openshift-infra_2d744803-22bc-11e6-9b4c-525400659b2e_5a9777b2

b8ae7fcefd5a docker.io/openshift/origin-logging-curator@sha256:bb8e1038ec218fa32f332a5ce4cbc1943a2698e529d43e582979328e9ea0625a "sh run.sh" 46 seconds ago Up 44 seconds k8s_curator.74da5ef0_logging-curator-2-bid2b_openshift-infra_3270ec38-22bc-11e6-9b4c-525400659b2e_646b13cf

bf6e293e480d docker.io/openshift/origin-logging-fluentd:v1.2.0 "sh run.sh" 48 seconds ago Up 47 seconds k8s_fluentd-elasticsearch.e80f8b94_logging-fluentd-b840r_openshift-infra_cb6ad37a-22b8-11e6-9b4c-525400659b2e_7b39586e

3e70cb2efadc cockpit/kubernetes "/usr/libexec/cockpit" 51 seconds ago Up 50 seconds k8s_openshift-cockpit.dcc67ea8_openshift-cockpit-1-d104s_cockpit_86637c96-22b8-11e6-9b4c-525400659b2e_537550bf

537d61e29059 docker.io/openshift/origin-logging-auth-proxy@sha256:5d8f0ecff0807befe18058468a4e4da1ffb1b62174f983a07814048a4c5e043f "openshift-auth-proxy" 3 minutes ago Up 3 minutes k8s_kibana-proxy.b7a9291f_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_680aace6

41ac226bbb48 openshift/mysql-55-centos7:latest "container-entrypoint" 3 minutes ago Up 3 minutes k8s_ruby-helloworld-database.187248a_database-1-otz5r_sample_d3306dbd-22c6-11e6-9b4c-525400659b2e_eb638ec4

63352a7444ce openshift/origin-metrics-hawkular-metrics:v1.2.0 "/opt/hawkular/script" 7 minutes ago Up 7 minutes k8s_hawkular-metrics.ea4973e9_hawkular-metrics-p56fc_openshift-infra_b9d9ed7b-22b8-11e6-9b4c-525400659b2e_99574b0b

3a53a5cfbe22 openshift/origin-metrics-cassandra:v1.2.0 "/opt/apache-cassandr" 7 minutes ago Up 7 minutes k8s_hawkular-cassandra-1.53e5010e_hawkular-cassandra-1-d2v1b_openshift-infra_b7910261-22b8-11e6-9b4c-525400659b2e_37a004e1

7e9db17f0210 172.30.200.195:5000/sample/origin-ruby-sample@sha256:4c1c4abfedc00e1bbef4beee528c0400a1b116f164adbbcd5110ee63705b0cac "container-entrypoint" 7 minutes ago Up 7 minutes k8s_ruby-helloworld.88c553e5_frontend-1-euuc2_sample_5d32b2b0-22c7-11e6-9b4c-525400659b2e_dea9c79c

ee5aed65aa69 openshift/origin-haproxy-router:v1.2.0 "/usr/bin/openshift-r" 7 minutes ago Up 7 minutes k8s_router.e35a22a1_router-1-jiqbo_default_b2c59f4f-22b8-11e6-9b4c-525400659b2e_a008fe68

4f9e32e9d045 openshift/origin-metrics-heapster:v1.2.0 "heapster-wrapper.sh " 7 minutes ago Up 7 minutes k8s_heapster.515aef5_heapster-ocq5r_openshift-infra_b8570f9c-22b8-11e6-9b4c-525400659b2e_0f67a18d

fa4bc1e06d60 openshift/origin-docker-registry:v1.2.0 "/bin/sh -c 'DOCKER_R" 7 minutes ago Up 7 minutes k8s_registry.9d8320b3_docker-registry-1-tkxzj_default_b0c96036-22b8-11e6-9b4c-525400659b2e_3e5b2362

05270fb7ed90 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.d8bb260b_logging-es-x485kxh8-2-xf35t_openshift-infra_2d744803-22bc-11e6-9b4c-525400659b2e_1c4857c7

59193b1b2f5f openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.e73c2b2c_hawkular-metrics-p56fc_openshift-infra_b9d9ed7b-22b8-11e6-9b4c-525400659b2e_e1d086d8

3b2c6cdd65da openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.c487725a_hawkular-cassandra-1-d2v1b_openshift-infra_b7910261-22b8-11e6-9b4c-525400659b2e_0b91a76f

ef890d51aa67 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.4e1b0321_heapster-ocq5r_openshift-infra_b8570f9c-22b8-11e6-9b4c-525400659b2e_c3a458c5

95112b354da0 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.17400d6_logging-kibana-2-hxshn_openshift-infra_35dcef08-22bc-11e6-9b4c-525400659b2e_547c358a

3bca6997c580 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.39efdc4_openshift-cockpit-1-d104s_cockpit_86637c96-22b8-11e6-9b4c-525400659b2e_30cee83b

ed1143cb897a openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.4866dc9e_logging-curator-2-bid2b_openshift-infra_3270ec38-22bc-11e6-9b4c-525400659b2e_fe75521d

d9ce5dea6d20 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.4866dc9e_logging-fluentd-b840r_openshift-infra_cb6ad37a-22b8-11e6-9b4c-525400659b2e_0c4761c6

4e28a6038c5c openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.ffc1fdc2_frontend-1-euuc2_sample_5d32b2b0-22c7-11e6-9b4c-525400659b2e_b68a506c

e4615046a70a openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.4866dc9e_router-1-jiqbo_default_b2c59f4f-22b8-11e6-9b4c-525400659b2e_da02d7e3

7ac90bc3090f openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.f81cfdbe_database-1-otz5r_sample_d3306dbd-22c6-11e6-9b4c-525400659b2e_1f35c8a2

cf6dbe004501 openshift/origin-pod:v1.2.0 "/pod" 7 minutes ago Up 7 minutes k8s_POD.ead9fdb7_docker-registry-1-tkxzj_default_b0c96036-22b8-11e6-9b4c-525400659b2e_6dc4f670

[vagrant@localhost ~]$
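The container names in this listing follow a recognizable pattern: k8s, container name plus a hash, pod name, namespace (OpenShift project), a UID and a suffix, all joined by underscores. This layout is inferred from the output above, not taken from any documentation. Under that assumption, the following minimal sketch condenses the long list into one line per application container; the function name pods_from_names is made up for this example, and the POD entries (the origin-pod infrastructure containers, one per pod) are skipped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads Docker container names on stdin, one per line,
# and prints "<namespace>/<pod><TAB><container>" for each Kubernetes-managed
# container. Assumes the underscore-separated name layout seen above.
pods_from_names() {
  awk -F_ '$1 == "k8s" && NF >= 6 {
    split($2, c, ".")                 # c[1] is the container name without the hash
    if (c[1] != "POD")                # skip the per-pod infrastructure containers
      print $4 "/" $3 "\t" c[1]
  }'
}

# Example (run manually inside the VM, not executed here;
# assumes a Docker version that supports the --format option of docker ps):
#   docker ps --format '{{.Names}}' | pods_from_names | sort
```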

Appendix: Sample Route

The OpenShift all-in-one VM comes with some sample projects you can access and review as admin. The „sample“ project contains a frontend service, a backend database service and a sample route pointing to the frontend service. Here we show how this project can be explored on the command line.

On the all-in-one VM of OpenShift, run the following commands:

$ oc login

Authentication required for https://localhost:8443 (openshift)

Username: admin

Password:

Login successful.

You have access to the following projects and can switch between them with 'oc project <projectname>':

* cockpit

* default

* openshift

* openshift-infra

* sample (current)

* testproject

Using project "sample".

$ oc project sample

Now using project "sample" on server "https://localhost:8443".

$ oc get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

database 172.30.29.101 5434/TCP 54d

frontend 172.30.39.127 5432/TCP 54d

$ oc get routes

NAME HOST/PORT PATH SERVICE TERMINATION LABELS

frontend frontend-sample.apps.10.2.2.2.xip.io frontend:web app=ruby-sample-build,template=application-template-stibuild

This sample route can be used as a template for your own projects.
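To illustrate what such a template could look like, here is a minimal sketch that prints a route definition modeled on the sample route (service frontend, port name web, host name below apps.10.2.2.2.xip.io). The function name route_yaml and all argument values in the example call are made up, and the field layout is my assumption of a minimal v1 Route object; compare it with the output of oc get route frontend -o yaml on your installation before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a minimal Route definition.
# Usage: route_yaml ROUTE_NAME SERVICE_NAME HOSTNAME PORT_NAME
route_yaml() {
  cat <<EOF
apiVersion: v1
kind: Route
metadata:
  name: $1
spec:
  host: $3
  to:
    kind: Service
    name: $2
  port:
    targetPort: $4
EOF
}

# Example (run manually, not executed here; all names are placeholders):
#   route_yaml hello hello-service hello-myproject.apps.10.2.2.2.xip.io web | oc create -f -
```

Generating the definition as text keeps it easy to review before it is sent to the server; for simple cases, oc expose service with the --hostname option should achieve the same without any YAML.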

More Information

- Monitoring

- via Cockpit: YouTube video; in the installation above, you can connect to http://cockpit.apps.10.2.2.2.xip.io/ in order to reach the Cockpit. Note, however, that two tabs shown in the YouTube video are not present in my installation; it seems that only the content of the „Cluster“ tab is displayed. Moreover, the CPU graphs seem to be missing…

- List of Docker Monitoring tools on GitHub

- Articles with comparison of Docker monitoring tools:

- Comparing 7 monitoring tools

- 6 next-gen monitoring tools tackle Docker

- DockerCon „Docker Monitoring“ presentation with Brian Christner, System Architect, Swisscom AG: compares docker stats, cAdvisor (native), cAdvisor + InfluxDB +
