Today, we will introduce Cassandra, a distributed, resilient and highly scalable NoSQL database. For simplicity, we will run the cluster in Docker containers. We will test its resiliency by killing one of two containers and verifying that all data is retained.

What is Cassandra?

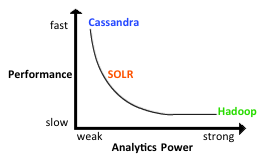

Apache Cassandra is a fast, distributed NoSQL database that can be used for big data use cases. A short comparison of Apache Cassandra with Apache Hadoop can be found in this Cassandra vs Hadoop blog post:

- Hadoop is a big data framework for storing and analyzing vast amounts of unstructured, historic data. Why 'historic'? Because Hadoop's search capabilities rely on long-running, CPU-intensive MapReduce jobs that run as batch processes.

- Cassandra is a distributed NoSQL database that is ideally suited for structured, "hot" data, i.e. Cassandra is capable of processing online workloads of a transactional nature.

I have found the following figure, which compares Cassandra with Solr/Lucene and Apache Hadoop:

Target Configuration for this Blog Post

In this Hello World blog post, we will create two Cassandra server containers and a Cassandra client container. For the sake of this test, we store the Cassandra databases within the containers (in a production environment, you would most likely store the database outside the container). We will add data to the cluster and make sure the data is replicated to both servers. We can test this by shutting down one server container, starting a new server container to restore the redundancy, shutting down a second container and verifying that the data is still available.
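The first part of this test (kill one of two nodes, then read the data from the survivor) can be sketched as a small bash script. This is a minimal sketch under our own assumptions, not the exact procedure of this post: it uses the official cassandra:3.9 image and its CASSANDRA_SEEDS environment variable to join the second node, a hypothetical keyspace/table `demo.kv` with replication factor 2 (so that each of the two nodes holds a full copy), and the default Docker bridge network. By default (DRY_RUN=1) it only prints the commands; waiting for the nodes to come up and the later node-replacement steps are left out.

```shell
#!/usr/bin/env bash
# Sketch: 2 nodes, replication factor 2, kill node1, read from node2.
# Assumptions: official cassandra:3.9 image, default bridge network,
# hypothetical keyspace/table "demo.kv".
IMAGE="${IMAGE:-cassandra:3.9}"

# Print each command when DRY_RUN=1 (the default); execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# IP address of a running container (a placeholder string in dry-run mode).
node_ip() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "IP_OF_$1"
  else
    docker inspect --format '{{ .NetworkSettings.IPAddress }}' "$1"
  fi
}

# Replication factor 2 on a 2-node cluster: every row is stored on both nodes.
CQL_WRITE="CREATE KEYSPACE IF NOT EXISTS demo WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 2};
CREATE TABLE IF NOT EXISTS demo.kv (k text PRIMARY KEY, v text);
INSERT INTO demo.kv (k, v) VALUES ('hello', 'world');"
CQL_READ="SELECT * FROM demo.kv;"

resiliency_test() {
  run docker run -d --name cassandra-node1 "$IMAGE"
  # The second node uses the first one as its seed to join the same cluster.
  run docker run -d --name cassandra-node2 -e "CASSANDRA_SEEDS=$(node_ip cassandra-node1)" "$IMAGE"
  # (Waiting until both nodes are up, e.g. via 'nodetool status', is omitted.)
  run docker exec cassandra-node1 cqlsh -e "$CQL_WRITE"
  run docker rm -f cassandra-node1   # kill the first server
  run docker exec cassandra-node2 cqlsh -e "$CQL_READ"
}
```

Run `resiliency_test` to review the commands, or `DRY_RUN=0 resiliency_test` on the Docker host (with manual pauses between the steps) to execute them. Since cqlsh reads with consistency level ONE by default, the surviving node alone can answer the final query.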

Tools used

- Vagrant 1.8.6

- VirtualBox 5.0.20

- Docker 1.12.1

- Cassandra 3.9

Prerequisites:

- > 3.3 GB DRAM (Docker host: ~0.3 GB, ~1.5 GB per Cassandra node, < ~0.1 GB for Cassandra client)
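Using the upper bound for the client, two nodes add up to 0.3 + 2 × 1.5 + 0.1 = 3.4 GB, which is consistent with the "> 3.3 GB" above. The following bash sketch turns these rough figures into a quick check on the Docker host; the function names are hypothetical, and reading `/proc/meminfo` assumes a Linux host such as the Vagrant VM used below.

```shell
#!/usr/bin/env bash
# Rough memory budget from the figures above, in MB:
# Docker host ~300, each Cassandra node ~1500, Cassandra client < 100.
required_mb() {
  local nodes="$1"
  echo $(( 300 + nodes * 1500 + 100 ))
}

# Total memory of this Linux machine in MB (/proc/meminfo reports kB).
total_mb() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# Compare available memory with the budget for a given number of nodes.
check_memory() {
  local nodes="$1" need have
  need=$(required_mb "$nodes")
  have=$(total_mb)
  if [ "$have" -ge "$need" ]; then
    echo "OK: ${have} MB total, ~${need} MB needed for ${nodes} node(s)"
  else
    echo "WARNING: only ${have} MB total, ~${need} MB needed for ${nodes} node(s)"
    return 1
  fi
}
```

For example, `check_memory 2` on the Docker host compares its total memory against the 3400 MB estimated for this post's two-node setup.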

Step 1: Install a Docker Host via Vagrant and Connect to the Host via SSH

If you are using an existing Docker host, make sure that your host has enough memory and your own Docker ho

We will run Cassandra in Docker containers in order to allow for maximum interoperability. This way, we can always use the latest Cassandra version without having to manage the Java version installed on the host: e.g. Cassandra 3.x requires Java 8, and the Docker image brings a matching Java runtime with it.
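Because the container ships its own Java runtime (the startup log further down reports OpenJDK 1.8.0_111), you can ask the image directly which Java it uses. This is a sketch, assuming that the official image's entrypoint runs commands other than `cassandra` unchanged; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: show which Java runtime the Cassandra image brings along.
IMAGE="${IMAGE:-cassandra:3.9}"

# Major Java version from a version string, e.g. "1.8.0_111" -> 8, "11.0.2" -> 11.
java_major() {
  local v="$1" major
  major="${v%%.*}"
  if [ "$major" = "1" ]; then
    # Legacy "1.x" numbering used up to Java 8.
    v="${v#1.}"
    major="${v%%.*}"
  fi
  echo "$major"
}

# Version string reported inside the container (java -version writes to stderr).
container_java_version() {
  docker run --rm "$IMAGE" java -version 2>&1 |
    awk -F'"' '/version/ { print $2; exit }'
}

# Usage on the Docker host:
#   v=$(container_java_version); echo "Java $v (major $(java_major "$v"))"
```

The host itself needs no Java installation at all; only the image's runtime matters.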

If you are new to Docker, you might want to read this blog post.

Installing Docker on Windows and Mac can be a real challenge, but no worries: we will show an easy way here that is much quicker than the one described in Docker’s official documentation:

Prerequisites of this step:

- I recommend having direct access to the Internet: through your firewall, but without any HTTP proxy. However, if you cannot get rid of your HTTP proxy, read this blog post.

- Administrator rights on your computer.

Steps to install a Docker Host VirtualBox VM:

1. Download and install VirtualBox (if the installation fails with error message "<to be completed>", see Appendix A of this blog post: Virtualbox Installation Workaround below)

2. Download and install Vagrant (requires a reboot)

3. Download a Vagrant box containing an Ubuntu-based Docker host and create a VirtualBox VM as follows:

    basesystem# mkdir ubuntu-trusty64-docker ; cd ubuntu-trusty64-docker
    basesystem# vagrant init williamyeh/ubuntu-trusty64-docker
    basesystem# vagrant up
    basesystem# vagrant ssh

Now you are logged into the Docker host and we are ready for the next step: downloading the Cassandra Docker image.

Note: I have experienced problems with the vi editor when running vagrant ssh in a Windows terminal. On Windows, consider following Appendix C of this blog post and using PuTTY instead.

Step 2 (optional): Download Cassandra Image

This extra download step is optional, since the Cassandra Docker image will be downloaded automatically in step 3, if it is not already found on the system:

    (dockerhost)$ docker pull cassandra
    Using default tag: latest
    latest: Pulling from library/cassandra
    386a066cd84a: Already exists
    e4bd24d76b78: Pull complete
    5ccb1c317672: Pull complete
    a7ffd548f738: Pull complete
    d6f6138be804: Pull complete
    756363f453c9: Pull complete
    26258521e648: Pull complete
    fb207e348163: Pull complete
    3f9a7ac16b1d: Pull complete
    49e0632fe1f1: Pull complete
    ba775b0b41f4: Pull complete
    Digest: sha256:f5b1391b457ead432dc05d34797212f038bd9bd4f0b0260d90ce74e53cbe7ca9
    Status: Downloaded newer image for cassandra:latest

The version of the downloaded Cassandra image can be checked with the following command:

    (dockerhost)$ sudo docker run -it --rm --name cassandra cassandra -v
    3.9

We are currently using version 3.9. If you want to make sure that you use exactly the same version as I have used in this blog post, you can use the image name cassandra:3.9 instead of cassandra in all docker commands.
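One way to follow this advice consistently is to define the image reference once and reuse it. The bash sketch below is our own convention, not part of the official image: the variable and function names are hypothetical, and the tag parsing is deliberately simplified (no registry ports, no `@sha256` digests). It relies on the behavior shown in the pull output above, where a reference without a tag falls back to `latest`.

```shell
#!/usr/bin/env bash
# Pin the image version once and reuse it in every docker command.
CASSANDRA_IMAGE="${CASSANDRA_IMAGE:-cassandra:3.9}"

# Tag of an image reference; Docker uses "latest" when no tag is given.
# Simplified: does not handle registry hosts with a port or @sha256 digests.
image_tag() {
  case "$1" in
    *:*) echo "${1##*:}" ;;
    *)   echo "latest" ;;
  esac
}

# Fail (with a warning) if an image reference is not pinned to a version.
check_pinned() {
  if [ "$(image_tag "$1")" = "latest" ]; then
    echo "warning: '$1' uses the moving 'latest' tag" >&2
    return 1
  fi
}

# Pull the pinned image only if it is not present locally yet.
ensure_image() {
  docker inspect --type=image "$CASSANDRA_IMAGE" >/dev/null 2>&1 ||
    docker pull "$CASSANDRA_IMAGE"
}

# Usage on the Docker host:
#   check_pinned "$CASSANDRA_IMAGE" && ensure_image
#   sudo docker run -it --rm "$CASSANDRA_IMAGE" -v
```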

Step 3: Run Cassandra in Interactive Terminal Mode

In this step, we will run Cassandra interactively (with the -it switch instead of the -d switch) to see better what is happening. In a production environment, you will use the detached mode -d instead of the interactive terminal mode -it.
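In detached mode, the startup log is not shown in the terminal, so you need another way to notice when the node is ready. The bash sketch below is one possible approach under our own assumptions: it publishes the same ports as the interactive command used in this step, and it treats the node as ready once `cqlsh` inside the container can list the keyspaces. The function names and the retry limits (60 attempts, 5 seconds apart) are hypothetical. The port comments follow the node configuration printed in the startup log (storage_port, ssl_storage_port, native_transport_port, rpc_port, and JMX on 7199).

```shell
#!/usr/bin/env bash
# Ports exposed by the Cassandra image:
#   7000 inter-node (storage_port)      7001 inter-node over TLS (ssl_storage_port)
#   9042 CQL (native_transport_port)    9160 Thrift (rpc_port)
#   7199 JMX, only reachable locally by default, therefore not published here
PORTS=(7000 7001 9042 9160)

# One "-pN:N" flag per line, publishing each container port on the same host port.
port_flags() {
  local p
  for p in "$@"; do
    printf -- '-p%s:%s\n' "$p" "$p"
  done
}

# Retry COMMAND until it succeeds, at most ATTEMPTS times, DELAY seconds apart.
# Usage: wait_for ATTEMPTS DELAY COMMAND [ARGS...]
wait_for() {
  local attempts="$1" delay="$2" i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Detached counterpart of the interactive command; returns once CQL answers.
start_detached() {
  local name="${1:-cassandra-node1}"
  local -a flags
  mapfile -t flags < <(port_flags "${PORTS[@]}")
  docker run -d --name "$name" "${flags[@]}" cassandra
  wait_for 60 5 docker exec "$name" cqlsh -e 'DESCRIBE KEYSPACES'
}
```

After `start_detached cassandra-node1` returns, `docker logs -f cassandra-node1` shows the same startup log that the interactive mode prints to the terminal.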

By analyzing the Cassandra image with the online imagelayer tool, we have found that the default command is /docker-entrypoint.sh cassandra -f and that Cassandra uses the ports 7000/tcp, 7001/tcp, 7199/tcp, 9042/tcp and 9160/tcp. We keep the entrypoint and map all ports except the JMX port 7199 to the outside world:

(dockerhost)$ sudo docker run -it --rm --name cassandra-node1 -p7000:7000 -p7001:7001 -p9042:9042 -p9160:9160 cassandra

INFO 15:30:17 Configuration location: file:/etc/cassandra/cassandra.yaml

INFO 15:30:17 Node configuration:[allocate_tokens_for_keyspace=null; authenticator=AllowAllAuthenticator; authorizer=AllowAllAuthorizer; auto_bootstrap=true; auto_snapshot=true; batch_size_fail_threshold_in_kb=50; batch_size_warn_threshold_in_kb=5; batchlog_replay_throttle_in_kb=1024; broadcast_address=172.17.0.4; broadcast_rpc_address=172.17.0.4; buffer_pool_use_heap_if_exhausted=true; cas_contention_timeout_in_ms=1000; cdc_enabled=false; cdc_free_space_check_interval_ms=250; cdc_raw_directory=null; cdc_total_space_in_mb=null; client_encryption_options=; cluster_name=Test Cluster; column_index_cache_size_in_kb=2; column_index_size_in_kb=64; commit_failure_policy=stop; commitlog_compression=null; commitlog_directory=/var/lib/cassandra/commitlog; commitlog_max_compression_buffers_in_pool=3; commitlog_periodic_queue_size=-1; commitlog_segment_size_in_mb=32; commitlog_sync=periodic; commitlog_sync_batch_window_in_ms=null; commitlog_sync_period_in_ms=10000; commitlog_total_space_in_mb=null; compaction_large_partition_warning_threshold_mb=100; compaction_throughput_mb_per_sec=16; concurrent_compactors=null; concurrent_counter_writes=32; concurrent_materialized_view_writes=32; concurrent_reads=32; concurrent_replicates=null; concurrent_writes=32; counter_cache_keys_to_save=2147483647; counter_cache_save_period=7200; counter_cache_size_in_mb=null; counter_write_request_timeout_in_ms=5000; credentials_cache_max_entries=1000; credentials_update_interval_in_ms=-1; credentials_validity_in_ms=2000; cross_node_timeout=false; data_file_directories=[Ljava.lang.String;@2928854b; disk_access_mode=auto; disk_failure_policy=stop; disk_optimization_estimate_percentile=0.95; disk_optimization_page_cross_chance=0.1; disk_optimization_strategy=ssd; dynamic_snitch=true; dynamic_snitch_badness_threshold=0.1; dynamic_snitch_reset_interval_in_ms=600000; dynamic_snitch_update_interval_in_ms=100; enable_scripted_user_defined_functions=false; enable_user_defined_functions=false; 
enable_user_defined_functions_threads=true; encryption_options=null; endpoint_snitch=SimpleSnitch; file_cache_size_in_mb=null; gc_log_threshold_in_ms=200; gc_warn_threshold_in_ms=1000; hinted_handoff_disabled_datacenters=[]; hinted_handoff_enabled=true; hinted_handoff_throttle_in_kb=1024; hints_compression=null; hints_directory=null; hints_flush_period_in_ms=10000; incremental_backups=false; index_interval=null; index_summary_capacity_in_mb=null; index_summary_resize_interval_in_minutes=60; initial_token=null; inter_dc_stream_throughput_outbound_megabits_per_sec=200; inter_dc_tcp_nodelay=false; internode_authenticator=null; internode_compression=dc; internode_recv_buff_size_in_bytes=null; internode_send_buff_size_in_bytes=null; key_cache_keys_to_save=2147483647; key_cache_save_period=14400; key_cache_size_in_mb=null; listen_address=172.17.0.4; listen_interface=null; listen_interface_prefer_ipv6=false; listen_on_broadcast_address=false; max_hint_window_in_ms=10800000; max_hints_delivery_threads=2; max_hints_file_size_in_mb=128; max_mutation_size_in_kb=null; max_streaming_retries=3; max_value_size_in_mb=256; memtable_allocation_type=heap_buffers; memtable_cleanup_threshold=null; memtable_flush_writers=1; memtable_heap_space_in_mb=null; memtable_offheap_space_in_mb=null; min_free_space_per_drive_in_mb=50; native_transport_max_concurrent_connections=-1; native_transport_max_concurrent_connections_per_ip=-1; native_transport_max_frame_size_in_mb=256; native_transport_max_threads=128; native_transport_port=9042; native_transport_port_ssl=null; num_tokens=256; otc_coalescing_strategy=TIMEHORIZON; otc_coalescing_window_us=200; partitioner=org.apache.cassandra.dht.Murmur3Partitioner; permissions_cache_max_entries=1000; permissions_update_interval_in_ms=-1; permissions_validity_in_ms=2000; phi_convict_threshold=8.0; prepared_statements_cache_size_mb=null; range_request_timeout_in_ms=10000; read_request_timeout_in_ms=5000; 
request_scheduler=org.apache.cassandra.scheduler.NoScheduler; request_scheduler_id=null; request_scheduler_options=null; request_timeout_in_ms=10000; role_manager=CassandraRoleManager; roles_cache_max_entries=1000; roles_update_interval_in_ms=-1; roles_validity_in_ms=2000; row_cache_class_name=org.apache.cassandra.cache.OHCProvider; row_cache_keys_to_save=2147483647; row_cache_save_period=0; row_cache_size_in_mb=0; rpc_address=0.0.0.0; rpc_interface=null; rpc_interface_prefer_ipv6=false; rpc_keepalive=true; rpc_listen_backlog=50; rpc_max_threads=2147483647; rpc_min_threads=16; rpc_port=9160; rpc_recv_buff_size_in_bytes=null; rpc_send_buff_size_in_bytes=null; rpc_server_type=sync; saved_caches_directory=/var/lib/cassandra/saved_caches; seed_provider=org.apache.cassandra.locator.SimpleSeedProvider{seeds=172.17.0.4}; server_encryption_options=; snapshot_before_compaction=false; ssl_storage_port=7001; sstable_preemptive_open_interval_in_mb=50; start_native_transport=true; start_rpc=false; storage_port=7000; stream_throughput_outbound_megabits_per_sec=200; streaming_socket_timeout_in_ms=86400000; thrift_framed_transport_size_in_mb=15; thrift_max_message_length_in_mb=16; thrift_prepared_statements_cache_size_mb=null; tombstone_failure_threshold=100000; tombstone_warn_threshold=1000; tracetype_query_ttl=86400; tracetype_repair_ttl=604800; transparent_data_encryption_options=org.apache.cassandra.config.TransparentDataEncryptionOptions@27ae2fd0; trickle_fsync=false; trickle_fsync_interval_in_kb=10240; truncate_request_timeout_in_ms=60000; unlogged_batch_across_partitions_warn_threshold=10; user_defined_function_fail_timeout=1500; user_defined_function_warn_timeout=500; user_function_timeout_policy=die; windows_timer_interval=1; write_request_timeout_in_ms=2000]

INFO 15:30:17 DiskAccessMode 'auto' determined to be mmap, indexAccessMode is mmap

INFO 15:30:17 Global memtable on-heap threshold is enabled at 251MB

INFO 15:30:17 Global memtable off-heap threshold is enabled at 251MB

WARN 15:30:18 Only 22.856GiB free across all data volumes. Consider adding more capacity to your cluster or removing obsolete snapshots

INFO 15:30:18 Hostname: 4ba7699e4fc2

INFO 15:30:18 JVM vendor/version: OpenJDK 64-Bit Server VM/1.8.0_111

INFO 15:30:18 Heap size: 1004.000MiB/1004.000MiB

INFO 15:30:18 Code Cache Non-heap memory: init = 2555904(2496K) used = 3906816(3815K) committed = 3932160(3840K) max = 251658240(245760K)

INFO 15:30:18 Metaspace Non-heap memory: init = 0(0K) used = 15609080(15243K) committed = 16252928(15872K) max = -1(-1K)

INFO 15:30:18 Compressed Class Space Non-heap memory: init = 0(0K) used = 1909032(1864K) committed = 2097152(2048K) max = 1073741824(1048576K)

INFO 15:30:18 Par Eden Space Heap memory: init = 167772160(163840K) used = 73864848(72133K) committed = 167772160(163840K) max = 167772160(163840K)

INFO 15:30:18 Par Survivor Space Heap memory: init = 20971520(20480K) used = 0(0K) committed = 20971520(20480K) max = 20971520(20480K)

INFO 15:30:18 CMS Old Gen Heap memory: init = 864026624(843776K) used = 0(0K) committed = 864026624(843776K) max = 864026624(843776K)

INFO 15:30:18 Classpath: /etc/cassandra:/usr/share/cassandra/lib/HdrHistogram-2.1.9.jar:/usr/share/cassandra/lib/ST4-4.0.8.jar:/usr/share/cassandra/lib/airline-0.6.jar:/usr/share/cassandra/lib/antlr-runtime-3.5.2.jar:/usr/share/cassandra/lib/asm-5.0.4.jar:/usr/share/cassandra/lib/caffeine-2.2.6.jar:/usr/share/cassandra/lib/cassandra-driver-core-3.0.1-shaded.jar:/usr/share/cassandra/lib/commons-cli-1.1.jar:/usr/share/cassandra/lib/commons-codec-1.2.jar:/usr/share/cassandra/lib/commons-lang3-3.1.jar:/usr/share/cassandra/lib/commons-math3-3.2.jar:/usr/share/cassandra/lib/compress-lzf-0.8.4.jar:/usr/share/cassandra/lib/concurrent-trees-2.4.0.jar:/usr/share/cassandra/lib/concurrentlinkedhashmap-lru-1.4.jar:/usr/share/cassandra/lib/disruptor-3.0.1.jar:/usr/share/cassandra/lib/ecj-4.4.2.jar:/usr/share/cassandra/lib/guava-18.0.jar:/usr/share/cassandra/lib/high-scale-lib-1.0.6.jar:/usr/share/cassandra/lib/hppc-0.5.4.jar:/usr/share/cassandra/lib/jackson-core-asl-1.9.2.jar:/usr/share/cassandra/lib/jackson-mapper-asl-1.9.2.jar:/usr/share/cassandra/lib/jamm-0.3.0.jar:/usr/share/cassandra/lib/javax.inject.jar:/usr/share/cassandra/lib/jbcrypt-0.3m.jar:/usr/share/cassandra/lib/jcl-over-slf4j-1.7.7.jar:/usr/share/cassandra/lib/jflex-1.6.0.jar:/usr/share/cassandra/lib/jna-4.0.0.jar:/usr/share/cassandra/lib/joda-time-2.4.jar:/usr/share/cassandra/lib/json-simple-1.1.jar:/usr/share/cassandra/lib/libthrift-0.9.2.jar:/usr/share/cassandra/lib/log4j-over-slf4j-1.7.7.jar:/usr/share/cassandra/lib/logback-classic-1.1.3.jar:/usr/share/cassandra/lib/logback-core-1.1.3.jar:/usr/share/cassandra/lib/lz4-1.3.0.jar:/usr/share/cassandra/lib/metrics-core-3.1.0.jar:/usr/share/cassandra/lib/metrics-jvm-3.1.0.jar:/usr/share/cassandra/lib/metrics-logback-3.1.0.jar:/usr/share/cassandra/lib/netty-all-4.0.39.Final.jar:/usr/share/cassandra/lib/ohc-core-0.4.3.jar:/usr/share/cassandra/lib/ohc-core-j8-0.4.3.jar:/usr/share/cassandra/lib/primitive-1.0.jar:/usr/share/cassandra/lib/reporter-config-base-3.0.0.jar:/usr/share/cassandra/lib/reporter-config3-3.0.0.jar:/usr/share/cassandra/lib/sigar-1.6.4.jar:/usr/share/cassandra/lib/slf4j-api-1.7.7.jar:/usr/share/cassandra/lib/snakeyaml-1.11.jar:/usr/share/cassandra/lib/snappy-java-1.1.1.7.jar:/usr/share/cassandra/lib/snowball-stemmer-1.3.0.581.1.jar:/usr/share/cassandra/lib/stream-2.5.2.jar:/usr/share/cassandra/lib/thrift-server-0.3.7.jar:/usr/share/cassandra/apache-cassandra-3.9.jar:/usr/share/cassandra/apache-cassandra-thrift-3.9.jar:/usr/share/cassandra/apache-cassandra.jar:/usr/share/cassandra/stress.jar::/usr/share/cassandra/lib/jamm-0.3.0.jar

INFO 15:30:18 JVM Arguments: [-Xloggc:/var/log/cassandra/gc.log, -ea, -XX:+UseThreadPriorities, -XX:ThreadPriorityPolicy=42, -XX:+HeapDumpOnOutOfMemoryError, -Xss256k, -XX:StringTableSize=1000003, -XX:+AlwaysPreTouch, -XX:-UseBiasedLocking, -XX:+UseTLAB, -XX:+ResizeTLAB, -XX:+PerfDisableSharedMem, -Djava.net.preferIPv4Stack=true, -XX:+UseParNewGC, -XX:+UseConcMarkSweepGC, -XX:+CMSParallelRemarkEnabled, -XX:SurvivorRatio=8, -XX:MaxTenuringThreshold=1, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:CMSWaitDuration=10000, -XX:+CMSParallelInitialMarkEnabled, -XX:+CMSEdenChunksRecordAlways, -XX:+CMSClassUnloadingEnabled, -XX:+PrintGCDetails, -XX:+PrintGCDateStamps, -XX:+PrintHeapAtGC, -XX:+PrintTenuringDistribution, -XX:+PrintGCApplicationStoppedTime, -XX:+PrintPromotionFailure, -XX:+UseGCLogFileRotation, -XX:NumberOfGCLogFiles=10, -XX:GCLogFileSize=10M, -Xms1024M, -Xmx1024M, -Xmn200M, -XX:CompileCommandFile=/etc/cassandra/hotspot_compiler, -javaagent:/usr/share/cassandra/lib/jamm-0.3.0.jar, -Dcassandra.jmx.local.port=7199, -Dcom.sun.management.jmxremote.authenticate=false, -Dcom.sun.management.jmxremote.password.file=/etc/cassandra/jmxremote.password, -Djava.library.path=/usr/share/cassandra/lib/sigar-bin, -Dcassandra.libjemalloc=/usr/lib/x86_64-linux-gnu/libjemalloc.so.1, -Dlogback.configurationFile=logback.xml, -Dcassandra.logdir=/var/log/cassandra, -Dcassandra.storagedir=/var/lib/cassandra, -Dcassandra-foreground=yes]

WARN 15:30:18 Unable to lock JVM memory (ENOMEM). This can result in part of the JVM being swapped out, especially with mmapped I/O enabled. Increase RLIMIT_MEMLOCK or run Cassandra as root.

INFO 15:30:18 jemalloc seems to be preloaded from /usr/lib/x86_64-linux-gnu/libjemalloc.so.1

WARN 15:30:18 JMX is not enabled to receive remote connections. Please see cassandra-env.sh for more info.

WARN 15:30:18 OpenJDK is not recommended. Please upgrade to the newest Oracle Java release

INFO 15:30:18 Initializing SIGAR library

WARN 15:30:18 Cassandra server running in degraded mode. Is swap disabled? : false, Address space adequate? : true, nofile limit adequate? : true, nproc limit adequate? : true

WARN 15:30:18 Directory /var/lib/cassandra/data doesn't exist

WARN 15:30:18 Directory /var/lib/cassandra/commitlog doesn't exist

WARN 15:30:18 Directory /var/lib/cassandra/saved_caches doesn't exist

WARN 15:30:18 Directory /var/lib/cassandra/hints doesn't exist

INFO 15:30:18 Initialized prepared statement caches with 10 MB (native) and 10 MB (Thrift)

INFO 15:30:19 Initializing system.IndexInfo

INFO 15:30:20 Initializing system.batches

INFO 15:30:20 Initializing system.paxos

INFO 15:30:20 Initializing system.local

INFO 15:30:20 Initializing system.peers

INFO 15:30:20 Initializing system.peer_events

INFO 15:30:20 Initializing system.range_xfers

INFO 15:30:20 Initializing system.compaction_history

INFO 15:30:20 Initializing system.sstable_activity

INFO 15:30:20 Initializing system.size_estimates

INFO 15:30:20 Initializing system.available_ranges

INFO 15:30:20 Initializing system.views_builds_in_progress

INFO 15:30:20 Initializing system.built_views

INFO 15:30:20 Initializing system.hints

INFO 15:30:20 Initializing system.batchlog

INFO 15:30:20 Initializing system.schema_keyspaces

INFO 15:30:20 Initializing system.schema_columnfamilies

INFO 15:30:20 Initializing system.schema_columns

INFO 15:30:20 Initializing system.schema_triggers

INFO 15:30:20 Initializing system.schema_usertypes

INFO 15:30:20 Initializing system.schema_functions

INFO 15:30:20 Initializing system.schema_aggregates

INFO 15:30:20 Not submitting build tasks for views in keyspace system as storage service is not initialized

INFO 15:30:20 Configured JMX server at: service:jmx:rmi://127.0.0.1/jndi/rmi://127.0.0.1:7199/jmxrmi

INFO 15:30:21 Initializing key cache with capacity of 50 MBs.

INFO 15:30:21 Initializing row cache with capacity of 0 MBs

INFO 15:30:21 Initializing counter cache with capacity of 25 MBs

INFO 15:30:21 Scheduling counter cache save to every 7200 seconds (going to save all keys).

INFO 15:30:21 Global buffer pool is enabled, when pool is exhausted (max is 251.000MiB) it will allocate on heap

INFO 15:30:21 Populating token metadata from system tables

INFO 15:30:21 Token metadata:

INFO 15:30:21 Initializing system_schema.keyspaces

INFO 15:30:21 Initializing system_schema.tables

INFO 15:30:21 Initializing system_schema.columns

INFO 15:30:21 Initializing system_schema.triggers

INFO 15:30:21 Initializing system_schema.dropped_columns

INFO 15:30:21 Initializing system_schema.views

INFO 15:30:21 Initializing system_schema.types

INFO 15:30:21 Initializing system_schema.functions

INFO 15:30:21 Initializing system_schema.aggregates

INFO 15:30:21 Initializing system_schema.indexes

INFO 15:30:21 Not submitting build tasks for views in keyspace system_schema as storage service is not initialized

INFO 15:30:21 Completed loading (5 ms; 1 keys) KeyCache cache

INFO 15:30:21 No commitlog files found; skipping replay

INFO 15:30:21 Populating token metadata from system tables

INFO 15:30:21 Token metadata:

INFO 15:30:22 Cassandra version: 3.9

INFO 15:30:22 Thrift API version: 20.1.0

INFO 15:30:22 CQL supported versions: 3.4.2 (default: 3.4.2)

INFO 15:30:22 Initializing index summary manager with a memory pool size of 50 MB and a resize interval of 60 minutes

INFO 15:30:22 Starting Messaging Service on /172.17.0.4:7000 (eth0)

WARN 15:30:22 No host ID found, created 1c7f41f6-4513-4949-abc3-0335af298fc8 (Note: This should happen exactly once per node).

INFO 15:30:22 Loading persisted ring state

INFO 15:30:22 Starting up server gossip

INFO 15:30:22 This node will not auto bootstrap because it is configured to be a seed node.

INFO 15:30:22 Generated random tokens. tokens are [295917137465811607, -302115512024598814, -4810310810107556185, -7541303704934353556, -6783448374042524533, -304630524111773314, 1533898300851998947, 3941083117284553885, -6988940081331410223, -4534890749515718142, 4308460093122238220, 7581503118159726763, -6723684273635062672, 1884874259542417756, 7788232024365633386, 5915388149540707275, -6016738271397646318, 1614489580517171638, -3947302573022728739, 1923926062108950529, -9108925830760249950, -9060042955322751814, -2000084340205921073, -6132707306760563996, -6883241210703381902, 8740195267857701913, 8041076389604804564, -6303053730206256533, 598769270772963796, -2041316525404296230, -3860133938689713137, 4497202060050914136, 8955694409023320159, 3976567367560776009, -9165275604411410822, 1012738234769039757, 7642490246886963502, -3432866062799149095, 2519199740046251471, 2388427761311398841, -6886560953340875448, -4905186677634319463, -2365025404983910008, -8627402965240057817, -7397018902928602797, 1108859385650835241, 5281094978891453223, 6855360109813178939, -7807165856254133598, 1028026472880211244, 16660751466306624, 4072175354537615176, 2046113304521435920, -4044116572714093080, 98476484927120434, -5650328009808548456, -1384196055490211145, 8269412378242105431, -3207741555694791033, 8461694112286132273, 7684200390338837062, -3510258253288340132, 8994172498716928245, 5962685449452339479, -6226929694277901237, -3500613953333362662, -1492091879021245132, -947060640443297664, -6146648192398214417, -4464345544784150661, 6672100140478851757, -1340386687486760416, -3402143056639425167, -8508238454664137195, 964918476456248216, -7768463348829798026, 7756599010305739999, 1151692851232028639, 5052762745532257677, -6938353080763027108, 6683494536543705153, -6365889230484419309, 7384531241040909254, -4442336472114294091, -3750727149103368055, -8877412501490432986, 2647020543458892072, 6274135164775101483, -3649936680386055010, -7567305039792354763, 
-2172430473128016611, -2414729292194865719, 1408230014943390277, 4364677156300888178, 755861929549178049, 8235690776885053324, 8581387345513151684, 5002718336674882238, -870258856608853484, -1483711216472527900, -1255676054139266272, 331419834776310203, 1622392659577676198, 1187388789833685773, -5932747467864101101, 8122153151262337345, -2146380548913123401, 8197662599537401443, 8506067402867065505, 3090918727224804345, 3744225329829803414, 7619357059829297568, 556700409131325501, 5248429818045721574, 4765015544140971772, 2971482486644427028, -9173245872558505964, -2210735674653180475, 1181488914969268296, 2089494377150191570, 2047582435108024564, -6175545876351053551, -3298063022651817995, -1325629347910090158, 7488863875459007234, -5497017350454887793, -6756613781665488411, -6330009014934080380, 7681124670001326945, -7376366050502109636, 7992819870754351976, 3544290132427354974, -254827227758952719, -5704064381235954635, -836110888355241863, 7698549346624041319, 2301405470858849916, -481871362892611650, 6645744400280051944, -3818320263511106188, 1562581647772413229, -8160175779708883692, -2739834834172049430, 3510749139267324868, -5348896967283946783, 3527384472005761253, 4400799032050497147, -8651238311044541754, -5523410360681048732, 7071021940179800806, -5960796444158211925, 8420370346185308708, -2728886487595348029, 3105537168230717181, -1517621972941887996, 8452927690910375980, 6016490440494310456, -6889421189750345676, -2831286529760432055, -2189711506599834998, -7186866319154931067, -7009440320556973546, -2037384534764248619, -2220440490024002478, -377216044270617087, -1134884987470025768, 5192116499655548796, -3347230676841655272, -9130715416947308740, 9204760499816567337, -2439108211250476827, 1538934571518472975, -337514931320682527, -1674538086055718391, -3843322791290462622, 953749838960659962, 3330174901016008157, -2756012697370451081, -3602025464158100127, 3704439510960864841, 3752924436635734010, -5000939990480386852, 6154714044831917923, 
-8885087254969833946, 8407434459532892399, 4101903548525500975, 7904189481335560232, -2940053311648165067, 7494278666585169078, 1192828145405490948, 4470543315284180590, 654377960824023051, 2686967977432480840, 1411203428069491170, -8646993717939343792, 1159570865425141646, 8797484166341348183, 1079738560110059198, 1312350127490152747, -5189555431814227920, -2519118820283044758, 6059756840747708677, 5774693484122764099, -4349072189170425833, 4035740869403628813, 1511153166937753622, -3218856330350607949, 7304305360157341382, 3235867109258004764, -8951317005617098076, -162420324859555355, -793345512783903889, 5117521076123648029, -5882312494461926993, 8597264656412748201, -2877683839203210639, 9189818776605217015, 3313374825585251422, -7874810056424078419, 5674307591120690376, 9164898553319153477, 4358330615806437879, -5310359817210733626, 3113922769030946482, -659865237366019522, -9119890847611597075, -9205810881436744029, 8288333514535517283, 110170749276212955, 4325548561407427018, -1734212991042000302, -6873916426971903298, -7698545135503972364, -6954734571985878843, 1921094010145318263, 8877598562894529515, 241048672326064469, 900676715600069606, 5777523205257439109, 3010110724142136055, 8665660702093987211, 8608092300575511901, 7093280185971788300, 2944189561076742298, -2953386007626714319, -4900156269772444277, -5634850246813770872, 2948626453088923273, 2176870549249253374, -7387349836523930484, -9134092894261200380, 3875564163537339084, 6061299752516911114, -8854152481465861942, -9205171033569700009, 1363364174055650687]

INFO 15:30:22 Create new Keyspace: KeyspaceMetadata{name=system_traces, params=KeyspaceParams{durable_writes=true, replication=ReplicationParams{class=org.apache.cassandra.locator.SimpleStrategy, replication_factor=2}}, tables=[org.apache.cassandra.config.CFMetaData@1ef21588[cfId=c5e99f16-8677-3914-b17e-960613512345,ksName=system_traces,cfName=sessions,flags=[COMPOUND],params=TableParams{comment=tracing sessions, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=0, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(),partitionColumns=[[] | [client command coordinator duration request started_at parameters]],partitionKeyColumns=[session_id],clusteringColumns=[],keyValidator=org.apache.cassandra.db.marshal.UUIDType,columnMetadata=[client, command, session_id, coordinator, request, started_at, duration, parameters],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@43aa6aac[cfId=8826e8e9-e16a-3728-8753-3bc1fc713c25,ksName=system_traces,cfName=events,flags=[COMPOUND],params=TableParams{comment=tracing events, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=0, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, 
max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.TimeUUIDType),partitionColumns=[[] | [activity source source_elapsed thread]],partitionKeyColumns=[session_id],clusteringColumns=[event_id],keyValidator=org.apache.cassandra.db.marshal.UUIDType,columnMetadata=[activity, session_id, thread, event_id, source, source_elapsed],droppedColumns={},triggers=[],indexes=[]]], views=[], functions=[], types=[]}

INFO 15:30:22 Not submitting build tasks for views in keyspace system_traces as storage service is not initialized

INFO 15:30:22 Initializing system_traces.events

INFO 15:30:22 Initializing system_traces.sessions

INFO 15:30:22 Create new Keyspace: KeyspaceMetadata{name=system_distributed, params=KeyspaceParams{durable_writes=true, replication=ReplicationParams{class=org.apache.cassandra.locator.SimpleStrategy, replication_factor=3}}, tables=[org.apache.cassandra.config.CFMetaData@7a49fac6[cfId=759fffad-624b-3181-80ee-fa9a52d1f627,ksName=system_distributed,cfName=repair_history,flags=[COMPOUND],params=TableParams{comment=Repair history, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=0, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.TimeUUIDType),partitionColumns=[[] | [coordinator exception_message exception_stacktrace finished_at parent_id range_begin range_end started_at status participants]],partitionKeyColumns=[keyspace_name, columnfamily_name],clusteringColumns=[id],keyValidator=org.apache.cassandra.db.marshal.CompositeType(org.apache.cassandra.db.marshal.UTF8Type,org.apache.cassandra.db.marshal.UTF8Type),columnMetadata=[status, id, coordinator, finished_at, participants, exception_stacktrace, parent_id, range_end, range_begin, exception_message, keyspace_name, started_at, columnfamily_name],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@19525fa0[cfId=deabd734-b99d-3b9c-92e5-fd92eb5abf14,ksName=system_distributed,cfName=parent_repair_history,flags=[COMPOUND],params=TableParams{comment=Repair history, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, 
gc_grace_seconds=0, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(),partitionColumns=[[] | [exception_message exception_stacktrace finished_at keyspace_name started_at columnfamily_names options requested_ranges successful_ranges]],partitionKeyColumns=[parent_id],clusteringColumns=[],keyValidator=org.apache.cassandra.db.marshal.TimeUUIDType,columnMetadata=[requested_ranges, exception_message, keyspace_name, successful_ranges, started_at, finished_at, options, exception_stacktrace, parent_id, columnfamily_names],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@59907bc9[cfId=5582b59f-8e4e-35e1-b913-3acada51eb04,ksName=system_distributed,cfName=view_build_status,flags=[COMPOUND],params=TableParams{comment=Materialized View build status, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=0, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.UUIDType),partitionColumns=[[] | [status]],partitionKeyColumns=[keyspace_name, 
view_name],clusteringColumns=[host_id],keyValidator=org.apache.cassandra.db.marshal.CompositeType(org.apache.cassandra.db.marshal.UTF8Type,org.apache.cassandra.db.marshal.UTF8Type),columnMetadata=[status, keyspace_name, view_name, host_id],droppedColumns={},triggers=[],indexes=[]]], views=[], functions=[], types=[]}

INFO 15:30:22 Not submitting build tasks for views in keyspace system_distributed as storage service is not initialized

INFO 15:30:22 Initializing system_distributed.parent_repair_history

INFO 15:30:22 Initializing system_distributed.repair_history

INFO 15:30:22 Initializing system_distributed.view_build_status

INFO 15:30:22 Node /172.17.0.4 state jump to NORMAL

INFO 15:30:22 Create new Keyspace: KeyspaceMetadata{name=system_auth, params=KeyspaceParams{durable_writes=true, replication=ReplicationParams{class=org.apache.cassandra.locator.SimpleStrategy, replication_factor=1}}, tables=[org.apache.cassandra.config.CFMetaData@2bcd7a78[cfId=5bc52802-de25-35ed-aeab-188eecebb090,ksName=system_auth,cfName=roles,flags=[COMPOUND],params=TableParams{comment=role definitions, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=7776000, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(),partitionColumns=[[] | [can_login is_superuser salted_hash member_of]],partitionKeyColumns=[role],clusteringColumns=[],keyValidator=org.apache.cassandra.db.marshal.UTF8Type,columnMetadata=[role, salted_hash, member_of, can_login, is_superuser],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@14f7f6de[cfId=0ecdaa87-f8fb-3e60-88d1-74fb36fe5c0d,ksName=system_auth,cfName=role_members,flags=[COMPOUND],params=TableParams{comment=role memberships lookup table, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=7776000, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, 
compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.UTF8Type),partitionColumns=[[] | []],partitionKeyColumns=[role],clusteringColumns=[member],keyValidator=org.apache.cassandra.db.marshal.UTF8Type,columnMetadata=[role, member],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@53491684[cfId=3afbe79f-2194-31a7-add7-f5ab90d8ec9c,ksName=system_auth,cfName=role_permissions,flags=[COMPOUND],params=TableParams{comment=permissions granted to db roles, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=7776000, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.UTF8Type),partitionColumns=[[] | [permissions]],partitionKeyColumns=[role],clusteringColumns=[resource],keyValidator=org.apache.cassandra.db.marshal.UTF8Type,columnMetadata=[resource, role, permissions],droppedColumns={},triggers=[],indexes=[]], org.apache.cassandra.config.CFMetaData@24fc2ad0[cfId=5f2fbdad-91f1-3946-bd25-d5da3a5c35ec,ksName=system_auth,cfName=resource_role_permissons_index,flags=[COMPOUND],params=TableParams{comment=index of db roles with permissions granted on a resource, read_repair_chance=0.0, dclocal_read_repair_chance=0.0, bloom_filter_fp_chance=0.01, crc_check_chance=1.0, gc_grace_seconds=7776000, default_time_to_live=0, memtable_flush_period_in_ms=3600000, min_index_interval=128, max_index_interval=2048, speculative_retry=99PERCENTILE, caching={'keys' : 'ALL', 
'rows_per_partition' : 'NONE'}, compaction=CompactionParams{class=org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy, options={min_threshold=4, max_threshold=32}}, compression=org.apache.cassandra.schema.CompressionParams@cc79ec64, extensions={}, cdc=false},comparator=comparator(org.apache.cassandra.db.marshal.UTF8Type),partitionColumns=[[] | []],partitionKeyColumns=[resource],clusteringColumns=[role],keyValidator=org.apache.cassandra.db.marshal.UTF8Type,columnMetadata=[resource, role],droppedColumns={},triggers=[],indexes=[]]], views=[], functions=[], types=[]}

INFO 15:30:23 Not submitting build tasks for views in keyspace system_auth as storage service is not initialized

INFO 15:30:23 Initializing system_auth.resource_role_permissons_index

INFO 15:30:23 Initializing system_auth.role_members

INFO 15:30:23 Initializing system_auth.role_permissions

INFO 15:30:23 Initializing system_auth.roles

INFO 15:30:23 Waiting for gossip to settle before accepting client requests...

INFO 15:30:31 No gossip backlog; proceeding

INFO 15:30:31 Netty using native Epoll event loop

INFO 15:30:31 Using Netty Version: [netty-buffer=netty-buffer-4.0.39.Final.38bdf86, netty-codec=netty-codec-4.0.39.Final.38bdf86, netty-codec-haproxy=netty-codec-haproxy-4.0.39.Final.38bdf86, netty-codec-http=netty-codec-http-4.0.39.Final.38bdf86, netty-codec-socks=netty-codec-socks-4.0.39.Final.38bdf86, netty-common=netty-common-4.0.39.Final.38bdf86, netty-handler=netty-handler-4.0.39.Final.38bdf86, netty-tcnative=netty-tcnative-1.1.33.Fork19.fe4816e, netty-transport=netty-transport-4.0.39.Final.38bdf86, netty-transport-native-epoll=netty-transport-native-epoll-4.0.39.Final.38bdf86, netty-transport-rxtx=netty-transport-rxtx-4.0.39.Final.38bdf86, netty-transport-sctp=netty-transport-sctp-4.0.39.Final.38bdf86, netty-transport-udt=netty-transport-udt-4.0.39.Final.38bdf86]

INFO 15:30:31 Starting listening for CQL clients on /0.0.0.0:9042 (unencrypted)...

INFO 15:30:31 Not starting RPC server as requested. Use JMX (StorageService->startRPCServer()) or nodetool (enablethrift) to start it

INFO 15:30:33 Scheduling approximate time-check task with a precision of 10 milliseconds

INFO 15:30:33 Created default superuser role 'cassandra'

Step 3: Create a Second Cassandra Node

For simple testing, we start a second Cassandra container on the same Docker host. The second node connects to the first node via its container IP address, which we can find out as follows:

(dockerhost)$ sudo docker inspect --format='{{ .NetworkSettings.IPAddress }}' cassandra-node1

172.17.0.2

This information can be used in the next command by setting the CASSANDRA_SEEDS variable accordingly.

Note also that we have changed the port mapping to avoid port conflicts with the first Cassandra node:

(dockerhost)$ sudo docker run -it --rm --entrypoint="bash" --name cassandra-node2 \
    -p27000:7000 -p27001:7001 -p29042:9042 -p29160:9160 \
    -e CASSANDRA_SEEDS="$(sudo docker inspect --format='{{ .NetworkSettings.IPAddress }}' cassandra-node1)" \
    cassandra

Note that we have overridden the default entrypoint with bash, so we get a shell inside the container and can start Cassandra manually.

We now start Cassandra on the second node:

(container):/# /docker-entrypoint.sh cassandra -f

...

INFO 17:37:03 Starting listening for CQL clients on /0.0.0.0:9042 (unencrypted)...

INFO 17:37:03 Not starting RPC server as requested. Use JMX (StorageService->startRPCServer()) or nodetool (enablethrift) to start it

INFO 17:37:05 Created default superuser role 'cassandra'

On the first Cassandra node, we will see the following additional log lines:

INFO 17:36:21 Handshaking version with /172.17.0.3

INFO 17:36:21 Handshaking version with /172.17.0.3

INFO 17:36:22 InetAddress /172.17.0.3 is now DOWN

INFO 17:36:22 Handshaking version with /172.17.0.3

INFO 17:36:23 Handshaking version with /172.17.0.3

INFO 17:36:54 [Stream #beb912f0-bca3-11e6-a935-4b019c4b758d ID#0] Creating new streaming plan for Bootstrap

INFO 17:36:54 [Stream #beb912f0-bca3-11e6-a935-4b019c4b758d, ID#0] Received streaming plan for Bootstrap

INFO 17:36:54 [Stream #beb912f0-bca3-11e6-a935-4b019c4b758d, ID#0] Received streaming plan for Bootstrap

INFO 17:36:55 [Stream #beb912f0-bca3-11e6-a935-4b019c4b758d] Session with /172.17.0.3 is complete

INFO 17:36:55 [Stream #beb912f0-bca3-11e6-a935-4b019c4b758d] All sessions completed

INFO 17:36:55 Node /172.17.0.3 state jump to NORMAL

INFO 17:36:55 InetAddress /172.17.0.3 is now UP

Note: if you get the following error message:

Exception (java.lang.RuntimeException) encountered during startup: A node with address /172.17.0.3 already exists, cancelling join. Use cassandra.replace_address if you want to replace this node.

then you need to start the service with the following command instead:

(container):/# /docker-entrypoint.sh cassandra -f -Dcassandra.replace_address=$(ip addr show | grep eth0 | grep -v '@' | awk '{print $2}' | awk -F"\/" '{print $1}')

The error shows up if you have connected a Cassandra node to the cluster, then destroyed the node (by stopping the container) and started a new container. The new container re-claims the now unused IP address of the destroyed container. However, this address is still marked as unreachable within the cluster. To re-use the IP address in the cluster, we need the -Dcassandra.replace_address option. The command

(container):/# ip addr show | grep eth0 | grep -v '@' | awk '{print $2}' | awk -F"\/" '{print $1}'

172.17.0.3

returns the current IP address of eth0 of the Docker container and thus feeds the correct IP address to the -Dcassandra.replace_address option.
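The grep/awk pipeline can also be written as a single awk program that only picks the `inet` line ending in `eth0`. The following is a minimal sketch under that assumption: the function name `eth0_ip` is hypothetical (it is not part of the Cassandra image), and it expects the output of `ip addr show` on stdin, just like the original pipeline.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the Cassandra image): prints the IPv4
# address of eth0, given the output of `ip addr show` on stdin.
eth0_ip() {
  # The address line looks like "inet 172.17.0.3/16 ... scope global eth0":
  # keep the line whose first field is "inet" and whose last field is "eth0",
  # then drop the "/16" prefix length.
  awk '$1 == "inet" && $NF == "eth0" { split($2, addr, "/"); print addr[1] }'
}

# Usage inside the node container (commented out so the sketch runs anywhere):
#   /docker-entrypoint.sh cassandra -f -Dcassandra.replace_address="$(ip addr show | eth0_ip)"
```

Matching on the `inet` keyword and the interface name avoids relying on the `@` in the `eth0@ifNN` header line, which the original pipeline filters out with `grep -v '@'`.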

Step 4: Start a CQL Client Container

Now we want to add some data to the distributed noSQL database. For that, we start a third container that serves as a CQL client (CQL = Cassandra Query Language, which is similar to SQL). We start a CQL shell as follows:

(dockerhost)$ sudo docker run -it --rm -e CQLSH_HOST=$(sudo docker inspect --format='{{ .NetworkSettings.IPAddress }}' cassandra-node1) --name cassandra-client --entrypoint=cqlsh cassandra

Connected to Test Cluster at 172.17.0.2:9042.

[cqlsh 5.0.1 | Cassandra 3.9 | CQL spec 3.4.2 | Native protocol v4]

Use HELP for help.

cqlsh>

Step 5: Create Keyspace

Now let us create a keyspace. A keyspace is the counterpart of a database in SQL databases. With a replication factor of 2, each row is stored on two nodes:

cqlsh> create keyspace mykeyspace with replication = {'class':'SimpleStrategy','replication_factor' : 2};

cqlsh>

Upon successful creation, the prompt is printed again without an error message.
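Why can a replication factor of 2 survive the loss of one node? Each row is stored on two replicas, and cqlsh uses the consistency level ONE by default, so a single live replica is enough to answer a query. At the level QUORUM, floor(RF/2) + 1 replicas must respond, which for RF = 2 means both nodes. The following bash sketch does this arithmetic; the helper names `quorum` and `tolerated_down` are hypothetical.

```shell
#!/usr/bin/env bash
# Replicas that must respond at consistency level QUORUM: floor(RF/2) + 1
quorum() {
  local rf=$1
  echo $(( rf / 2 + 1 ))
}

# How many replicas may be down while requests at the given level still succeed
tolerated_down() {
  local rf=$1 level=$2
  case "$level" in
    ONE)    echo $(( rf - 1 )) ;;
    QUORUM) echo $(( rf - $(quorum "$rf") )) ;;
    *)      echo "unsupported level: $level" >&2; return 1 ;;
  esac
}

for level in ONE QUORUM; do
  echo "RF=2, $level: $(tolerated_down 2 "$level") node(s) may be down"
done
```

So the resiliency test in Step 10 relies on the default level ONE. In cqlsh, the current level can be shown or changed with the CONSISTENCY command (e.g. CONSISTENCY QUORUM); with QUORUM and RF = 2, the queries would fail as soon as one node is down.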

Step 6: Create Table

For adding data, we need to enter the keyspace and create a table:

cqlsh> use mykeyspace;

cqlsh:mykeyspace> create table usertable (userid int primary key, usergivenname varchar, userfamilyname varchar, userprofession varchar);

cqlsh:mykeyspace>

Step 7: Add Data

Now we can add our data:

cqlsh:mykeyspace> insert into usertable (userid, usergivenname, userfamilyname, userprofession) values (1, 'Oliver', 'Veits', 'Freelancer');

cqlsh:mykeyspace>

In this simple form, the CQL INSERT command has the same syntax as an SQL INSERT command.

Step 8 (optional): Update Data

We can also update a single column:

cqlsh:mykeyspace> update usertable set userprofession = 'IT Consultant' where userid = 1;

Now let us read the entry:

cqlsh:mykeyspace> select * from usertable where userid = 1;

 userid | userfamilyname | usergivenname | userprofession
--------+----------------+---------------+----------------
      1 |          Veits |        Oliver |  IT Consultant

(1 rows)

cqlsh:mykeyspace>

Step 9 (optional): Query on Data Other Than the Primary Key

In Cassandra, we need to explicitly allow data filtering if we query on a column that is neither part of the primary key nor indexed:

cqlsh:mykeyspace> select * from usertable where userprofession = 'IT Consultant';

InvalidRequest: Error from server: code=2200 [Invalid query] message="Cannot execute this query as it might involve data filtering and thus may have unpredictable performance. If you want to execute this query despite the performance unpredictability, use ALLOW FILTERING"

Since data filtering can be expensive, we add a secondary index on the userprofession column instead, so the query can run without ALLOW FILTERING:

cqlsh:mykeyspace> create index idx_dept on usertable(userprofession);

Now the same query should be successful:

cqlsh:mykeyspace> select * from usertable where userprofession = 'IT Consultant';

 userid | userfamilyname | usergivenname | userprofession
--------+----------------+---------------+----------------
      1 |          Veits |        Oliver |  IT Consultant

(1 rows)

Yes, perfect.

Step 10: Test Resiliency

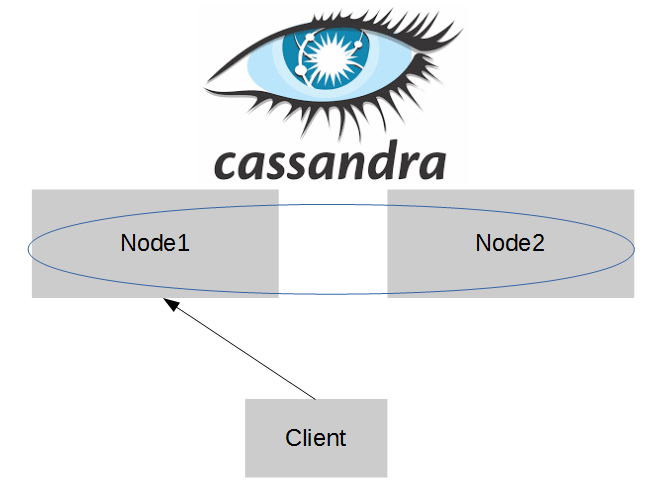

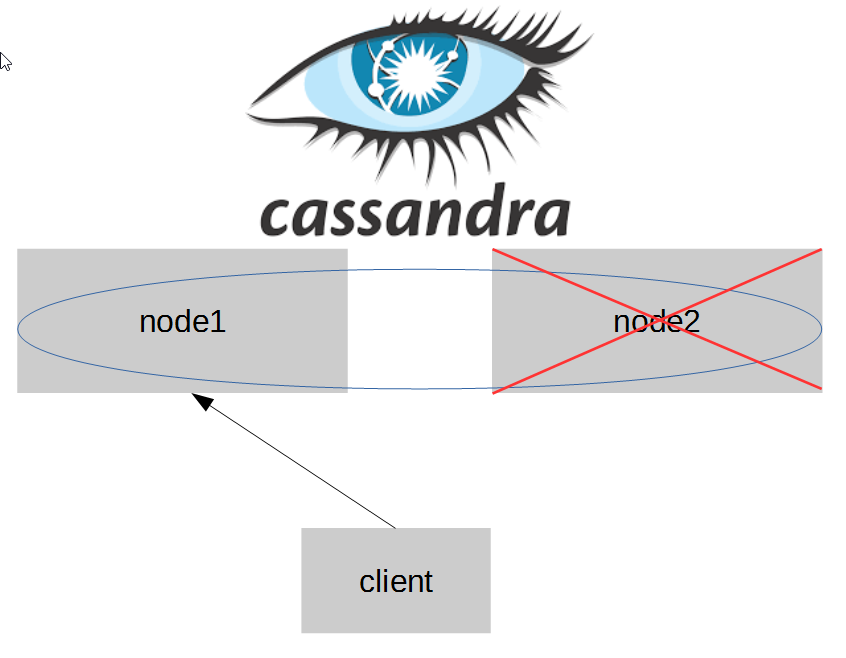

At the moment, we have the following topology (with all nodes and the client being Docker containers on the same Docker host):

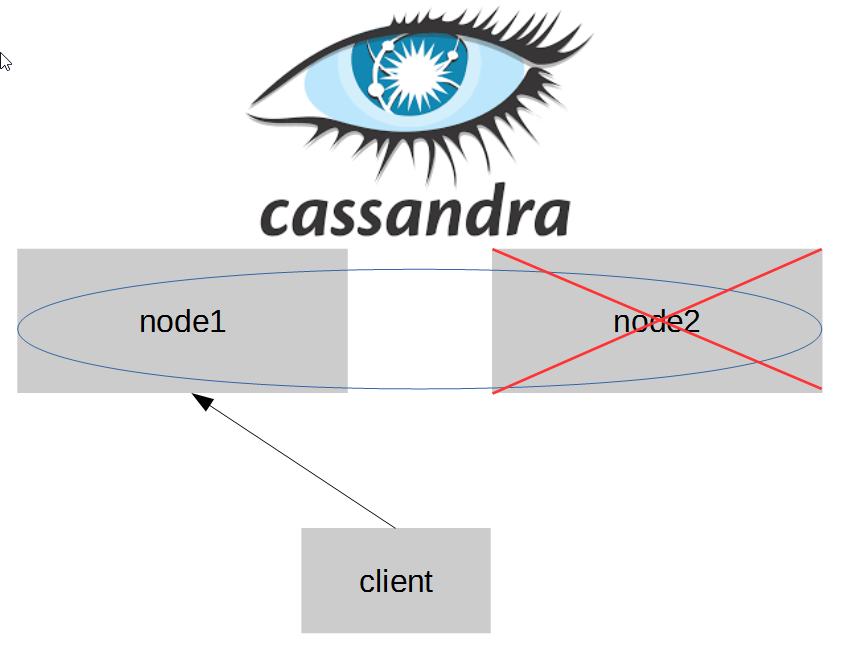

Now we test whether the data is retained if the Cassandra application on node2 is stopped. For that, we stop the application on node2 by pressing Ctrl-C in its terminal.

On node1 we see:

INFO 18:06:50 InetAddress /172.17.0.5 is now DOWN

INFO 18:06:51 Handshaking version with /172.17.0.5

On the client we see that the data is still there:

cqlsh:mykeyspace> select * from usertable where userprofession = 'IT Consultant';

 userid | userfamilyname | usergivenname | userprofession
--------+----------------+---------------+----------------
      1 |          Veits |        Oliver |  IT Consultant

(1 rows)

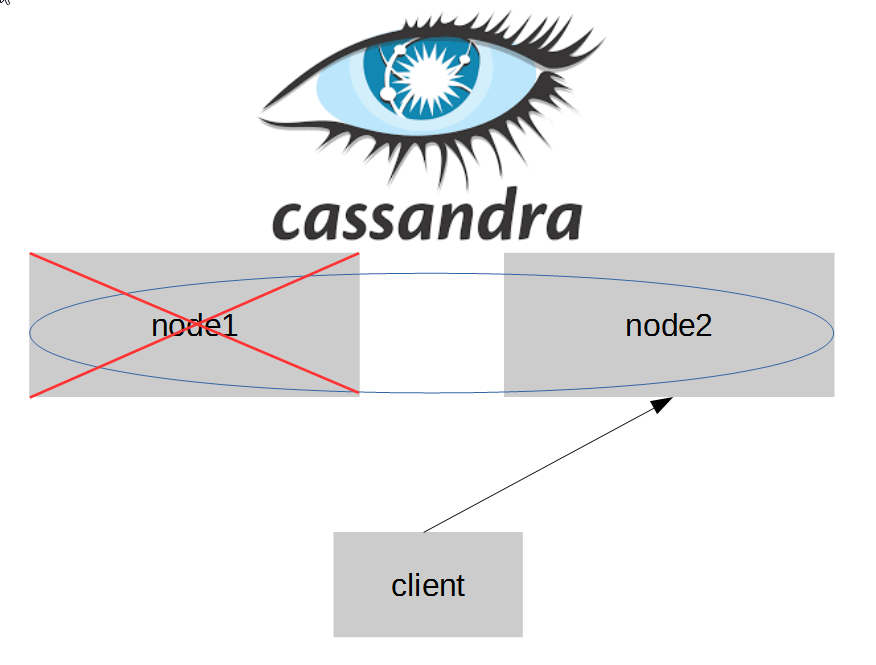

Now let us start the Cassandra application on node2 again (by running /docker-entrypoint.sh cassandra -f in the node2 container, as before) and wait until the nodes are synchronized. On node1, we will get a log similar to:

INFO 17:36:35 Handshaking version with /172.17.0.4

INFO 17:38:58 Handshaking version with /172.17.0.4

INFO 17:38:58 Handshaking version with /172.17.0.4

INFO 17:39:00 Node /172.17.0.4 has restarted, now UP

INFO 17:39:00 Node /172.17.0.4 state jump to NORMAL

INFO 17:39:00 InetAddress /172.17.0.4 is now UP

INFO 17:39:00 Updating topology for /172.17.0.4

INFO 17:39:00 Updating topology for /172.17.0.4
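Instead of waiting for a fixed amount of time, one could poll `nodetool status` until all nodes are reported as UN (Up/Normal). The following is a minimal bash sketch, assuming `nodetool` is on the PATH inside the node containers; the helper names `count_up_normal` and `wait_for_up_normal` are hypothetical, and the 5-minute timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Count the nodes that `nodetool status` reports as "UN" (Up/Normal);
# expects the nodetool output on stdin. Prints 0 if there is no match.
count_up_normal() {
  awk '$1 == "UN" { n++ } END { print n + 0 }'
}

# Hypothetical helper: poll until at least $2 nodes are UN, as seen from
# container $1. Gives up after about 5 minutes (60 attempts x 5 s).
wait_for_up_normal() {
  local container=$1 expected=$2 i
  for i in $(seq 1 60); do
    if [ "$(sudo docker exec "$container" nodetool status 2>/dev/null | count_up_normal)" -ge "$expected" ]; then
      return 0
    fi
    sleep 5
  done
  echo "timed out waiting for $expected UN nodes" >&2
  return 1
}

# Usage on the Docker host (not executed here):
#   wait_for_up_normal cassandra-node1 2 && echo "both nodes are Up/Normal"
```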

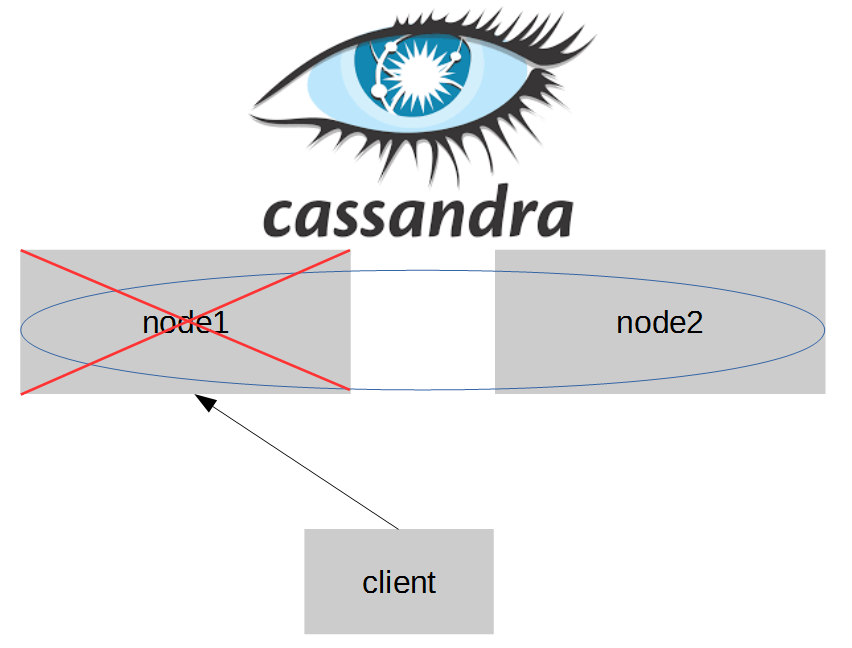

Now we can stop Cassandra on node1 by pressing Ctrl-C in terminal 1. On node2, we will get a message similar to:

INFO 17:41:32 InetAddress /172.17.0.3 is now DOWN

INFO 17:41:32 Handshaking version with /172.17.0.3

At the same time, the node1 container is destroyed, since we have not overridden the entrypoint for node1 and have given the --rm option in the docker run command in Step 2.

Now, we verify that the data is still retained:

cqlsh:mykeyspace> select * from usertable where userprofession = 'IT Consultant';

NoHostAvailable:

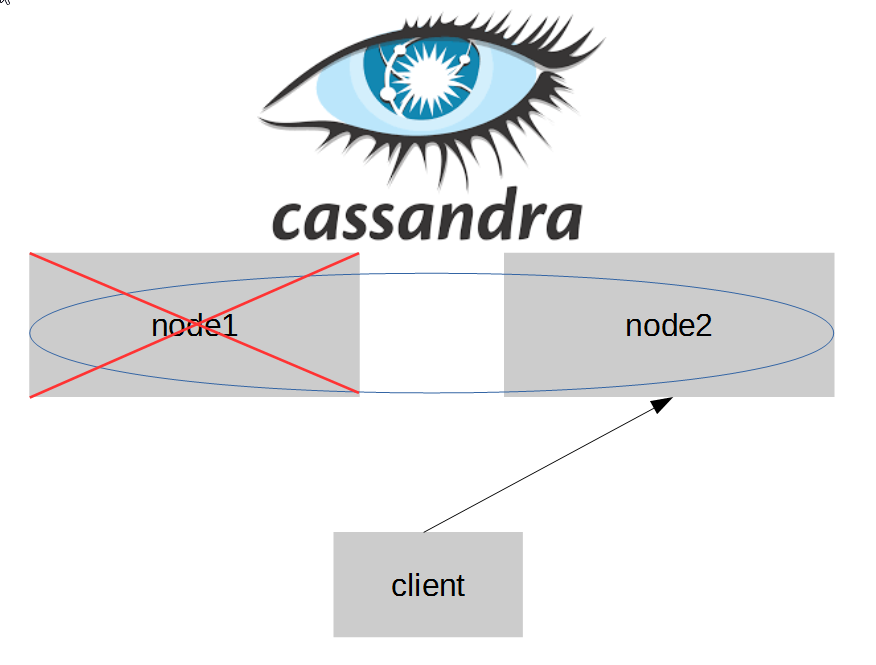

Of course: we used node1's IP address and port when we started the client.

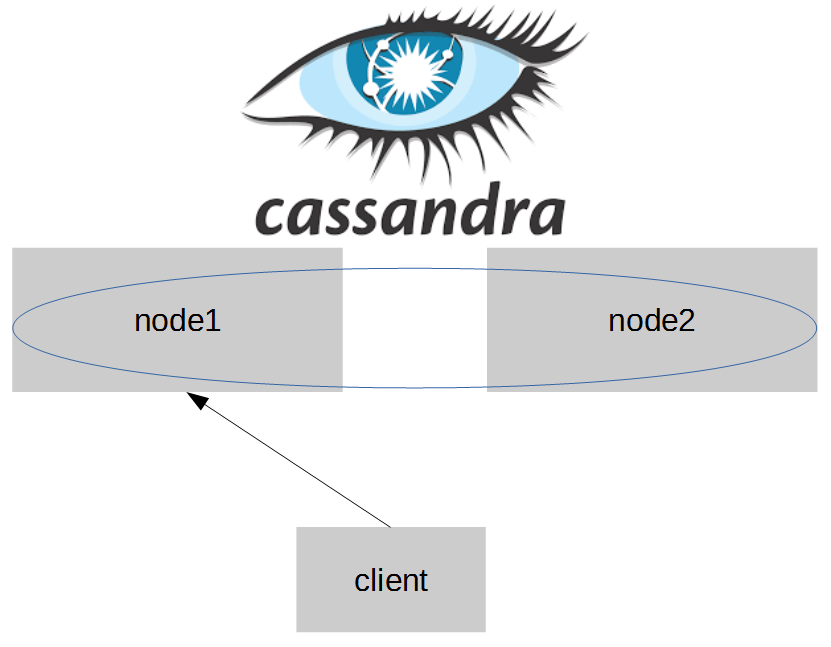

Let us now connect to node2 by entering "exit" and starting a new client container as follows:

(dockerhost)$ sudo docker run -it --rm -e CQLSH_HOST=$(sudo docker inspect --format='{{ .NetworkSettings.IPAddress }}' cassandra-node2) -e CQLSH_PORT=9042 --name cassandra-client --entrypoint=cqlsh cassandra

Connected to Test Cluster at 172.17.0.4:9042.

[cqlsh 5.0.1 | Cassandra 3.9 | CQL spec 3.4.2 | Native protocol v4]

Use HELP for help.

cqlsh>

To be honest, I was a little bit confused here and had expected that I would need to connect to port 29042 instead, since I had started node2 with a port mapping from 29042 (outside port) to 9042 (container port). But this is wrong: from the Docker host, we can directly access the node2 container IP address with all its ports, including port 9042. Only if we want to access the container from outside the Docker host do we need to use port 29042 of the Docker host IP address instead of port 9042 of the node2 container:

(dockerhost)$ netstat -an | grep 9042

tcp6 0 0 :::29042 :::* LISTEN
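To find out which Docker host port is mapped to a container port, `docker port <container> <port>` can be used; for node2 it should print something like 0.0.0.0:29042 (newer Docker versions may print an additional IPv6 line). The sketch below extracts the port number from that output; the helper name `host_port` is hypothetical, and `<dockerhost-ip>` in the usage comment is a placeholder for your Docker host's address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker port <container> <port>` output on stdin
# and print the host port of the first mapping (the text after the last colon).
host_port() {
  head -n 1 | awk -F: '{ print $NF }'
}

# Usage on the Docker host (not executed here):
#   port=$(sudo docker port cassandra-node2 9042 | host_port)   # e.g. 29042
# From a machine outside the Docker host, connect to the host's IP on that port:
#   cqlsh <dockerhost-ip> "$port"
```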

Anyway, we are now connected to the second node and can check whether the data is retained:

cqlsh> use mykeyspace;

cqlsh:mykeyspace> select * from usertable where userid = 1;

 userid | userfamilyname | usergivenname | userprofession
--------+----------------+---------------+----------------
      1 |          Veits |        Oliver |  IT Consultant

(1 rows)

Perfect! The data is still there.

Appendix A: No keyspace has been specified.

If you get an error message like the following,

cqlsh> select * from usertable where userprofession = 'IT Consultant';

InvalidRequest: Error from server: code=2200 [Invalid query] message="No keyspace has been specified. USE a keyspace, or explicitly specify keyspace.tablename"

then you have forgotten to issue the "use mykeyspace;" command first (alternatively, you can qualify the table name as mykeyspace.usertable, as the error message suggests):

cqlsh> use mykeyspace;

cqlsh:mykeyspace> select * from usertable where userid = 1;

 userid | userfamilyname | usergivenname | userprofession
--------+----------------+---------------+----------------
      1 |          Veits |        Oliver |  IT Consultant

(1 rows)

Summary

In this blog post we have performed the following tasks:

- Introduced Cassandra with a brief comparison to Hadoop

- Started a Cassandra node in a Docker container

- Spun up a second Cassandra node and built a Cassandra cluster

- Started a Cassandra client in a container

- Added data with replication factor 2 and ran some CQL commands as a warm-up

- Shut down node2 and verified that the data is still available

- Started node2 again and waited a few seconds

- Shut down node1 and verified that the data is still available on node2

With this test, we could verify that data replication between the nodes of a Cassandra cluster works and that no data is lost if one of the two nodes fails.